The following text is provided by AMD and published verbatim.

Performance Is Everything – So How Do We Measure It?

While many factors impact the PC selection process, performance is almost always #1. Regardless of an organization’s size or the products and services it delivers, businesses buy technology to make people and processes more productive.

Even as data security concerns have gotten more attention in the past few years, performance is still the guiding force in decision-making. Whether putting out an RFQ or running their own internal evaluation, companies rely on specifications or test metrics to figure out which PC is right for their needs.

Gone are the days when companies were driven mostly by price, settling for bulky PCs or heavy mobile devices with “good enough” performance. Today’s businesses run on critical digital foundations that need every advantage to succeed, so they are willing to invest more in performance that pays much greater returns.

This paper explores different techniques and strategies for evaluating performance, along with the pros and cons of each. Using one of the benchmark-based strategies, two modern processors are evaluated as an example of how a comprehensive performance review can clarify how the two CPUs compare.

Old School Metrics: Rusty And Unreliable

Traditionally, companies have used physical specifications, such as processor frequency and cache size, to set a necessary baseline for PC performance. Unfortunately, these specifications are an incomplete, and often inaccurate, way to predict performance on actual application workloads.

Using Frequency

There are two problems with using frequency as a meaningful measurement of performance.

First, two different processors operating at the same frequency can deliver dramatically different performance levels. This is due to the efficiency of the underlying architectural implementation, measured in Instructions Per Clock (IPC), which is invisible to basic spec comparisons.

Second, frequency is not constant for most modern processors. This is especially true for today’s notebook PCs, where frequency is constrained by thermal considerations. Frequency also varies dramatically based on everything from the task being performed to the number of cores in use.

A Closer Look At The Math

RFQs often use processor frequency as a way to estimate expected performance. It’s a very inaccurate technique that persists mostly as an artifact of the early days of the PC market, and a glance at the basic performance equation shows why it’s incomplete.

$$\text{CPU time} = I \times \text{CPI} \times T$$

| Symbol | Meaning |
|---|---|
| I | Number of instructions in the program |
| CPI | Average clock cycles per instruction (the inverse of IPC) |
| T | Clock cycle time (the inverse of the clock frequency) |

While T, the inverse of the processor frequency, is a key factor, CPI, the average number of clock cycles needed per instruction, has an equal impact. Why?

As processors become more sophisticated, with superscalar designs, improved caches, and deep instruction pipelines, they can execute more than one instruction per clock cycle. Silicon designers weighing multiple potential options often find that the best design reduces the number of clock cycles required to execute a set of instructions, rather than simply increasing the clock frequency.

For example, assume an application process requires one billion ($1\times10^{9}$) instructions to complete, and a particular processor has an average CPI of 3.7 and a frequency of 2 GHz (a cycle time of 0.5 ns).

Completing this task would require $1\times10^{9} \times 3.7 \times 0.5\times10^{-9}\ \text{s} = 1.85\ \text{s}$.

Now assume a second processor, with a different architecture, operating at a frequency 25% higher (2.5 GHz, a cycle time of 0.4 ns) but requiring about 27% more cycles per instruction, with an average CPI of 4.7.

Completing this task would require $1\times10^{9} \times 4.7 \times 0.4\times10^{-9}\ \text{s} = 1.88\ \text{s}$.

This means the second processor is roughly 2% slower, despite having a 25% higher frequency. As this shows, while frequency can predict the relative performance of two parts with the same design, it is too simplistic for comparing parts from different families.
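The worked example can be checked by coding the performance equation directly. This is a minimal Python sketch; it assumes, as the example’s figures require to produce 1.85 s and 1.88 s, that 3.7 and 4.7 are average clock cycles per instruction (CPI, the inverse of IPC). The function name `cpu_time_seconds` is purely illustrative.

```python
# Minimal sketch of the classic CPU performance equation:
#   CPU time = instruction count * cycles per instruction (CPI) * cycle time
# The processor figures are the hypothetical ones from the worked example.

def cpu_time_seconds(instructions: float, cpi: float, freq_hz: float) -> float:
    """Return execution time in seconds; cycle time T is 1 / frequency."""
    cycle_time = 1.0 / freq_hz
    return instructions * cpi * cycle_time


INSTRUCTIONS = 1e9  # one billion instructions

t1 = cpu_time_seconds(INSTRUCTIONS, cpi=3.7, freq_hz=2.0e9)  # 2 GHz
t2 = cpu_time_seconds(INSTRUCTIONS, cpi=4.7, freq_hz=2.5e9)  # 25% higher clock

print(f"Processor 1: {t1:.2f} s")
print(f"Processor 2: {t2:.2f} s")
print(f"Processor 2 is {(t2 / t1 - 1) * 100:.1f}% slower")
```

Running it reports 1.85 s and 1.88 s, and a slowdown of about 1.6%, which the text rounds to roughly 2%. Swapping in a different CPI or frequency shows how quickly a clock-speed advantage can be erased by a less efficient architecture.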

Frequency vs. Thermals

With notebook processors especially, the idea of fixed boost and base frequencies is an oversimplification: the actual frequency at any given time depends on the workload, the power mode, and the platform’s thermal design. For example, the higher the boost frequency, the more heat is produced. Depending on the notebook’s thermal design, including fan size and speed as well as the manufacturer’s design constraints for skin temperature, reaching peak boost can reduce sustained performance over time.

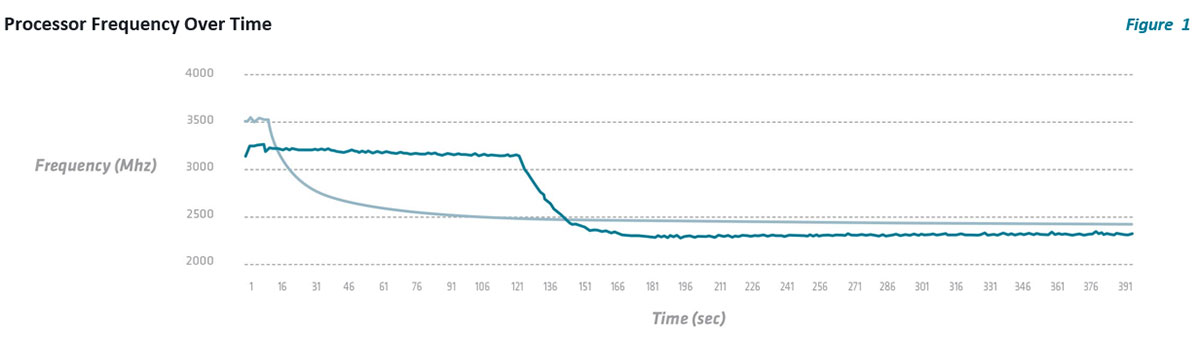

Figure 1 shows the frequency of two processors, with different designs, over time.

- The first processor has a higher boost frequency and quickly jumps to this maximum in the first few seconds. This is also true for the second processor, although its maximum is not as high.

- However, after about 15 seconds, the first processor rapidly reduces its operating frequency in response to the building thermal load, while the second processor sustains a higher frequency for minutes before throttling lower.

The total amount of work done is represented by the area under the curve. For longer, more complex tasks where users often have to wait for results, the second processor, despite its lower boost frequency, soon overtakes the first and completes more work over time. Frequency specifications alone give no indication of this real-world behavior.
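The area-under-the-curve idea can be illustrated with a small numerical integration. This Python sketch uses two hypothetical frequency traces whose shapes loosely mimic the behavior described for Figure 1; the values are invented for illustration and are not read from the figure. It also treats clock cycles as a stand-in for work, which assumes both processors do the same work per cycle, an assumption that, as the previous section explains, rarely holds across architectures.

```python
# Minimal sketch: total work approximated as the area under a
# frequency-vs-time curve. The traces are HYPOTHETICAL shapes, not
# measurements from Figure 1, and cycles are used as a proxy for work.

def cumulative_cycles(freq_ghz, dt_s):
    """Trapezoidal running integral of frequency over time.

    freq_ghz: frequency samples (GHz) taken every dt_s seconds.
    Returns a list where entry i is the number of clock cycles
    (in billions) completed by time i * dt_s.
    """
    totals = [0.0]
    for prev, cur in zip(freq_ghz, freq_ghz[1:]):
        totals.append(totals[-1] + 0.5 * (prev + cur) * dt_s)
    return totals


def first_crossover(a, b):
    """Return the first sample index (after 0) where b exceeds a, or None."""
    for i, (x, y) in enumerate(zip(a, b)):
        if i > 0 and y > x:
            return i
    return None


DT = 1.0        # one sample per second
DURATION = 120  # two minutes of sustained load

# Hypothetical processor 1: higher boost (4.5 GHz), throttles to 2.5 GHz at 15 s.
proc1 = [4.5 if t < 15 else 2.5 for t in range(DURATION + 1)]
# Hypothetical processor 2: lower boost (4.0 GHz), sustains 3.5 GHz after 15 s.
proc2 = [4.0 if t < 15 else 3.5 for t in range(DURATION + 1)]

work1 = cumulative_cycles(proc1, DT)
work2 = cumulative_cycles(proc2, DT)

print(f"After {DURATION} s: processor 1 = {work1[-1]:.1f}, "
      f"processor 2 = {work2[-1]:.1f} billion cycles")
print(f"Processor 2 pulls ahead at t = {first_crossover(work1, work2) * DT:.0f} s")
```

With these made-up traces, processor 1 leads for the first 21 seconds, processor 2 pulls ahead at 22 s, and after two minutes it has completed about 427 billion cycles versus 329 billion. The trapezoidal rule is used only because it is a simple, standard way to approximate the area under sampled data.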