And provides details on (Milan-X) 3rd Gen Epyc processors with 3D V-Cache.

AMD’s Accelerated Data Center Premiere presentation is now over. The event covered datacentre CPUs and GPUs, and wrapped up with an update on AMD’s exascale computing technology. The products under the spotlight were the newest and most powerful Epyc processors and Instinct accelerators, with which AMD aims to claim leadership in accelerated computing.

AMD 3rd Gen Epyc processors with 3D V-Cache (Milan-X)

AMD is going to pioneer the use of 3D chiplet technology in the datacentre. We have seen the chip designer talk about 3D V-Cache before, and the benefits of this technological application were demonstrated on a consumer Ryzen 9 5900X chip earlier in the year (up to a 15 per cent gaming performance uplift). Now we see that 3D chiplet technology in general, and 3D V-Cache in particular, is coming to the Epyc processor line under the codename Milan-X.

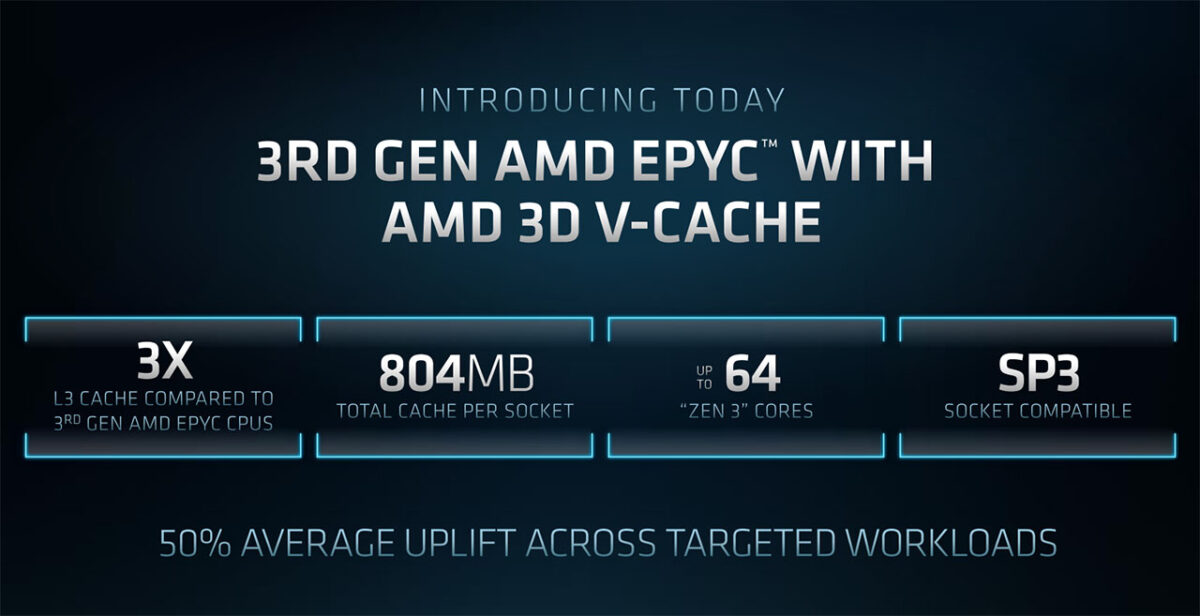

According to internal testing, the benefits of AMD 3D V-Cache can be even more dramatic in targeted datacentre workloads. These updated socket SP3 processors need a BIOS update for Milan-X support, but software doesn’t require patching. Boasting up to 64 Zen 3 cores, they come packing 3x the L3 cache of their brethren, for up to 804MB of total cache (L1, L2 and L3) per socket. The result of this carefully-concocted complementary technology is a “50 per cent average uplift across targeted workflows,” says AMD.
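
For readers who want to check the maths, here is a rough sketch of how the 804MB figure can be reproduced. The per-core and per-die sizes are not stated in the presentation coverage above; they are assumptions based on published Zen 3 specifications (64KB of L1 and 512KB of L2 per core, eight chiplets per socket, 32MB of L3 per chiplet on Milan and 96MB on Milan-X). The function name is purely illustrative. Note that the sum only reaches 804MB if L1 is counted alongside L2 and L3.

```python
KB_PER_MB = 1024


def total_cache_mb(cores, chiplets, l3_per_chiplet_mb,
                   l2_per_core_kb=512, l1_per_core_kb=64):
    """Return per-socket cache sizes in MB (all defaults are assumptions)."""
    l1 = cores * l1_per_core_kb / KB_PER_MB
    l2 = cores * l2_per_core_kb / KB_PER_MB
    l3 = chiplets * l3_per_chiplet_mb
    return {"l1": l1, "l2": l2, "l3": l3, "total": l1 + l2 + l3}


# Assumed configurations: 64 cores across 8 chiplets in both cases.
milan = total_cache_mb(cores=64, chiplets=8, l3_per_chiplet_mb=32)
milan_x = total_cache_mb(cores=64, chiplets=8, l3_per_chiplet_mb=96)

print(milan_x)                       # per-level breakdown for Milan-X
print(milan_x["l3"] / milan["l3"])   # L3 ratio, Milan-X vs Milan
```

Under these assumptions the L3 alone accounts for 768MB, which is why the headline “3x” claim and the 804MB total line up.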

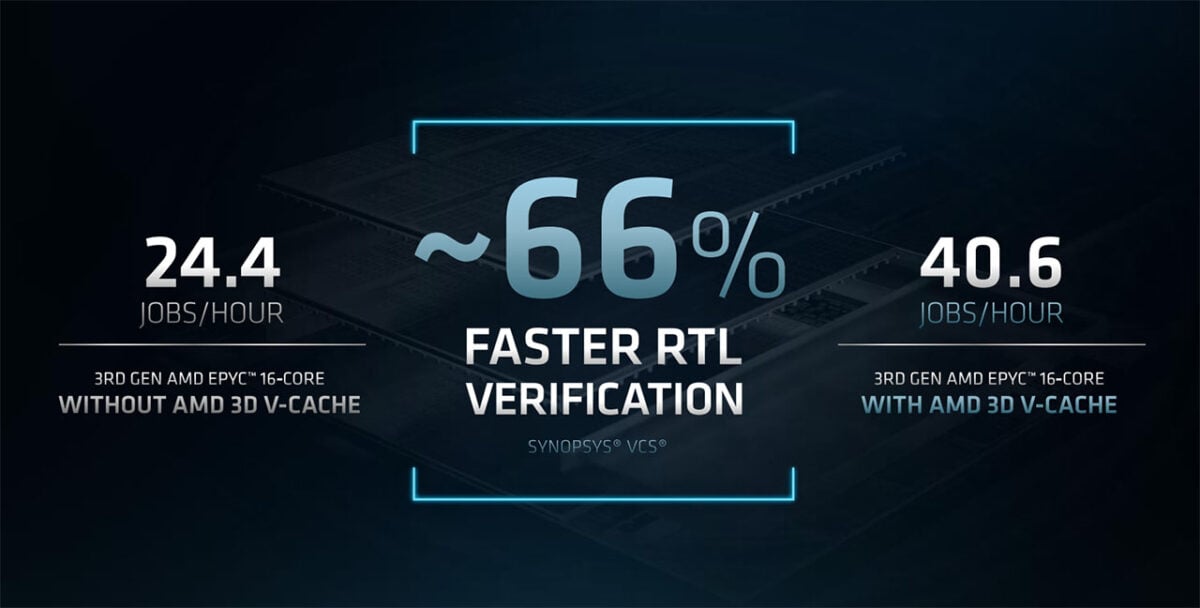

Applications that see particular benefit from these new processors include technical computing fields such as finite-element analysis, structural analysis, computational fluid dynamics, and electronic design automation. By AMD’s own figures, Milan was already 30 to 40 per cent faster than Intel Ice Lake in the technical computing tasks it highlighted. At the presentation today, AMD demonstrated Milan-X completing chip design verification work 66 per cent faster than Milan. Of course, this is a best-case scenario for the cache-tripling technology, and it shows AMD targeting specific workflows with specialised chips.

In addition to the hardware, AMD boasts of its close co-operation with ISV ecosystem partners such as Altair, Ansys, Cadence, Siemens, and Synopsys, which it says translates into fast, efficient and reliable performance.

AMD 3rd Gen Epyc processors with 3D V-Cache will become available starting Q1 2022.

As most of the readers of this news on Club386 will also be PC enthusiasts and gamers, please keep in mind that AMD intends to introduce Ryzen processors with 3D V-Cache in Q1 2022, as well.

AMD Instinct MI200 series datacentre accelerators

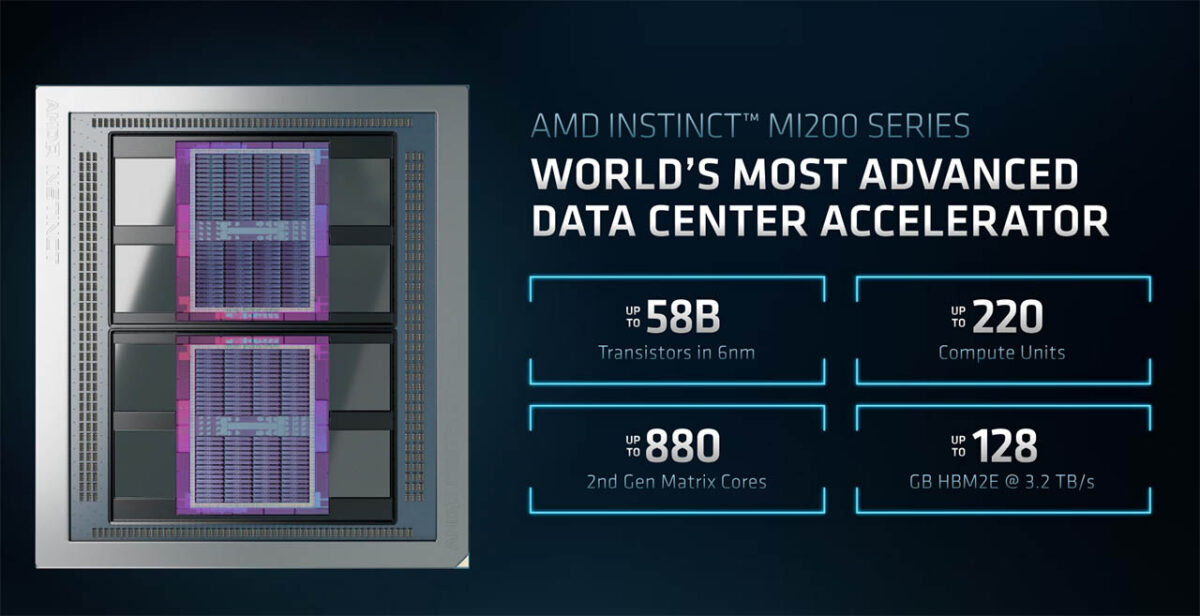

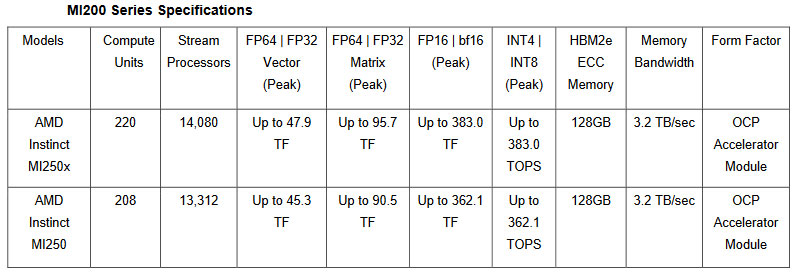

With its latest datacentre GPU-based accelerators, AMD has implemented a number of key innovations to try and cement a lead in the industry. Looking at the data and infographics, it doesn’t seem to have held back in any way, with the Instinct MI200 series boasting the following: dual AMD CDNA2 dies, up to 58 billion transistors at 6nm, up to 220 compute units, up to 128GB of HBM2e at 3.2TB/s, and up to 880 2nd gen matrix cores.

To make all of the above work in swift harmony, AMD has connected the dual CDNA2 dies using ultra-high-bandwidth 3rd gen Infinity Fabric interconnects with coherent CPU-to-GPU interconnects, and a 2.5D elevated fanout bridge (EFB). According to AMD, “EFB technology delivers 1.8x more cores and 2.7x higher memory bandwidth vs. AMD previous gen GPUs.”
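
As a quick sanity check on those multipliers, the sketch below compares the top-end MI200 figures quoted above with the previous-generation Instinct MI100. The MI100 numbers (120 compute units and roughly 1.2TB/s of memory bandwidth) are not from AMD’s presentation as reported here; they are assumptions taken from that card’s published specifications, and the variable names are illustrative only.

```python
# Top-end MI200 series figures as quoted in the article.
mi200 = {"compute_units": 220, "matrix_cores": 880, "bandwidth_tbs": 3.2}

# Assumed previous-gen baseline (Instinct MI100 published specs).
mi100 = {"compute_units": 120, "bandwidth_tbs": 1.2}

cu_ratio = mi200["compute_units"] / mi100["compute_units"]
bw_ratio = mi200["bandwidth_tbs"] / mi100["bandwidth_tbs"]
matrix_cores_per_cu = mi200["matrix_cores"] // mi200["compute_units"]

print(f"Compute units: {cu_ratio:.1f}x")
print(f"Memory bandwidth: {bw_ratio:.1f}x")
print(f"Matrix cores per compute unit: {matrix_cores_per_cu}")
```

If that baseline is the right one, the rounded ratios match AMD’s 1.8x and 2.7x claims, and the 880 matrix cores work out to four per compute unit.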

What does all this mean for performance? AMD reckons its new Instinct MI200 series is “shattering performance barriers in HPC and AI,” and in its own tests the MI200’s peak performance put it significantly ahead of the Nvidia A100 in both HPC and AI applications and benchmarks.

AMD is introducing its Instinct MI200 series now in the OCP Accelerator Module (OAM) form factor. It will be following up with PCIe cards such as the MI210 PCIe “soon.”

AMD began wrapping up the presentation by talking about updates to important supporting technology like the ROCm Open Software Platform for GPU Compute. This will be moving to version 5.0 shortly with expanded support, optimised performance, and tweaks with developers in mind.

The 128-core monster on the horizon

Leaving some tasty morsels till the very end, AMD announced that its next-generation Zen 4-based Genoa Epyc processor will be supplemented in 2023 by new chips known by the codename Bergamo. Packing up to 128 cores, up from a maximum of 96 cores on Genoa, they are to be built on a 5nm Zen 4c platform, with the lower-case c referring to their projected use in cloud-computing infrastructure.

Pushing more cores into a similar thermal envelope to Genoa means that AMD will need to fine-tune the voltage/frequency curve for Bergamo. Educated guesses suggest the chips will run at lower clock speeds to compensate for the need to power more cores. AMD further says it has optimised the caching structure to better suit cloud needs.

Bergamo is an intriguing product, once again focussed on technology leadership in a specific market, and it shows AMD purposely widening the stack to take advantage of growth sectors. We’ll add more details as and when we get them.