Intel has recently published an article that describes, at great length, its latest advances in path-traced light simulation and neural graphics research. Wonderful bedtime reading.

Thanks to Nvidia and its RTX line of GPUs, consumer ray tracing has been around since 2018, and Team Green’s graphics cards have got better at path tracing with every new generation. However, path tracing still requires a considerable amount of processing power to run at reasonable frame rates. Anything below the almighty GeForce RTX 4090 struggles to run AAA games with ray tracing enabled at a stable 60fps without some form of temporal upscaling, because real-world light simulation, in the way it is calculated and processed today, is just that difficult to render.

Team Blue believes it can successfully bring efficient path-tracing techniques to both current discrete graphics cards and, in the future, integrated graphics solutions. A bold claim, indeed, and something that’s got the tech space buzzing.

The article in question highlights and links to three new papers on path-tracing optimisations, which the Intel Graphics Research Organisation will present at SIGGRAPH, EGSR, and HPG.

The premise is simple: the three papers showcase three new ways to optimise current path-tracing techniques, in the hope of easing the load on the GPU by reducing the number of calculations required to simulate light bounces. Path tracing, as you may know, is a monster when it comes to indirect lighting calculations.

The first two papers, presented at the EGSR and HPG conferences, tackle how to deal more efficiently with ‘acceleration structures used in ray tracing to handle dynamic objects and complex geometry.’

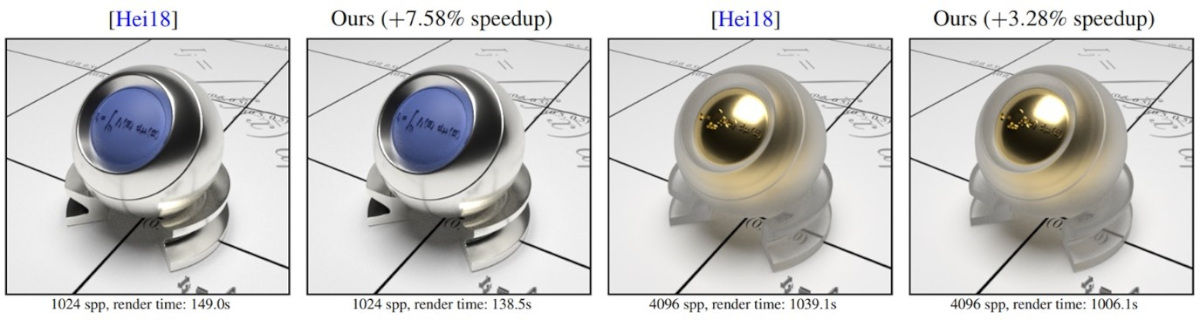

The first focuses on a new method of computing reflections on a GGX microfacet surface. GGX is, as Intel puts it, “the de facto standard material model in the game, animation, and VFX industry.” The method reduces the material to a hemispherical mirror, which is much easier to simulate.
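
For context, a microfacet model such as GGX treats a rough surface as a collection of tiny mirror-like facets whose orientations follow a known distribution. The sketch below is the standard textbook form of the isotropic GGX distribution, not Intel’s new method; the function names and the roughness value `alpha = 0.5` are illustrative assumptions. It also checks numerically that the distribution is normalised, meaning the facets’ projected area adds up to the area of the surface itself.

```python
import math


def ggx_ndf(cos_theta_h: float, alpha: float) -> float:
    """Isotropic GGX (Trowbridge-Reitz) normal distribution D(h).

    cos_theta_h: cosine of the angle between the facet normal and the
                 surface normal.
    alpha:       roughness parameter (smaller means shinier).
    """
    if cos_theta_h <= 0.0:
        return 0.0  # facets facing away from the surface contribute nothing
    a2 = alpha * alpha
    denom = cos_theta_h * cos_theta_h * (a2 - 1.0) + 1.0
    return a2 / (math.pi * denom * denom)


def projected_area_integral(alpha: float, steps: int = 20000) -> float:
    """Midpoint-rule integral of D(h) * cos(theta) over the hemisphere.

    For a correctly normalised distribution this should come out close to 1.
    """
    d_theta = (math.pi / 2.0) / steps
    total = 0.0
    for i in range(steps):
        theta = (i + 0.5) * d_theta
        c = math.cos(theta)
        total += ggx_ndf(c, alpha) * c * math.sin(theta) * d_theta
    return 2.0 * math.pi * total  # the azimuthal integral contributes 2*pi


if __name__ == "__main__":
    print(projected_area_integral(alpha=0.5))
```

Lower `alpha` values concentrate the facets around the surface normal, which is what gives a polished, mirror-like look.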

The second paper, meanwhile, details a faster and more stable way of rendering ‘glinty’ surfaces in a 3D environment. Intel says this type of material is underused because of its high computational cost. The main takeaway is that by working out how many glints fall within each pixel of the virtual camera, the GPU only needs to render the amount of glitter that is actually visible to the eye, which cuts the processing required.
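
To see why counting glints per pixel can be cheaper than simulating every flake, consider the toy sketch below. It is an illustration only and not the algorithm from Intel’s paper: it assumes each flake inside a pixel reflects towards the camera independently with probability `p_reflect`, and the function names, flake count, and probability are all hypothetical. The first function tests every flake one by one, while the second draws the count in a single step using a normal approximation to the binomial distribution.

```python
import math
import random


def count_glints_brute_force(n_flakes: int, p_reflect: float,
                             rng: random.Random) -> int:
    """Test every flake individually; cost grows with n_flakes."""
    return sum(1 for _ in range(n_flakes) if rng.random() < p_reflect)


def count_glints_sampled(n_flakes: int, p_reflect: float,
                         rng: random.Random) -> int:
    """Draw the visible-glint count directly, in constant time.

    Uses a normal approximation to Binomial(n_flakes, p_reflect), which is
    reasonable only when both n*p and n*(1-p) are large.
    """
    mean = n_flakes * p_reflect
    std = math.sqrt(n_flakes * p_reflect * (1.0 - p_reflect))
    count = round(rng.gauss(mean, std))
    return min(max(count, 0), n_flakes)  # clamp to the valid range


if __name__ == "__main__":
    rng = random.Random(2023)
    # Hypothetical pixel covering 10,000 flakes, 2% of which glint.
    print(count_glints_brute_force(10_000, 0.02, rng))
    print(count_glints_sampled(10_000, 0.02, rng))
```

Both functions produce counts centred on the same expected value, `n_flakes * p_reflect`, but only the first has to touch every flake.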

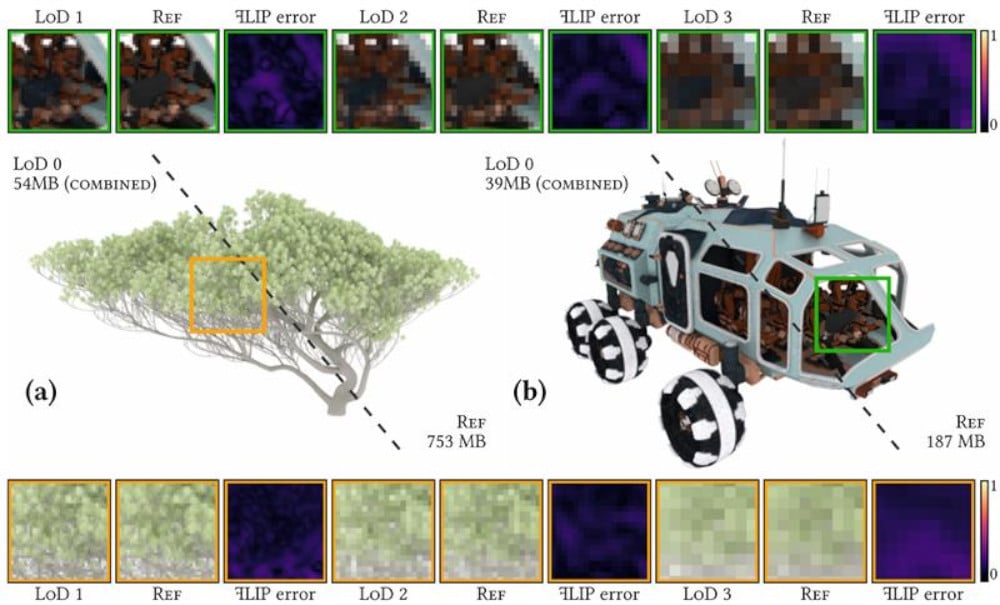

Finally, the last paper, presented at SIGGRAPH 2023, brings neural rendering and real-time path tracing together in one unified framework. Intel goes on to say: “With our neural level of detail representations, we achieve compression rates of 70–95 per cent compared to classic source representations, while also improving quality over previous work, at interactive to real-time framerates with increasing distance at which the LoD technique is applied.”

By combining these three core aspects, Intel hopes to make ray tracing and photo-realistic rendering possible on more affordable GPUs outside of the Nvidia stratosphere, as well as taking a step toward real-time performance on integrated GPUs in the near future. In essence, the argument is that ray-tracing performance can only be improved enough to reach millions of GPUs through smarter scene setup rather than a brute-force approach.