The fourth quarter of 2022 will capture the imagination of any PC enthusiast. AMD, Intel and Nvidia are all bringing new GPUs to the scene, we have cutting-edge CPU launches, and when you throw in a raft of new chipsets, DDR5 memory and some high-speed storage, it’s a holiday season quite like no other.

A cost-of-living crisis may understandably put a dampener on new-build plans, yet if you’re contemplating taking the plunge, or just happen to enjoy watching it all unfold, it is fascinating to see industry heavyweights bring out the big guns. After a couple of subdued years and disruptive semiconductor shortages, gloves are officially off and the best graphics cards are back.

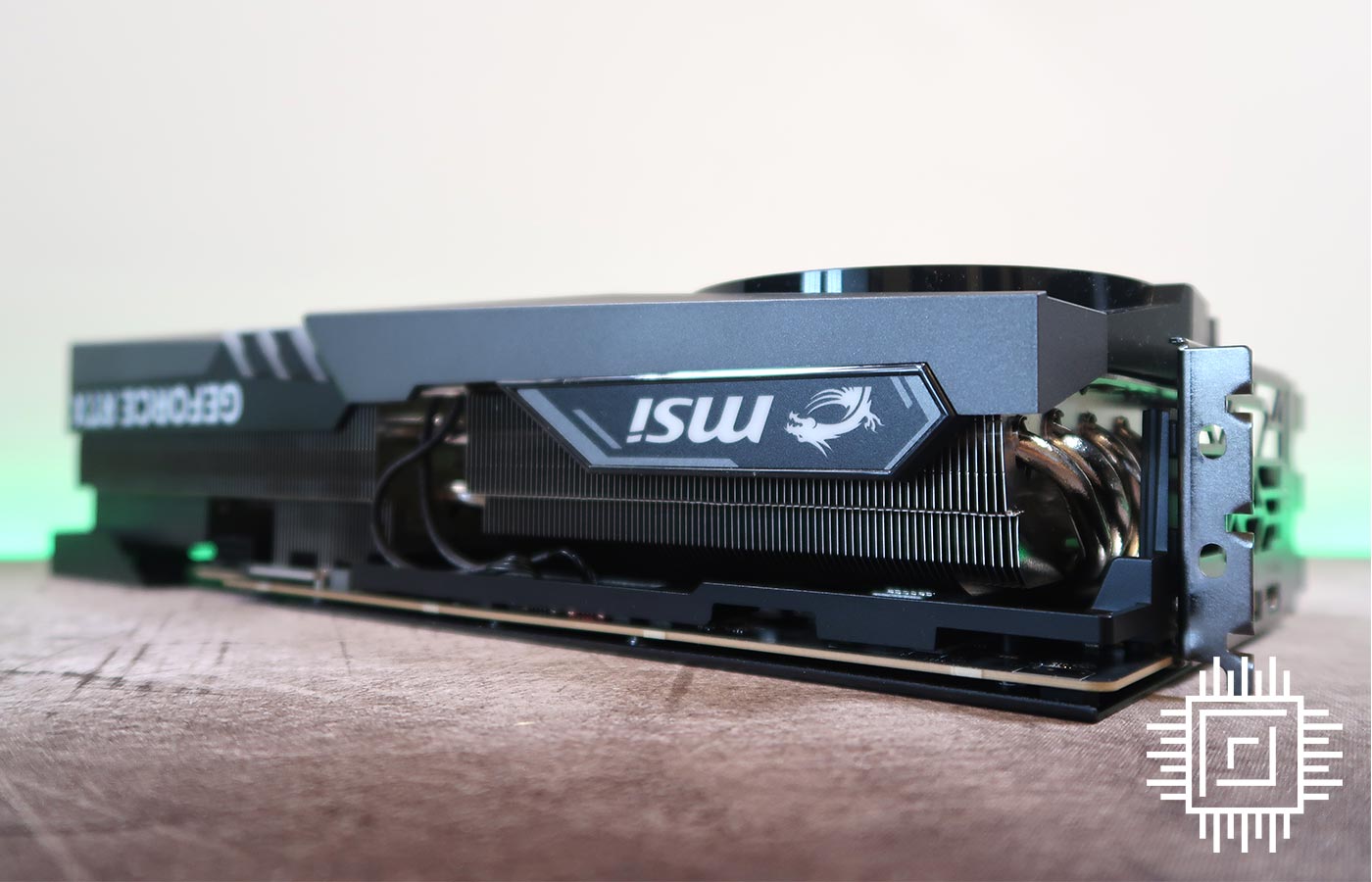

MSI GeForce RTX 4090 Gaming X Trio

£1,999

Pros

- Makes 4K120 a reality

- DLSS 3.0 has promise

- Keeps quiet at all times

- Single power connector

- 24GB memory

Cons

- 480W power limit

- Massive footprint


Few have delivered a punch as fierce as Nvidia, whose GeForce RTX 4090 surprised onlookers by not just beating up on existing solutions, but by KO’ing benchmark records with an Ada Lovelace architecture designed to ring the bell on any other GPU that dares step into the ring.

Beastly in more ways than one, Nvidia’s top-end part carries a preposterous 76bn transistors, and resulting partner cards mirror that sense of magnitude. We’ve all seen the memes, yet with room in the chassis, there’s merit to a card that’s big, mega-fast, and also supremely cool and quiet. It is no wonder boards have sold out immediately.

When the furore inevitably dies down – it could be weeks or months before stock levels settle – enthusiasts will have a long list of partner cards to choose from. MSI is vying for attention with a selection of models spread across the established Gaming and Suprim product lines.

The former has historically been well received, but there’s no escaping the fact that Suprim offers another level of refinement and superior aesthetics. That being the case, we had hoped MSI would go ultra-aggressive with Gaming Series pricing, but the manufacturer evidently sees no reason to do so.

Our review card, an RTX 4090 Gaming X Trio, carries a UK MSRP of £1,999, which is a little too close to the £2,080 Suprim X for comfort. Whichever route you take, a near-20 per cent premium over the Founders Edition is hard to overlook, and that gap is only widening as retailers attach further premiums to what is sought-after stock.

Don’t expect a bargain – gamers will do well to find such a card the right side of £2,000 before year end – but if you do manage to nab one, we suspect you’ll be both pleased and taken aback by the sheer scale of it. MSI’s latest incarnation of Gaming X Trio measures 337mm x 140mm x 77mm, making it the largest RTX 4090 to pass through the Club386 labs thus far. The four-slot monster is also the heaviest, weighing almost 2.2kg; more for your money, we suppose.

Despite the heft, Gaming X Trio is surprisingly conservative in other areas. A factory boost clock of 2,595MHz represents a mere 75MHz improvement over stock frequencies, the large 24GB pool of GDDR6X memory operates at a default 21Gbps, and while many custom boards adopt vapour-chamber cooling, MSI opts for a good ol’ fashioned copper contact plate and eight supporting heatpipes. Suprim, in case you wondered, has a vapour chamber and 10 heatpipes.

There are perceived limitations in the power department, too. Power phases are reduced from 26+4 on Suprim to 18+4 on Gaming models, and if you don’t yet have a shiny, new ATX 3.0 power supply, note that the bundled 16-pin 12VHPWR power adapter is downgraded from four-way to three. Three eight-pin PCIe connectors align with Nvidia’s minimum requirement, while a fourth, taking auxiliary power from 450W to 600W, serves to suit extreme overclockers.

Do such barriers detract from Gaming X Trio? Probably not. Real-world temperatures, as we’ll demonstrate a little later, are suitably cool, and the card’s BIOS forces a hard limit of 480W when overclocking, meaning a fourth power cable would do little other than add clutter.

Display outputs adhere to Nvidia’s standard selection of three DisplayPort 1.4a and one HDMI 2.1, and whereas other partners have opted to go to all sorts of extremes, MSI keeps some semblance of sanity. The recommended power supply is a mere 850W, and RGB lighting, limited to the MSI logo and Adidas-like stripes, is relatively subdued.

A full-metal backplate and anti-bending plate sandwich the PCB to provide decent rigidity, and as expected from a Gaming X Trio design, all three fans switch off at low load. We sometimes see partners fall short with 0dB implementation – the abrupt ramp-up of fans often defeats the purpose – but MSI thankfully makes no such mistakes. Fans remain off until core temperature exceeds 60°C, and when kicking into action, they do so in smooth and composed fashion; the transition is barely noticeable.

Dual-BIOS functionality allows users to switch between Gaming and Silent cooling modes, yet we feel as though Gaming X Trio is beginning to fall behind in the aesthetic department. Those fan cables you see between fin-stacks really should be better concealed, and the support bracket included in the bundle isn’t the best. Installation is awkward, taking up six out of seven available slots in our test platform, and yet the bracket itself has a tendency to sag. We much prefer the vertical support stand provided with, you guessed it, the Suprim.

Second-class citizen though it may be, Gaming X Trio still offers frightening gaming performance, and for those who favour stable speeds and whisper-quiet acoustics over superfluous RGB, there’s plenty to like. Benchmarks soon, but first, a recap on all things Ada Lovelace.

Architecture

Those of you craving benchmark numbers can skip right ahead – they’re astonishing in many ways – but for the geeks among us, let’s begin with all that’s happening beneath RTX 40 Series’ hood.

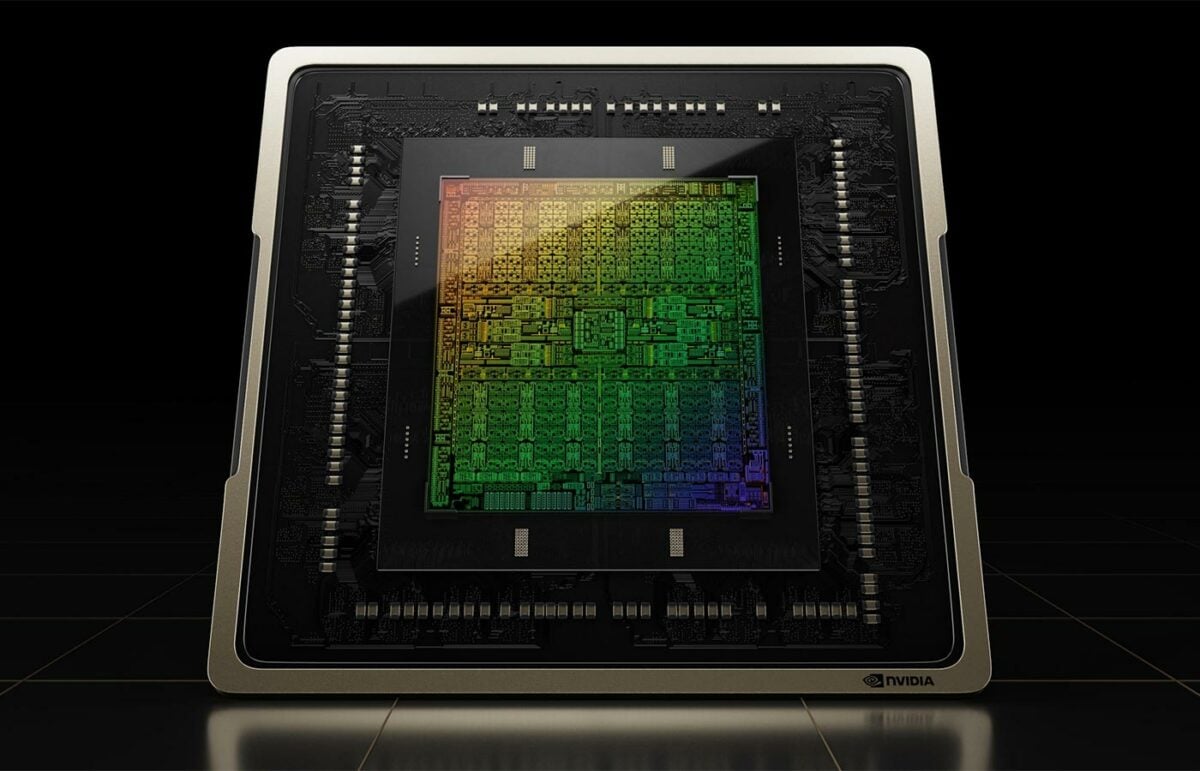

The full might of third-generation RTX architecture, codenamed Ada Lovelace, visualised as the AD102 GPU. It’s quite something, isn’t it?

What you’re looking at is one of the most complex consumer GPUs to date. Nvidia’s 608mm² die, fabricated on a TSMC 4N process, packs a scarcely believable 76.3 billion transistors. Putting that figure into perspective, the best GeForce chip of the previous 8nm generation, GA102, measures 628mm² yet accommodates a puny 28.3 billion transistors. Nvidia has effectively jumped two nodes in one fell swoop, as Samsung’s 8nm process is more akin to 10nm from other foundry manufacturers.
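To put the density jump into numbers, here is a minimal Python sketch. It uses the transistor counts quoted above and the more precise die sizes from the spec table further down; the dictionary layout and function name are purely illustrative.

```python
# Figures quoted in the review for each flagship die.
DIES = {
    "AD102 (TSMC 4N)": {"transistors_bn": 76.3, "area_mm2": 608.5},
    "GA102 (Samsung 8nm)": {"transistors_bn": 28.3, "area_mm2": 628.4},
}

def density_mtr_per_mm2(transistors_bn, area_mm2):
    """Return millions of transistors per square millimetre."""
    return transistors_bn * 1000 / area_mm2

densities = {name: density_mtr_per_mm2(**spec) for name, spec in DIES.items()}
for name, value in densities.items():
    print(f"{name}: {value:.1f} MTr/mm2")

# Generational increase in transistor count, as a percentage.
increase_pct = (76.3 / 28.3 - 1) * 100
print(f"Transistor count increase: {increase_pct:.0f}%")
```

By this rough measure AD102 packs close to three times as many transistors into each square millimetre as GA102.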

We’ve gone from flyweight to heavyweight in the space of a generation, and a 170 per cent increase in transistor count naturally bodes well for specs. A full-fat die is home to 12 graphics processing clusters (GPCs), each sporting a dozen streaming multiprocessors (SMs), six texture processing clusters (TPCs) and 16 render output units (ROPs). Getting into the nitty-gritty of the block diagram, each SM carries 128 CUDA cores, four Tensor cores, one RT core and four texture units.
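For those who like to check the maths, the full-die totals follow directly from the per-GPC and per-SM counts above. This is a small Python sketch of that multiplication; the variable names are our own.

```python
# Full AD102 configuration as described in the block diagram.
GPCS = 12
SMS_PER_GPC = 12
ROPS_PER_GPC = 16
PER_SM = {"CUDA cores": 128, "Tensor cores": 4, "RT cores": 1, "Texture units": 4}

total_sms = GPCS * SMS_PER_GPC

# Every per-SM resource scales with the number of SMs on the die.
full_die = {unit: count * total_sms for unit, count in PER_SM.items()}
# ROPs are attached per GPC rather than per SM.
full_die["ROPs"] = GPCS * ROPS_PER_GPC

print(f"SMs: {total_sms}")
for unit, total in full_die.items():
    print(f"{unit}: {total:,}")
```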

All told, Ada presents a staggering 18,432 CUDA cores in truest form, representing a greater than 70 per cent hike over last-gen champion, RTX 3090 Ti. Plenty of promise, yet to the frustration of performance purists, Nvidia chooses not to unleash the full might of Ada in this first wave. The initial trio of GPUs shapes up as follows:

| GeForce | RTX 4090 | RTX 4080 16GB | RTX 3090 Ti | RTX 3080 Ti | RTX 3080 12GB |
|---|---|---|---|---|---|
| Launch date | Oct 2022 | Nov 2022 | Mar 2022 | Jun 2021 | Jan 2022 |
| Codename | AD102 | AD103 | GA102 | GA102 | GA102 |
| Architecture | Ada Lovelace | Ada Lovelace | Ampere | Ampere | Ampere |
| Process (nm) | 4 | 4 | 8 | 8 | 8 |
| Transistors (bn) | 76.3 | 45.9 | 28.3 | 28.3 | 28.3 |
| Die size (mm²) | 608.5 | 378.6 | 628.4 | 628.4 | 628.4 |
| SMs | 128 of 144 | 76 of 80 | 84 of 84 | 80 of 84 | 70 of 84 |
| CUDA cores | 16,384 | 9,728 | 10,752 | 10,240 | 8,960 |
| Boost clock (MHz) | 2,520 | 2,505 | 1,860 | 1,665 | 1,710 |
| Peak FP32 TFLOPS | 82.6 | 48.7 | 40.0 | 34.1 | 30.6 |
| RT cores | 128 | 76 | 84 | 80 | 70 |
| RT TFLOPS | 191.0 | 112.7 | 78.1 | 66.6 | 59.9 |
| Tensor cores | 512 | 304 | 336 | 320 | 280 |
| ROPs | 176 | 112 | 112 | 112 | 96 |
| Texture units | 512 | 304 | 336 | 320 | 280 |
| Memory size (GB) | 24 | 16 | 24 | 12 | 12 |
| Memory type | GDDR6X | GDDR6X | GDDR6X | GDDR6X | GDDR6X |
| Memory bus (bits) | 384 | 256 | 384 | 384 | 384 |
| Memory clock (Gbps) | 21 | 22.4 | 21 | 19 | 19 |
| Bandwidth (GB/s) | 1,008 | 717 | 1,008 | 912 | 912 |
| L1 cache (MB) | 16 | 9.5 | 10.5 | 10 | 8.8 |
| L2 cache (MB) | 72 | 64 | 6 | 6 | 6 |
| Power (watts) | 450 | 320 | 450 | 350 | 350 |
| Launch MSRP ($) | 1,599 | 1,199 | 1,999 | 1,199 | 799 |

Leaving scope for a fabled RTX 4090 Ti, inaugural RTX 4090 disables a single GPC, enabling 128 of 144 possible SMs. Resulting figures of 16,384 CUDA cores, 128 RT cores and 512 Tensor cores remain mighty by comparison, and frequency headroom on the 4nm process is hugely impressive, with Nvidia specifying a 2.5GHz boost clock for the flagship product. It won’t have escaped your attention that peak teraflops have more than doubled, from 40 on RTX 3090 Ti to 82.6 on RTX 4090.
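The peak FP32 figures in the table can be reproduced from core count and boost clock alone. The Python sketch below assumes the usual convention of two floating-point operations per CUDA core per clock (one fused multiply-add); the function name is illustrative.

```python
def peak_fp32_tflops(cuda_cores, boost_mhz):
    """Theoretical FP32 throughput, assuming 2 FLOPs (one FMA) per core per clock."""
    return cuda_cores * 2 * boost_mhz * 1e6 / 1e12

# (CUDA cores, boost clock in MHz) taken from the spec table.
CARDS = {
    "RTX 4090": (16384, 2520),
    "RTX 4080 16GB": (9728, 2505),
    "RTX 3090 Ti": (10752, 1860),
    "RTX 3080 Ti": (10240, 1665),
    "RTX 3080 12GB": (8960, 1710),
}

tflops = {name: peak_fp32_tflops(*spec) for name, spec in CARDS.items()}
for name, value in tflops.items():
    print(f"{name}: {value:.1f} TFLOPS")
```

These are theoretical peaks at the rated boost clock, so they say nothing about sustained in-game throughput.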

The thought of a $1,999 RTX 4090 Ti waiting in the wings will rankle die-hard gamers wanting the absolute best on day one; however, if you happen to be a PCMR loyalist, take pleasure in the fact that Xbox Series X produces just 12.1 teraflops. PlayStation 5, you ask? Pffft, a mere 10.3 teraflops.

Front-end RTX 40 Series specifications are eye-opening, but the back end is noticeably less revolutionary, where a familiar 24GB of GDDR6X memory operates at 21Gbps, providing 1,008GB/s of bandwidth. The needle hasn’t moved – Nvidia has opted against Micron’s quicker 24Gbps chips – however the load on memory has softened with a significant bump in on-chip cache.
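Memory bandwidth is simply bus width multiplied by per-pin data rate. As a quick Python sketch using the table's figures (the helper name is our own):

```python
def bandwidth_gb_per_s(bus_bits, data_rate_gbps):
    """Bandwidth in GB/s: bus width in bytes times the per-pin data rate in Gbps."""
    return bus_bits / 8 * data_rate_gbps

# (memory bus in bits, memory clock in Gbps) from the spec table.
MEMORY = {
    "RTX 4090": (384, 21),
    "RTX 4080 16GB": (256, 22.4),
    "RTX 3090 Ti": (384, 21),
    "RTX 3080 Ti": (384, 19),
}

bandwidth = {name: bandwidth_gb_per_s(*spec) for name, spec in MEMORY.items()}
for name, value in bandwidth.items():
    print(f"{name}: {value:,.0f} GB/s")
```

The same 384-bit bus at the same 21Gbps is why RTX 4090 and RTX 3090 Ti land on an identical 1,008GB/s.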

RTX 4090 carries 16MB of L1 and 72MB of L2. We’ve previously seen AMD attach as much as 128MB Infinity Cache on Radeon graphics cards, and though Nvidia doesn’t detail data rates or clock cycles, a greater than 5x increase in cache between generations reduces the need to routinely spool out to memory, raising performance and reducing latency.

Heard rumours of RTX 40 Series requiring a nuclear reactor to function? Such reports are wide of the mark. RTX 4090 maintains the same 450W TGP as RTX 3090 Ti, while second- and third-tier Ada Lovelace solutions scale down as low as 285W. 450W is still significant, and those who’ve been around the GPU block will remember that 250W was considered the upper limit not so long ago. Unlike RTX 3090 Ti, however, RTX 4090’s sheer grunt is such that performance-per-watt is much improved; we’re promised double the performance in the same power budget as last generation.
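As a rough sanity check on that promise, the Python sketch below divides the table's peak FP32 throughput by rated board power. It is a paper exercise only: theoretical teraflops are a stand-in for performance here, and real games will not scale so neatly.

```python
# (peak FP32 TFLOPS, rated power in watts) from the spec table.
SPECS = {
    "RTX 4090": (82.6, 450),
    "RTX 3090 Ti": (40.0, 450),
}

# Theoretical throughput per watt of rated board power.
tflops_per_watt = {name: tflops / watts for name, (tflops, watts) in SPECS.items()}
ratio = tflops_per_watt["RTX 4090"] / tflops_per_watt["RTX 3090 Ti"]

for name, value in tflops_per_watt.items():
    print(f"{name}: {value:.3f} TFLOPS/W")
print(f"Generational gain on paper: {ratio:.2f}x")
```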

Looking across to other Ada Lovelace GPUs, RTX 4080 16GB will follow in November, while the controversial RTX 4080 12GB has been canned by Nvidia following criticism from gamers and reviewers alike. The third-rung 40 Series card, built on AD104, would have reduced core count from 9,728 to 7,680, but whether or not that product is now released under a new name – RTX 4070, anyone? – or banished entirely remains to be seen.

Pricing will ultimately continue to grate on PC users who feel squeezed out of high-performance gaming, and though there’s little chance of mining-fuelled demand this time around, market conditions and rampant inflation aren’t conducive to high-end GPU bargains. While we’re hopeful Intel Arc and AMD RDNA3 will restore competition in the mid-range, we don’t expect any serious challengers to RTX 4090.

Outside of core specifications, it is worth knowing display outputs remain tied to HDMI 2.1 and DisplayPort 1.4a – DisplayPort 2.0 hasn’t made the cut – and PCIe Gen 4 continues as the preferred interface. There’s no urgency to switch to Gen 5, says Nvidia, as even RTX 4090 can’t saturate the older standard. Finally, NVLink is conspicuous by its absence; SLI is nowhere to be seen on any RTX 40 Series product announced thus far, signalling multi-GPU setups are well and truly dead.

Ada Optimisations

While a shift to a smaller node affords more transistor firepower, such a move typically precludes sweeping changes to architecture. Optimisations and resourcefulness are the order of the day, and the huge computational demands of raytracing are such that raw horsepower derived from a near-3x increase in transistor budget isn’t enough; something else is needed, and Ada Lovelace brings a few neat tricks to the table.

Nvidia often refers to raytracing as a relatively new technology, stressing that good ol’ fashioned rasterisation has been through wave after wave of optimisation, and such refinement is actively being engineered for RT and Tensor cores. There’s plenty of opportunity where low-hanging fruit is yet to be picked.

Shader Execution Reordering

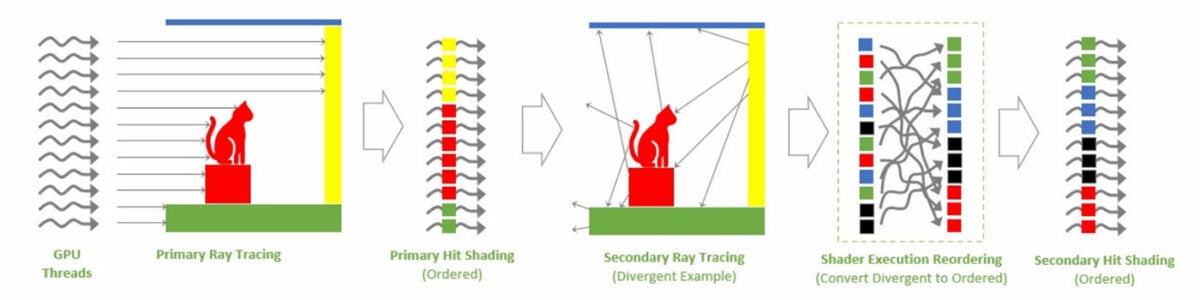

Shaders have been running efficiently for years, whereby one instruction is executed in parallel across multiple threads. You may know it as SIMT.

Raytracing, however, throws a spanner in those smooth works, as while pixels in a rasterised triangle lend themselves to running concurrently, keeping all lanes occupied, secondary rays are divergent by nature and the scattergun approach of hitting different areas of a scene leads to massive inefficiency through idle lanes.

Ada’s fix, dubbed Shader Execution Reordering (SER), is a new stage in the raytracing pipeline tasked with scanning individual rays on the fly and grouping them together. The result, going by Nvidia’s internal numbers, is a 2x improvement in raytracing performance in scenes with high levels of divergence.
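To illustrate why grouping matters, here is a toy Python model of divergence. It is emphatically not Nvidia’s implementation: the shader IDs, the 32-wide warp and the cost model (a warp needs one pass per distinct shader among its rays) are all simplifying assumptions made for the sake of the example.

```python
import random

WARP_SIZE = 32  # assumed number of SIMT lanes executing in lockstep

def divergent_passes(hit_shader_ids, warp_size=WARP_SIZE):
    """Count serialised passes: each warp runs once per distinct shader it contains."""
    passes = 0
    for start in range(0, len(hit_shader_ids), warp_size):
        warp = hit_shader_ids[start:start + warp_size]
        passes += len(set(warp))
    return passes

# 1,024 secondary rays, each landing on one of eight hypothetical hit shaders.
rng = random.Random(0)
rays = [rng.randrange(8) for _ in range(1024)]

unsorted_passes = divergent_passes(rays)
# Reordering: group rays that invoke the same shader before forming warps.
reordered_passes = divergent_passes(sorted(rays))

print(f"Passes without reordering: {unsorted_passes}")
print(f"Passes with reordering:    {reordered_passes}")
```

With rays scattered at random, almost every warp ends up touching most of the eight shaders; once sorted, only the few warps that straddle a boundary between shader groups need more than one pass.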

Nvidia portentously claims SER is “as big an innovation as out-of-order execution was for CPUs.” A bold statement, and there is a proviso in that Shader Execution Reordering is an extension of Microsoft’s DXR APIs, meaning it is up to developers to implement and optimise SER in games.

There’s no harm in having the tools, mind, and Nvidia is quickly discovering that what works for rasterisation can evidently be made to work for RT.

Upgraded RT Cores

In the rasterisation world, geometry bottlenecks are alleviated through mesh shaders. In a similar vein, displaced micro-meshes aim to echo such improvements in raytracing.

With Ampere, the Bounding Volume Hierarchy (BVH) was forced to contain every single triangle in the scene, ready for the RT core to sample. Ada, in contrast, can evaluate meshes within the RT core, identifying a base triangle prior to tessellation in an effort to drastically reduce storage requirements.

A smaller, compressed BVH has the potential to enable greater detail in raytraced scenes with less impact on memory. Having to insert only the base triangles, BVH build times are improved by an order of magnitude and data sizes shrink significantly, helping reduce CPU overhead.

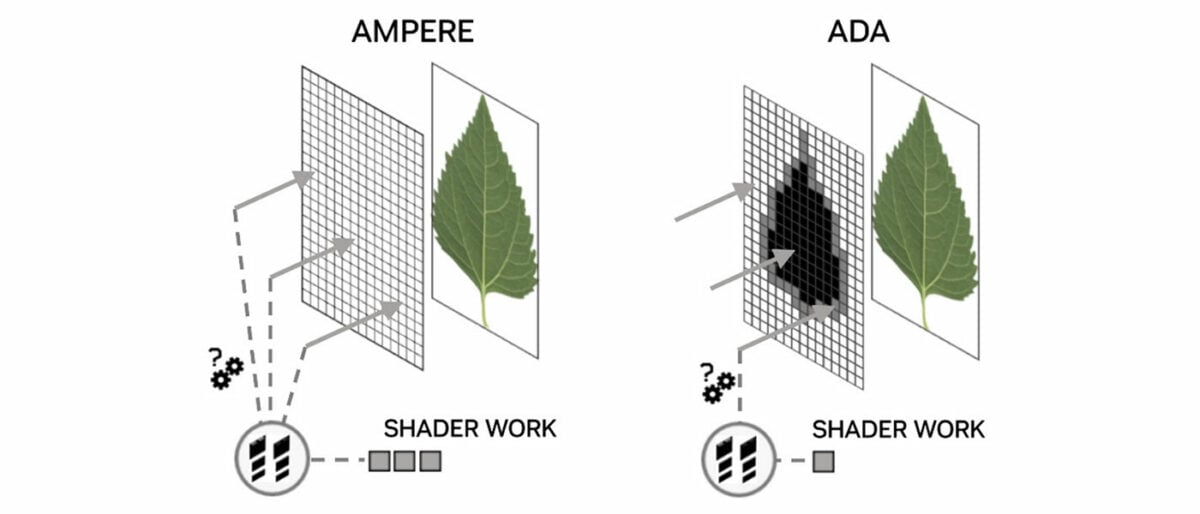

The sheer complexity of raytracing is such that eliminating unnecessary shader work has never been more important. To that end, an Opacity Micromap Engine has also been added to Ada’s RT core to reduce the amount of information going back and forth to shaders.

In the common leaf example, developers place the texture of foliage within a rectangle and use opaque polygons to determine the leaf’s position. A way to construct entire trees efficiently, yet with Ampere the RT core lacked this basic ability, with all rays passed back to the shader to determine which areas are opaque, transparent, or unknown. Ada’s Opacity Micromap Engine can identify all the opaque and transparent polygons without invoking any shader code, resulting in 2x faster alpha traversal performance in certain applications.

These two new hardware units make the third-generation RT core more capable than ever before – TFLOPS per RT core has risen by ~65 per cent between generations – yet all this isn’t enough to back up Nvidia’s claims of Ada Lovelace delivering up to 4x the performance of the previous generation. For that, Team Green continues to rely on AI.

DLSS 3

Since 2019, Deep Learning Super Sampling has played a vital role in GeForce GPU development. Nvidia’s commitment to the tech is best expressed by Bryan Catanzaro, VP of applied deep learning research, who states in no uncertain terms that “the era of brute-force graphics rendering is over.”

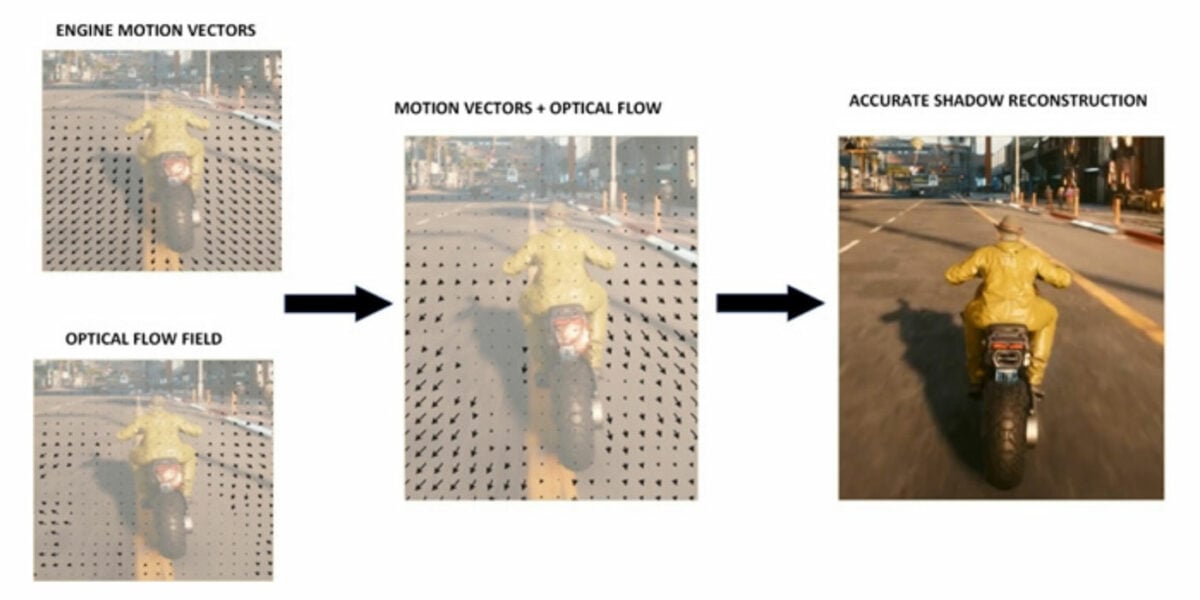

Third-generation DLSS, deemed a “total revolution in neural graphics,” expands upon DLSS Super Resolution’s AI-trained upscaling by employing optical flow estimation to generate entire frames. Through a combination of DLSS Super Resolution and DLSS Frame Generation, Nvidia reckons DLSS 3 can now reconstruct seven-eighths of a game’s total displayed pixels, to dramatically increase performance and smoothness.
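The seven-eighths figure is easy to reproduce. The Python sketch below assumes Super Resolution is upscaling a 1920×1080 render to 4K UHD (Performance mode) and that Frame Generation synthesises every other displayed frame; both are our assumptions about the scenario behind Nvidia’s number.

```python
from fractions import Fraction

OUTPUT = (3840, 2160)  # 4K UHD display resolution
RENDER = (1920, 1080)  # assumed internal render resolution (Performance mode)

# Share of each rendered frame's pixels that come from the shader pipeline.
rendered_pixel_share = Fraction(RENDER[0] * RENDER[1], OUTPUT[0] * OUTPUT[1])
# Share of displayed frames that are rendered rather than synthesised.
rendered_frame_share = Fraction(1, 2)

shader_rendered = rendered_pixel_share * rendered_frame_share
reconstructed = 1 - shader_rendered

print(f"Traditionally rendered: {shader_rendered}")
print(f"Reconstructed by DLSS 3: {reconstructed}")
```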

Generating so much on-screen content without invoking the shader pipeline would have been unthinkable just a few years ago. It is a remarkable change of direction, but those magic extra frames aren’t conjured from thin air. DLSS 3 takes four inputs – two sequential in-game frames, an optical flow field and engine data such as motion vectors and depth buffers – to create and insert synthesised frames between working frames.

In order to capture the required information, Ada’s Optical Flow Accelerator is capable of up to 300 TeraOPS (TOPS) of optical-flow work, and that 2x speed increase over Ampere is viewed as vital in generating accurate frames without artifacts.

The real-world benefit of AI-generated frames is most keenly felt in games that are CPU bound, where DLSS Super Resolution can typically do little to help. Nvidia’s preferred example is Microsoft Flight Simulator, whose vast draw distances inevitably force a CPU bottleneck. Internal data suggests DLSS 3 Frame Generation can boost Flight Sim performance by as much as 2x.

Do note, also, that Frame Generation and Super Resolution can be implemented independently by developers. In an ideal world, gamers will have the choice of turning the former on/off, while being able to adjust the latter via a choice of quality settings.

More demanding AI workloads naturally warrant faster Tensor Cores, and Ada obliges by inheriting the FP8 Transformer Engine from HPC-optimised Hopper. Peak FP16 Tensor teraflops performance is already doubled from 320 on Ampere to 661 on Ada, but with added support for FP8, RTX 4090 can deliver a theoretical 1.3 petaflops of Tensor processing.

Plenty of bombast, yet won’t such processing result in an unwanted hike in latency? Such concerns are genuine; Nvidia has taken the decision to make Reflex a mandatory requirement for DLSS 3 implementation.

Designed to bypass the traditional render queue, Reflex synchronises CPU and GPU workloads for optimal responsiveness and up to a 2x reduction in latency. Ada optimisations and in particular Reflex are key in keeping DLSS 3 latency down to DLSS 2 levels, but as with so much that is DLSS related, success is predicated on the assumption developers will jump through the relevant hoops. In this case, Reflex markers must be added to code, allowing the game engine to feed back the data required to coordinate both CPU and GPU.

Given the often-sketchy state of PC game development, gamers are right to be cautious when the onus is placed in the hands of devs, and there is another caveat in that DLSS tech is becoming increasingly fragmented between generations.

DLSS 3 now represents a superset of three core technologies: Frame Generation (exclusive to RTX 40 Series), Super Resolution (RTX 20/30/40 Series), and Reflex (any GeForce GPU since the 900 Series). Nvidia has no immediate plans to backport Frame Generation to slower Ampere cards.

8th Generation NVENC

Last but not least, Ada Lovelace is wise not to overlook the soaring popularity of game streaming, both during and after the pandemic.

Building upon Ampere’s support for AV1 decoding, Ada adds hardware AV1 encoding, which Nvidia rates as 40 per cent more efficient than H.264. This, says Nvidia, allows streamers to bump their stream resolution to 1440p while maintaining the same bitrate.

AV1 support also bodes well for professional apps – DaVinci Resolve is among the first to announce compatibility – and Nvidia extends this potential on high-end RTX 40 Series GPUs by ensuring all three launch models include dual 8th Gen NVENC encoders (enabling 8K60 capture and 2x quicker exports) as well as a 5th Gen NVDEC decoder as standard.

Performance

An entirely new architecture needs to be examined from the ground up. All of our comparison GPUs are tested from scratch on our enduring Ryzen 9 5950X test platform. The Asus ROG Crosshair VIII Formula motherboard has been updated to the latest 4201 BIOS, Windows 11 is up to version 22H2, and we’ve used the most recent Nvidia and AMD drivers at the time of writing.

Perusing log files reveals the MSI card settling at around 2.75GHz during real-world use. A smidgen slower than rival partner cards, but a few megahertz here or there don’t change the underlying fact: RTX 4090, in any shape or form, is insanely quick.

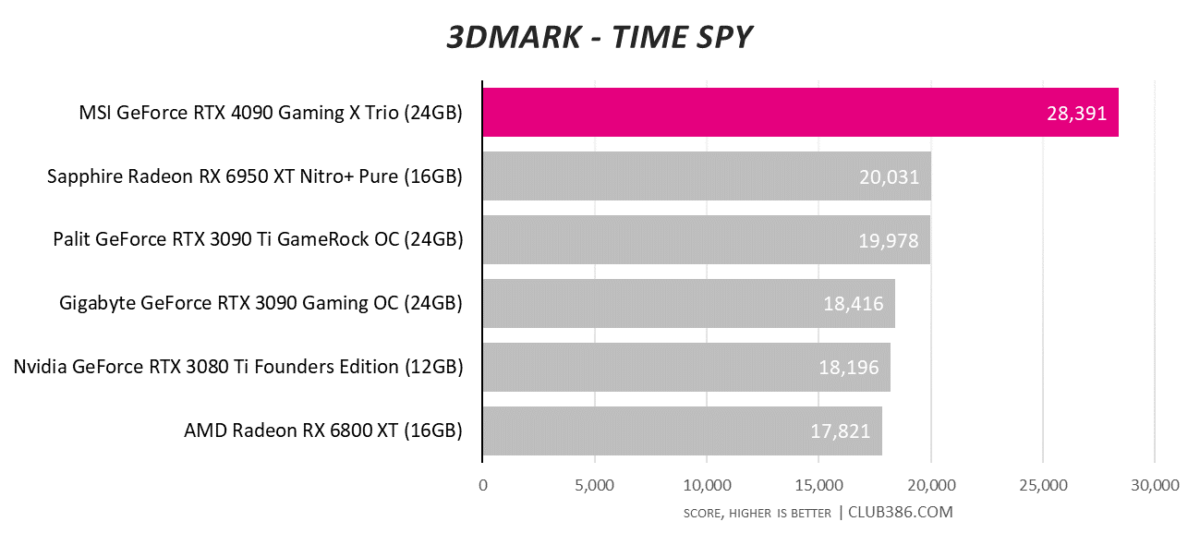

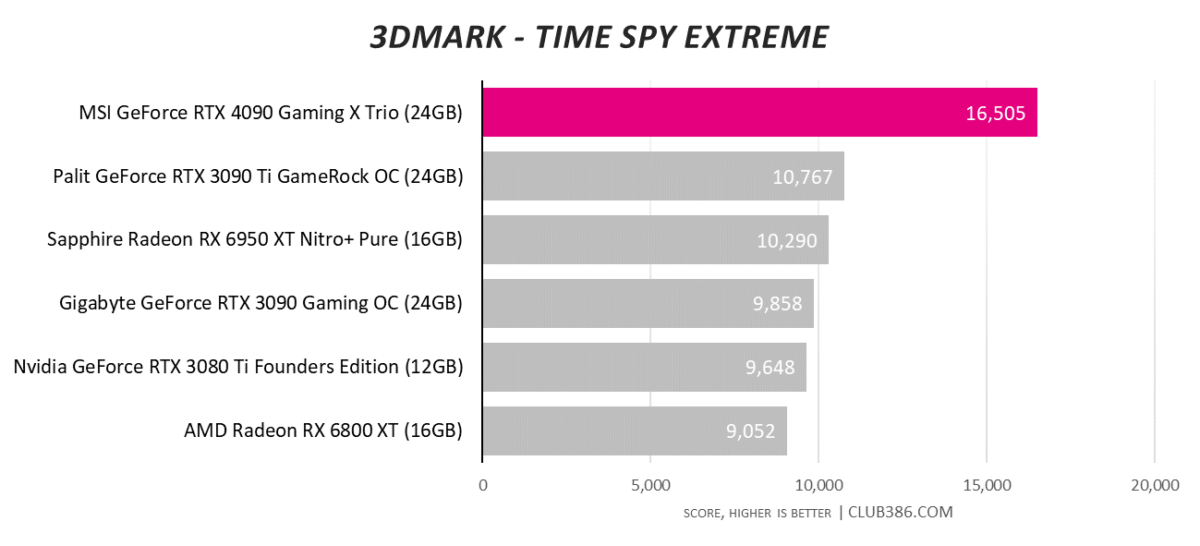

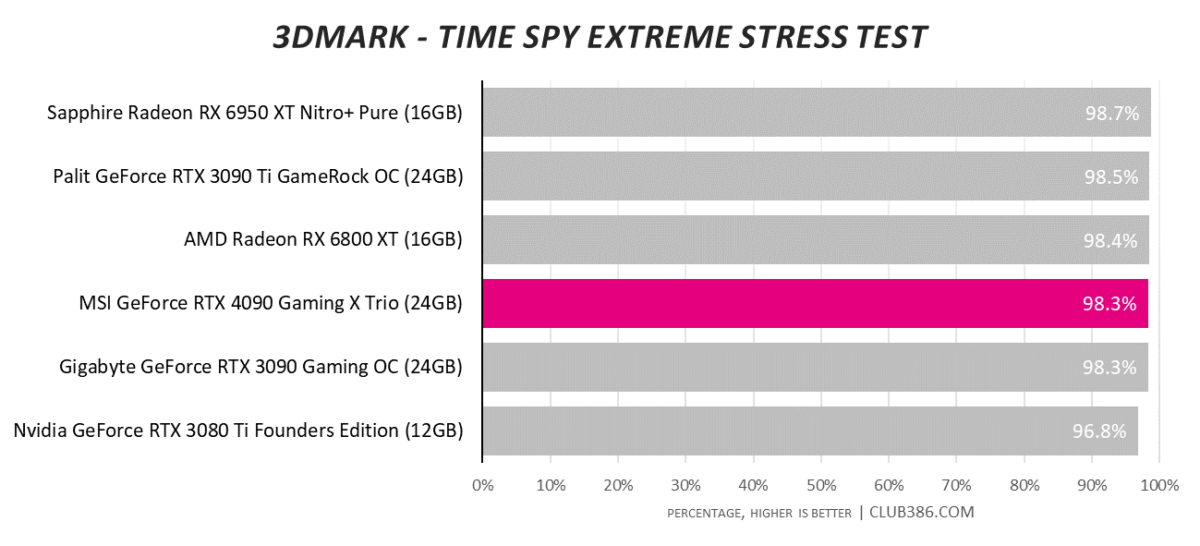

Nothing wrong with MSI’s gargantuan cooler, either, as Gaming X Trio maintains stable frequency throughout a torturous Time Spy Extreme Stress Test.

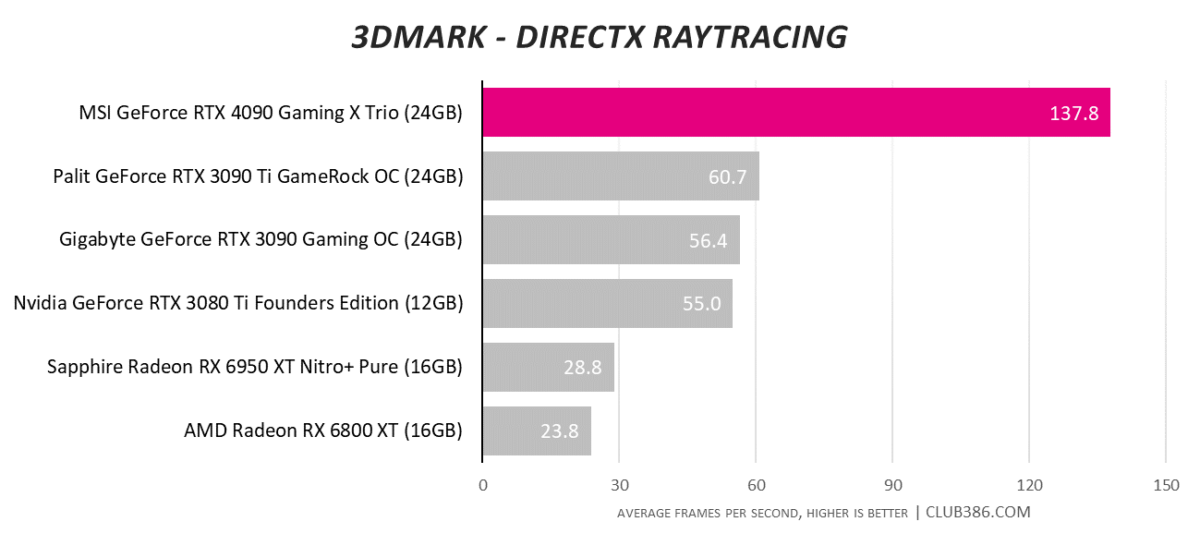

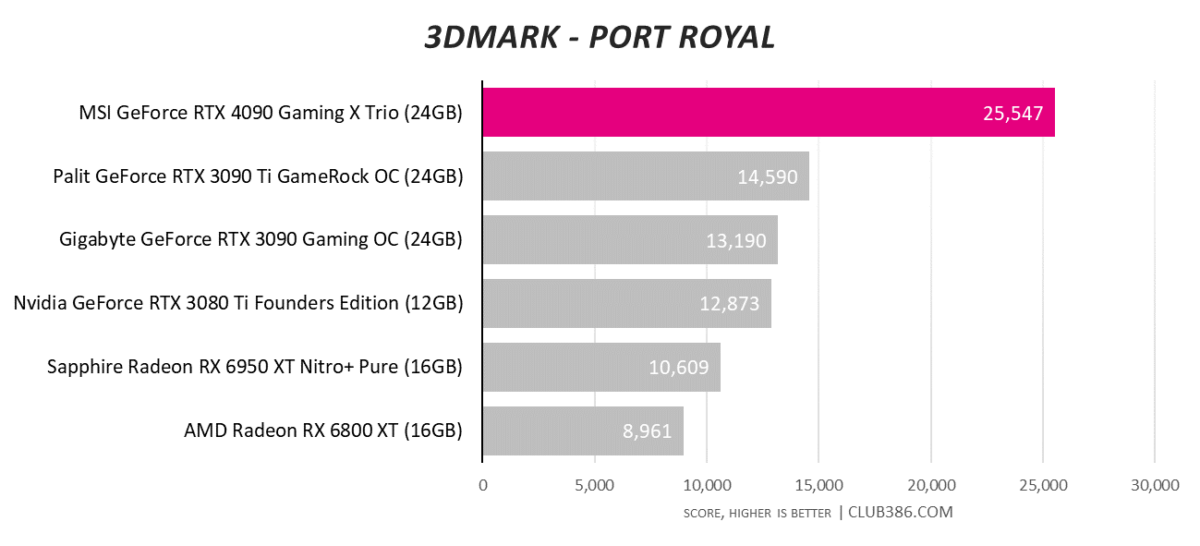

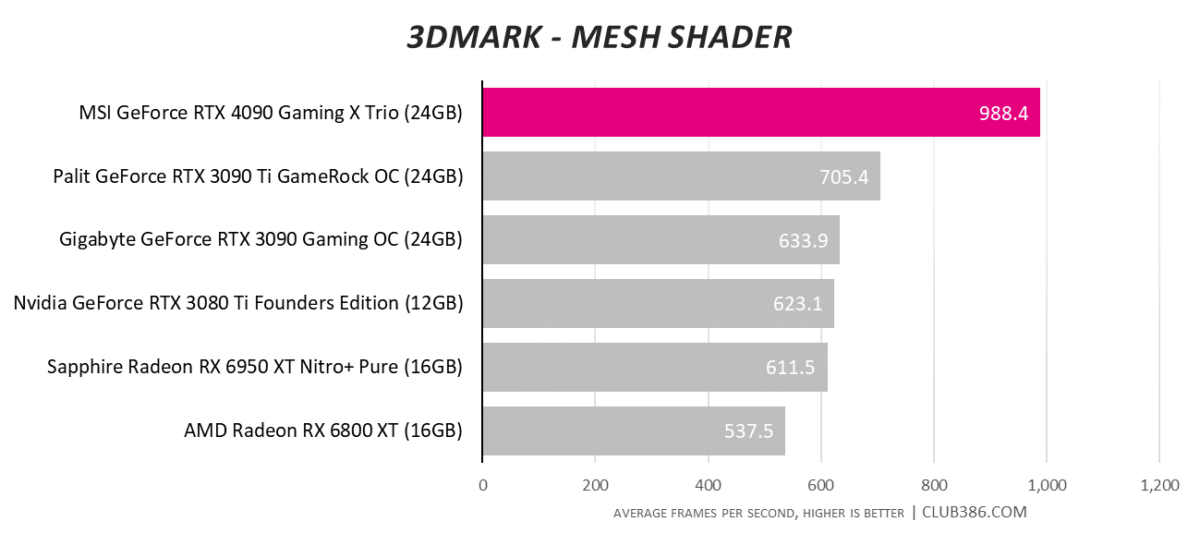

Waiting for raytracing performance to reach an acceptable level? That time has come.

RTX 4090 is in a different league to everything that has come before.

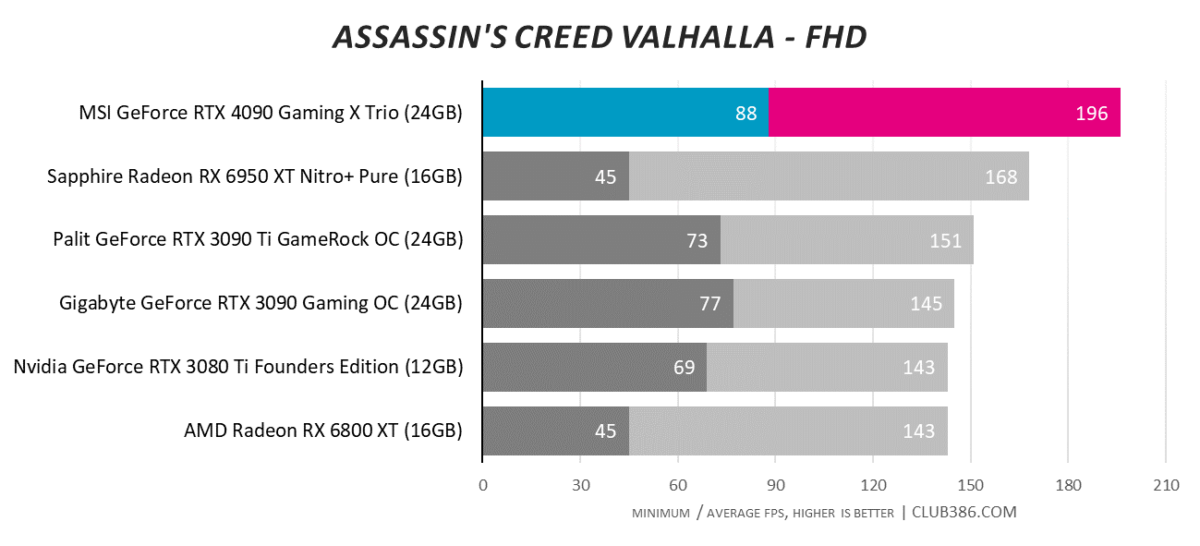

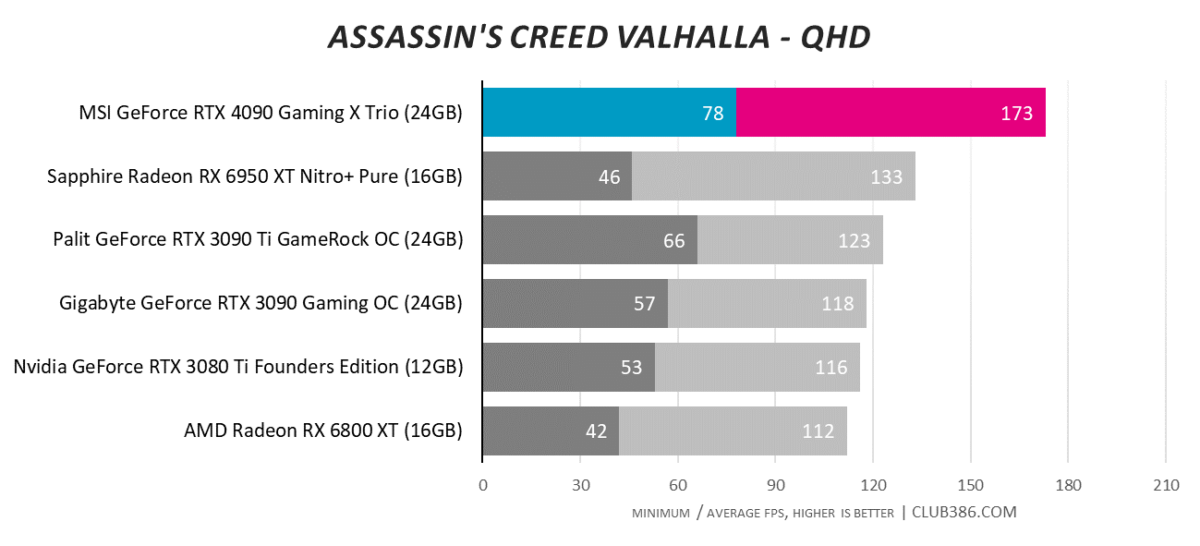

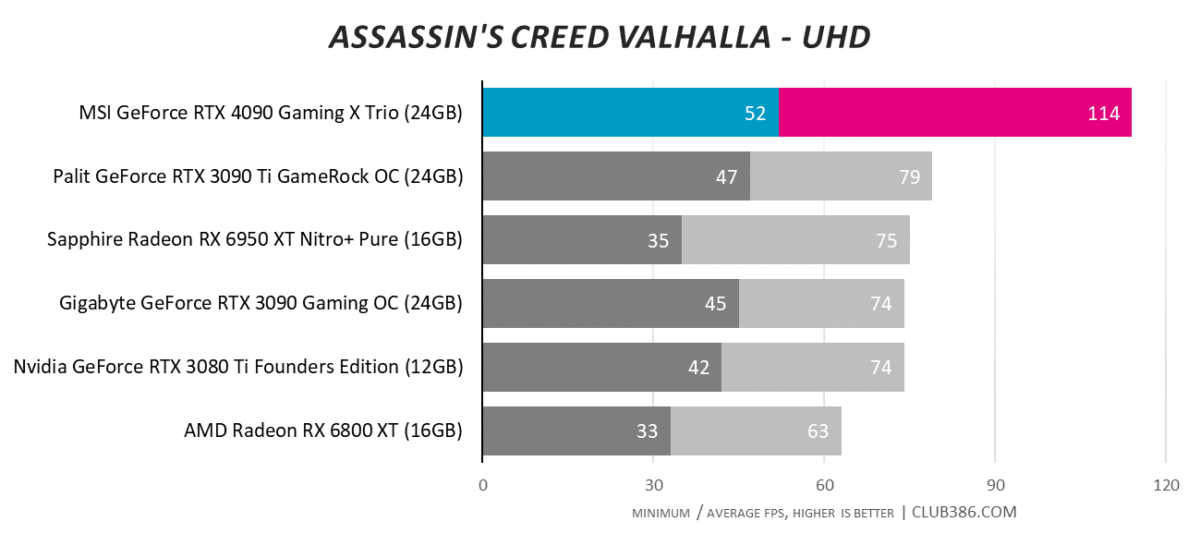

Assassin’s Creed Valhalla

Load up games and RTX 4090’s supremacy remains clear to see. 4K120 TVs are starting to look far more appealing.

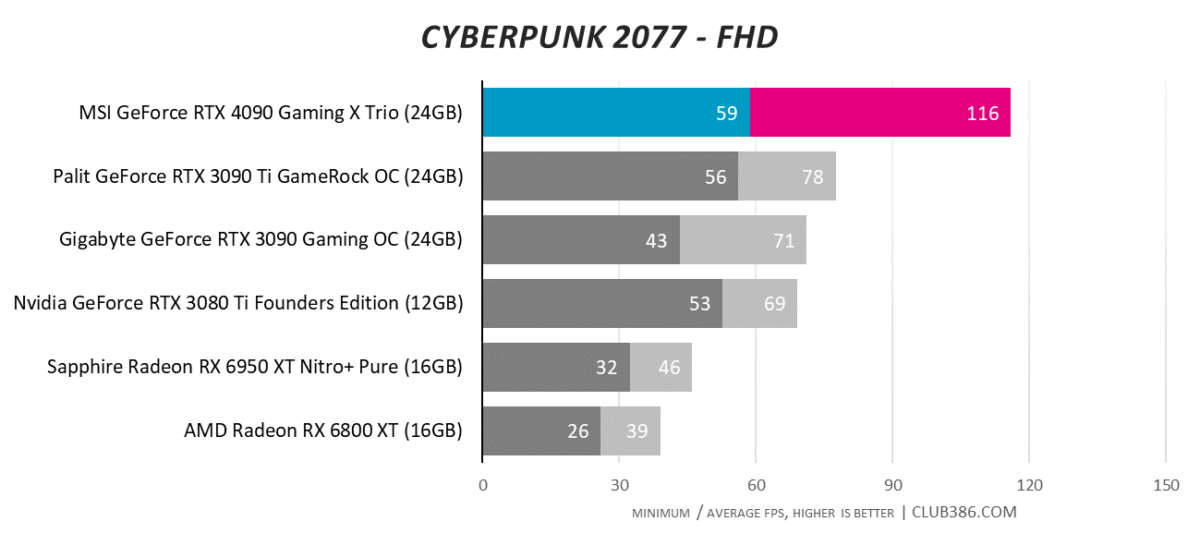

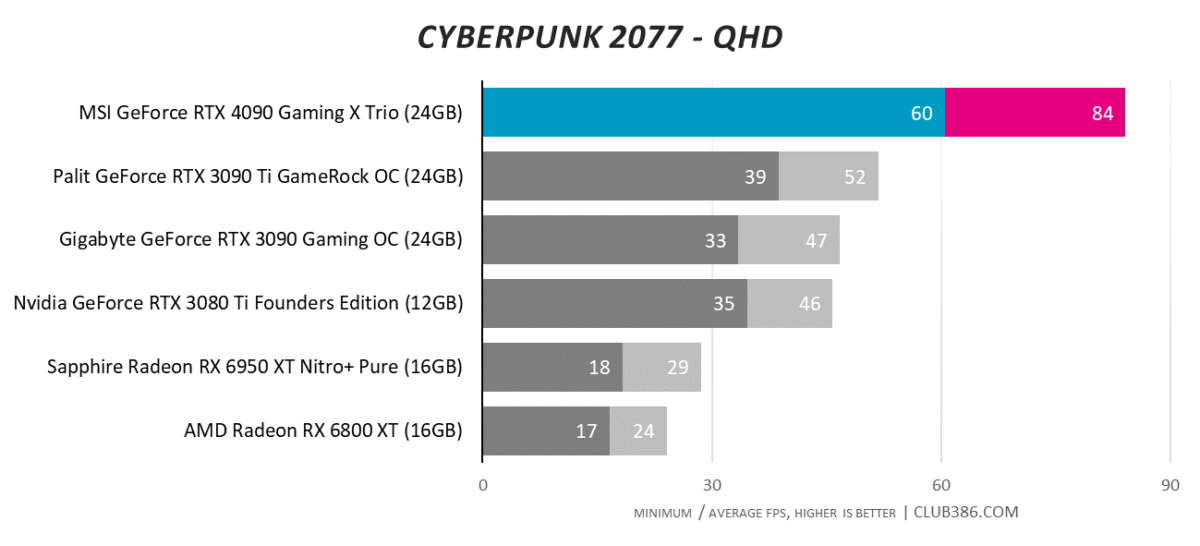

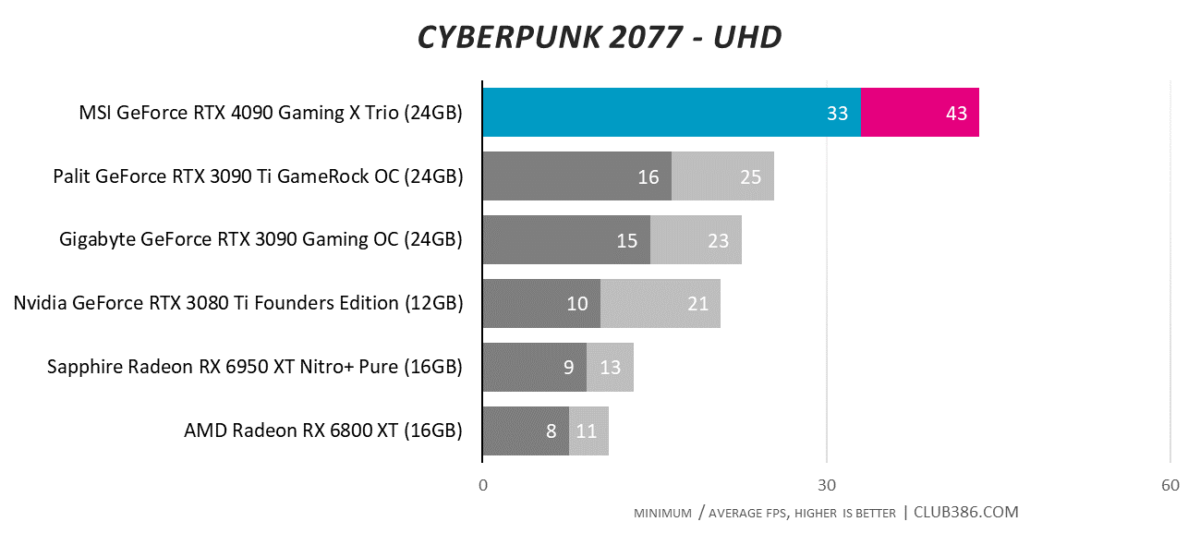

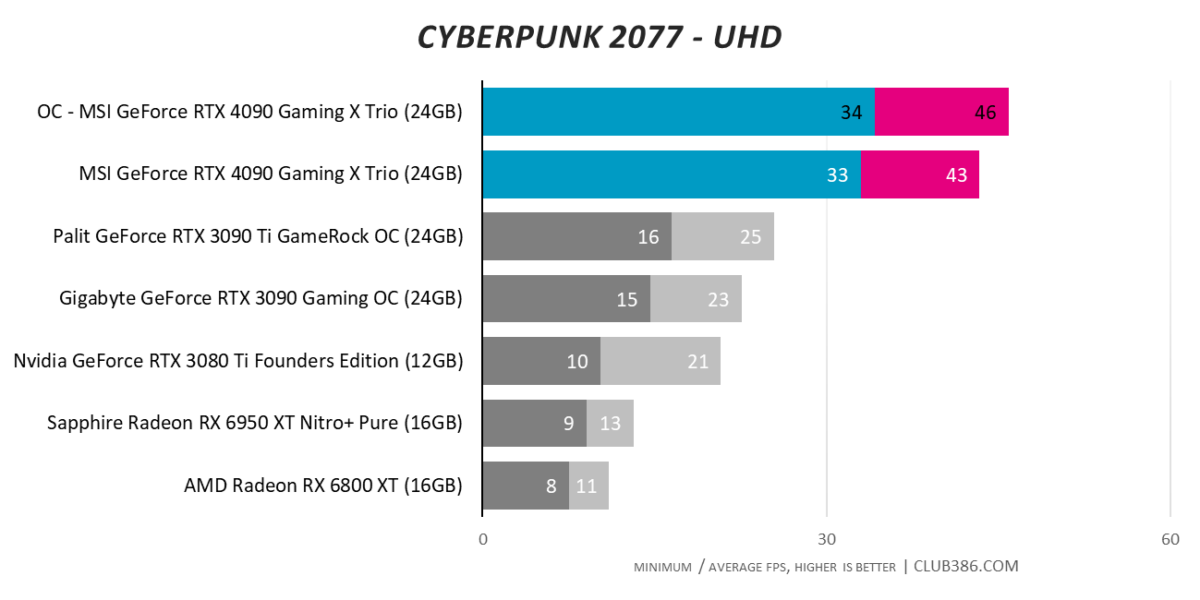

Cyberpunk 2077

We still haven’t hit 4K60 in this extremely taxing title, yet keep reading to see what happens in Cyberpunk 2077 when DLSS 3 comes out to play.

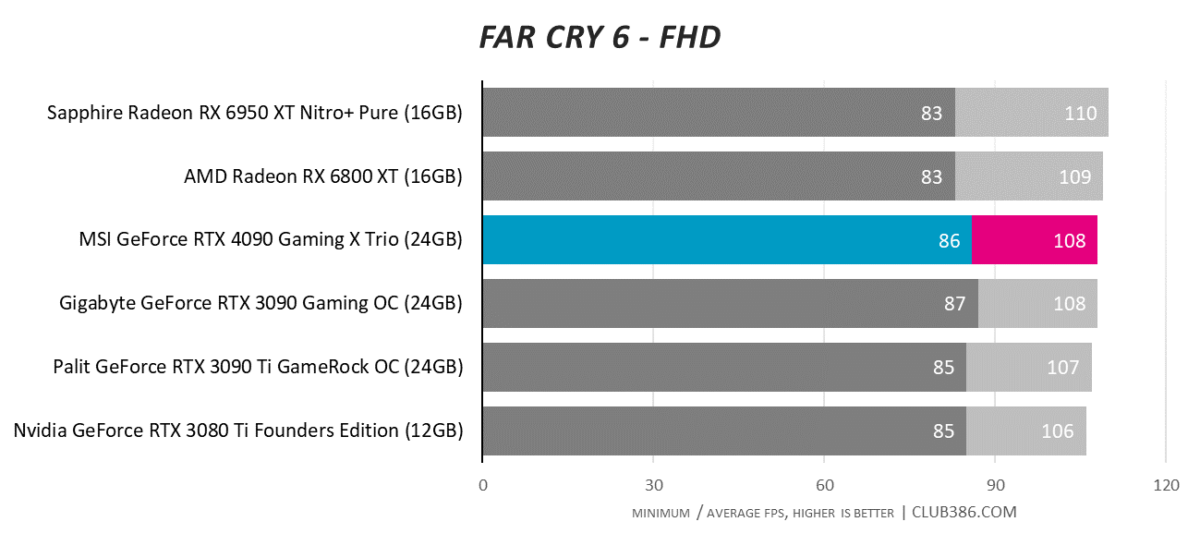

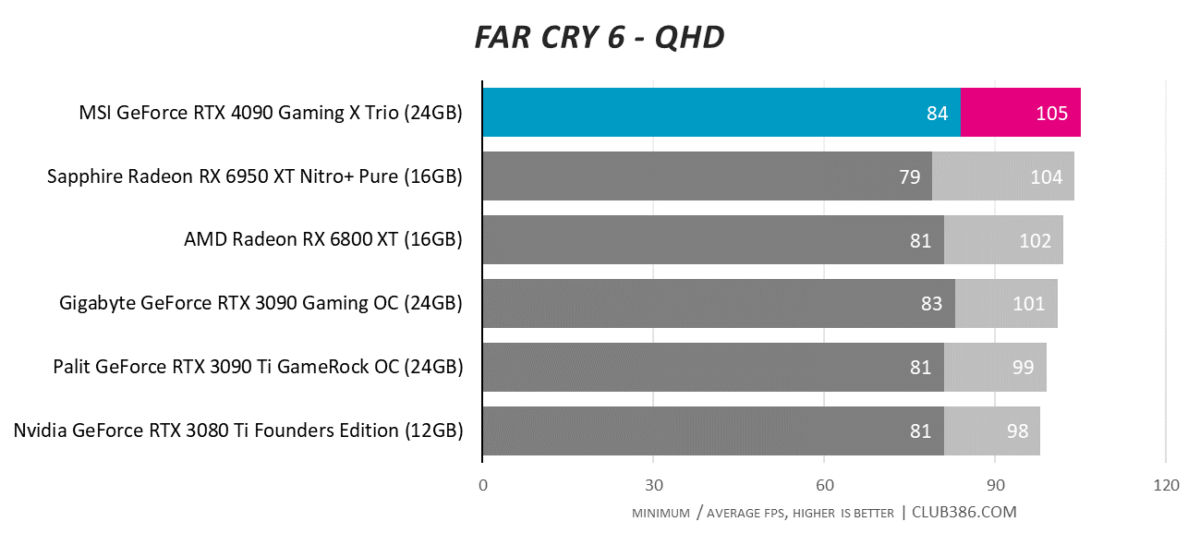

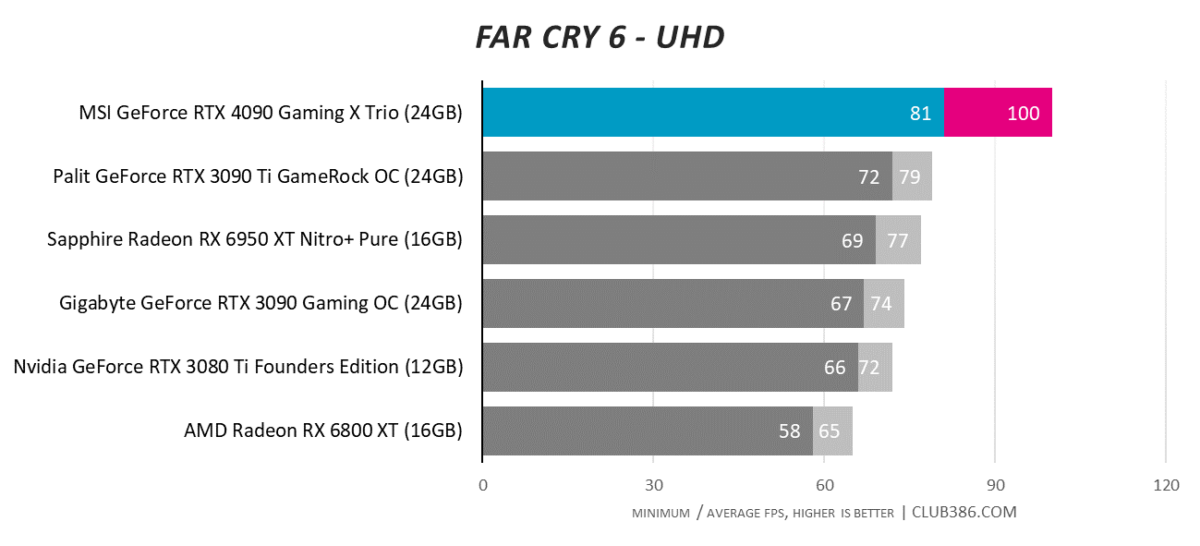

Far Cry 6

Some games won’t show any immediate benefits at lower resolutions. This is a GPU that craves a high-resolution display, and 4K100 is rather lovely with all the raytracing bells and whistles turned on.

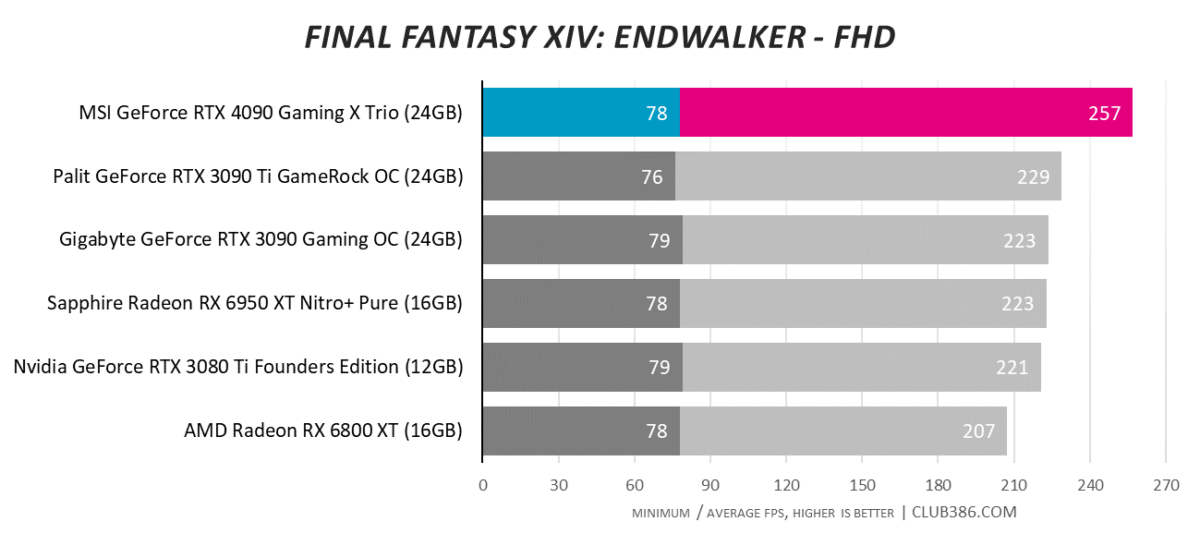

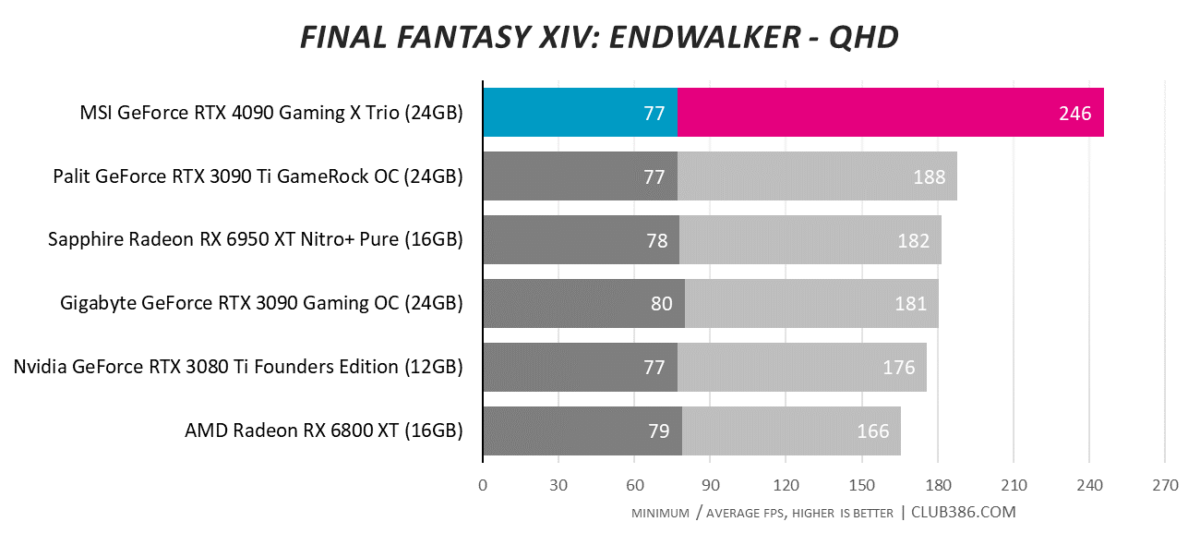

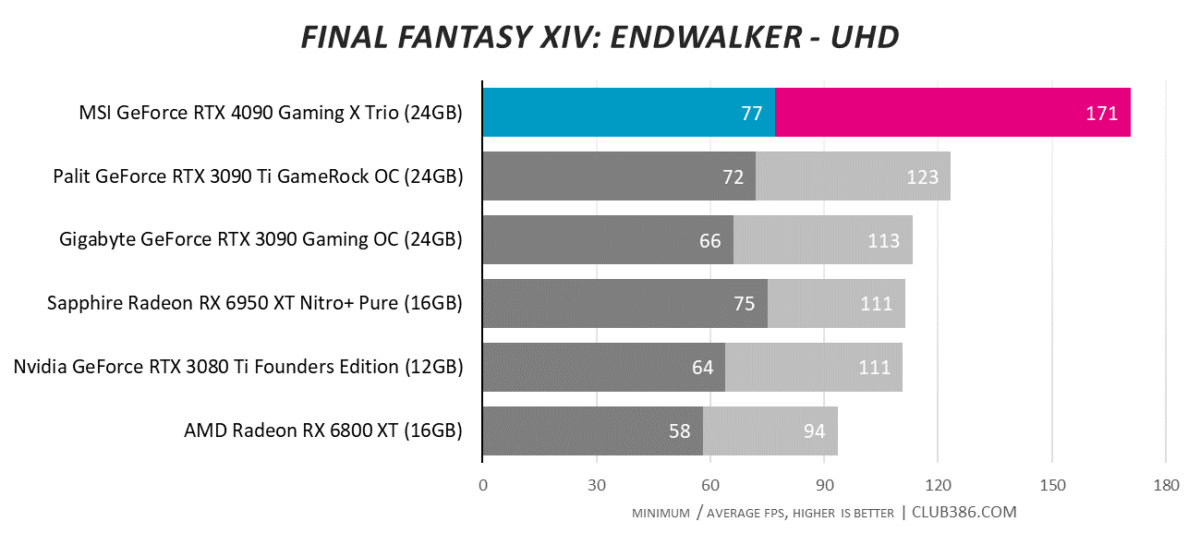

Final Fantasy XIV: Endwalker

Final Fantasy XIV: Endwalker is usually a good sign of a GPU’s rasterisation capabilities. RTX 4090 rules the roost and performance, at times, is so high that developers are having to respond in kind. Fun fact: Nvidia likes to point out that Blizzard raised Overwatch 2’s framerate cap to 600fps in response to RTX 4090 performance.

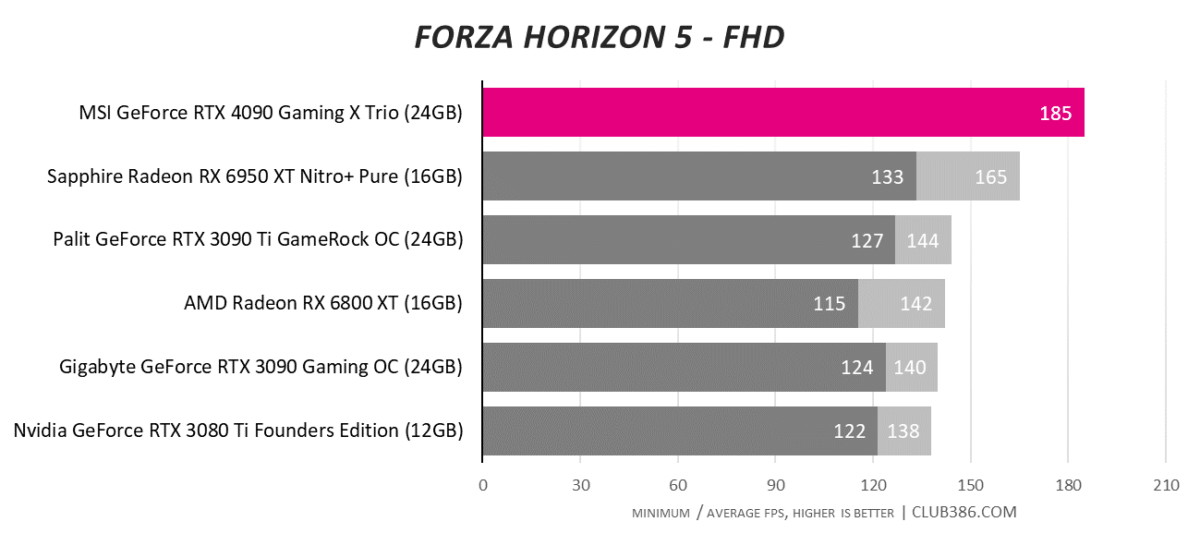

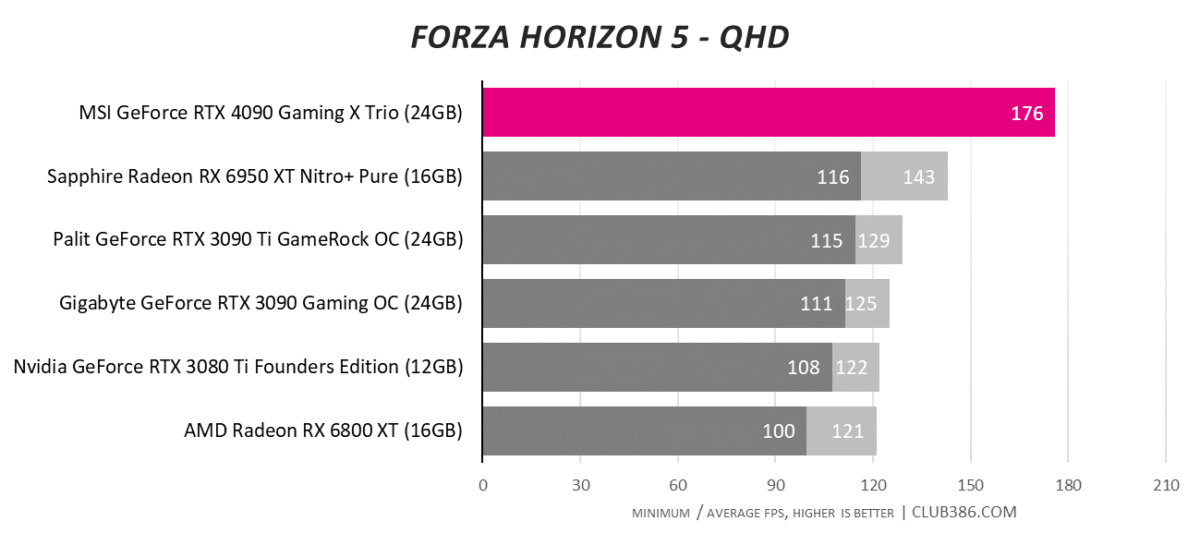

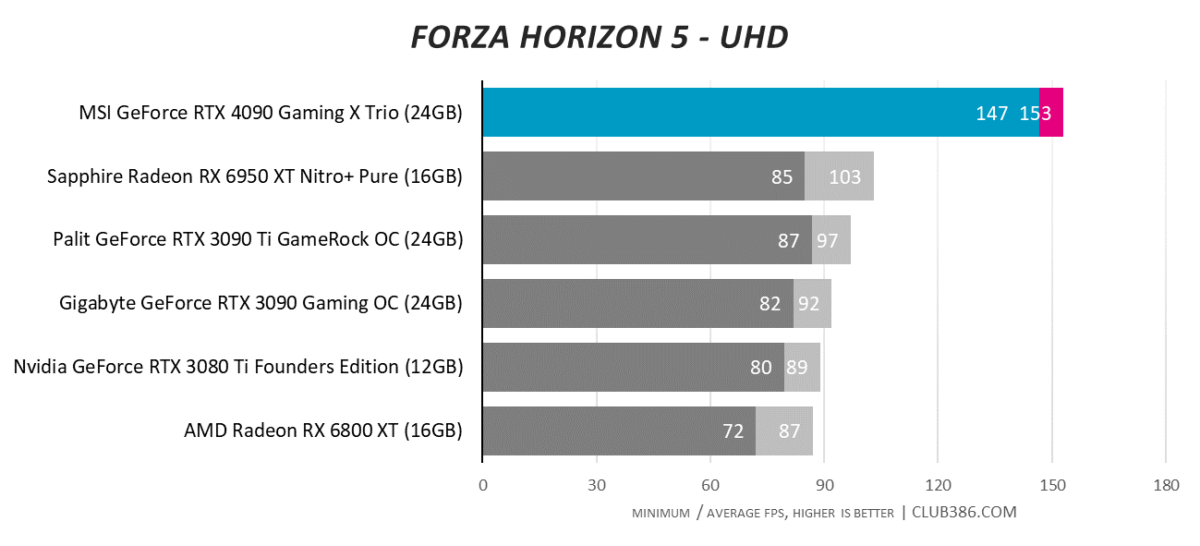

Forza Horizon 5

Wondering why no minimum FPS is charted at FHD or QHD in Forza Horizon 5? The reason is the reported GPU minimum is higher than the in-game average; even a CPU as quick as Ryzen 9 5950X is unable to keep up with the king GeForce. RTX 3090 Ti looks positively timid by comparison.

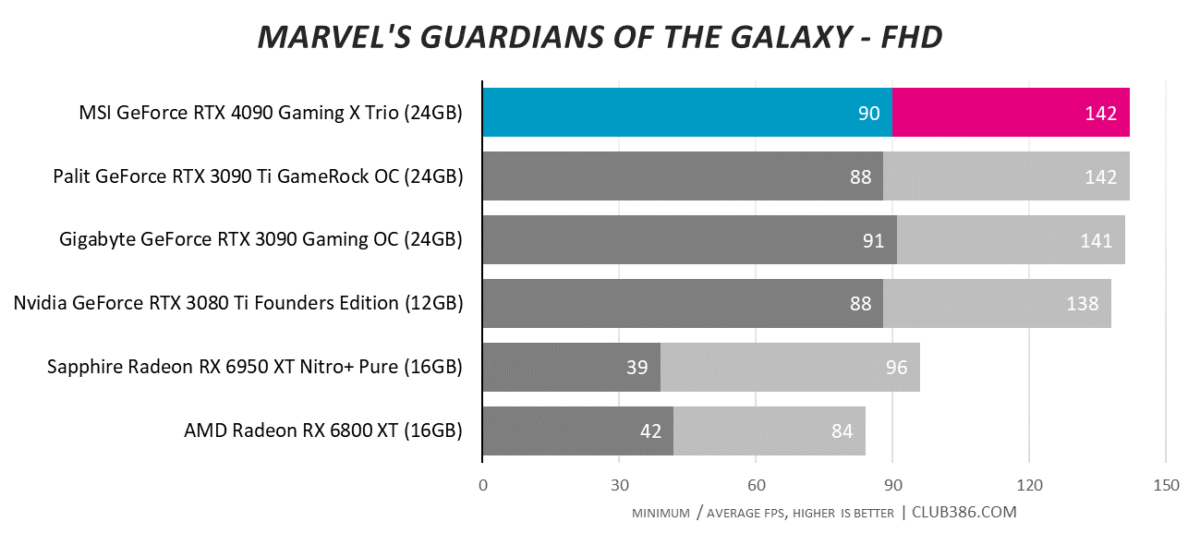

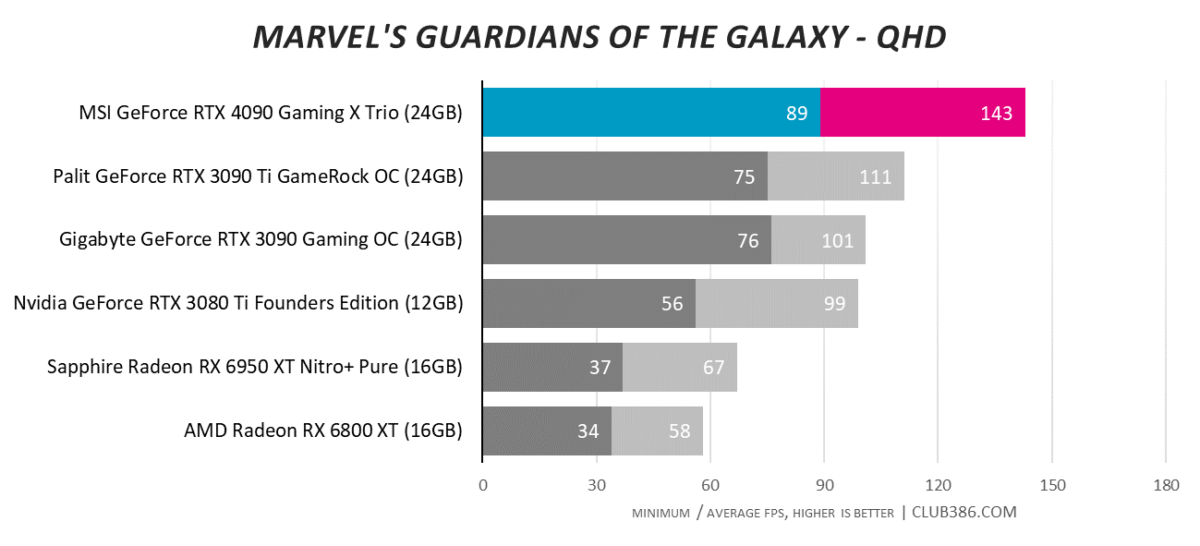

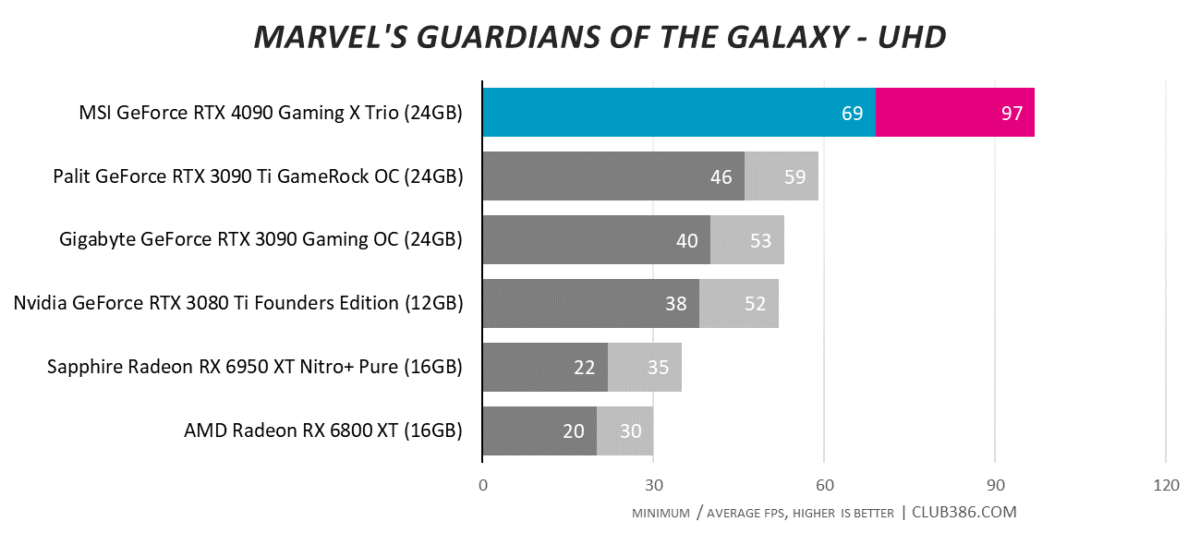

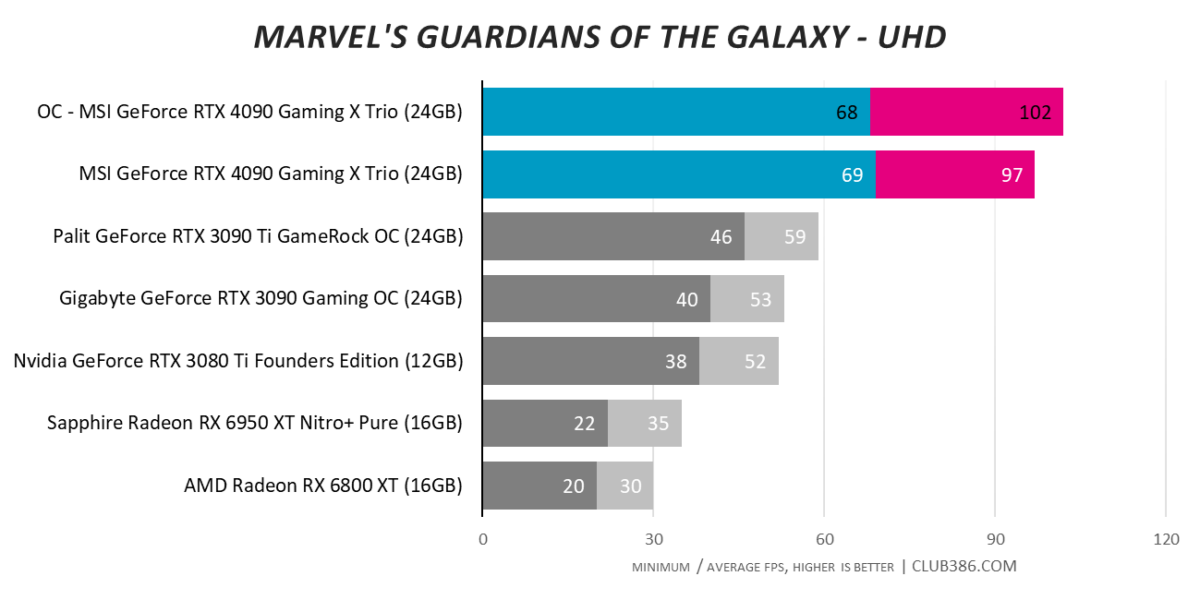

Marvel’s Guardians of the Galaxy

Over 60 per cent quicker than RTX 3090 Ti at a 4K UHD resolution? For gamers demanding the absolute best performance, nothing else comes close to RTX 4090. Can RDNA 3 get within range? We’ll hopefully know by this time next month.

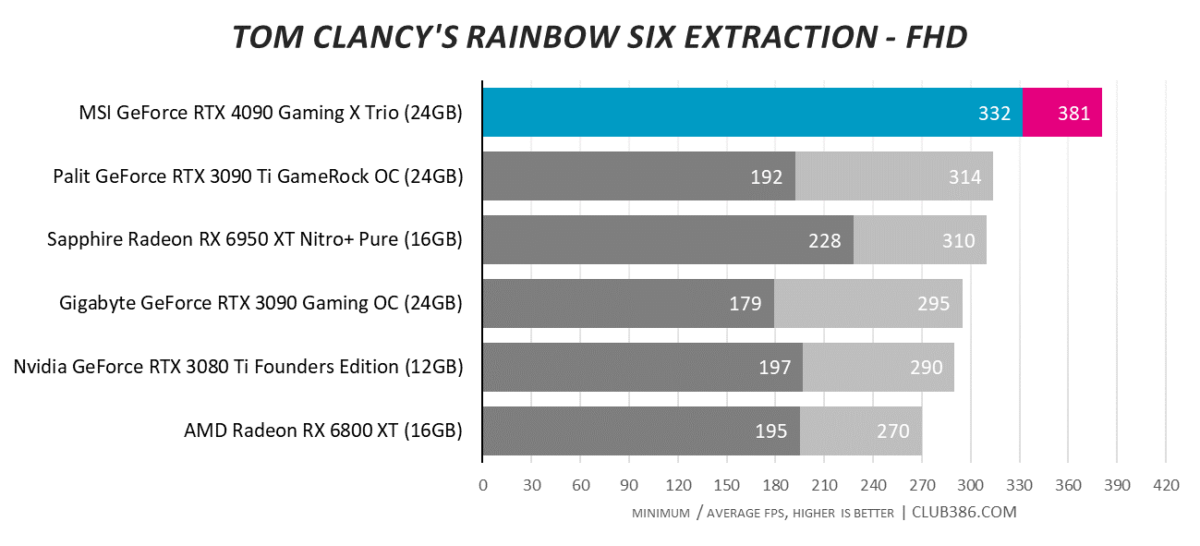

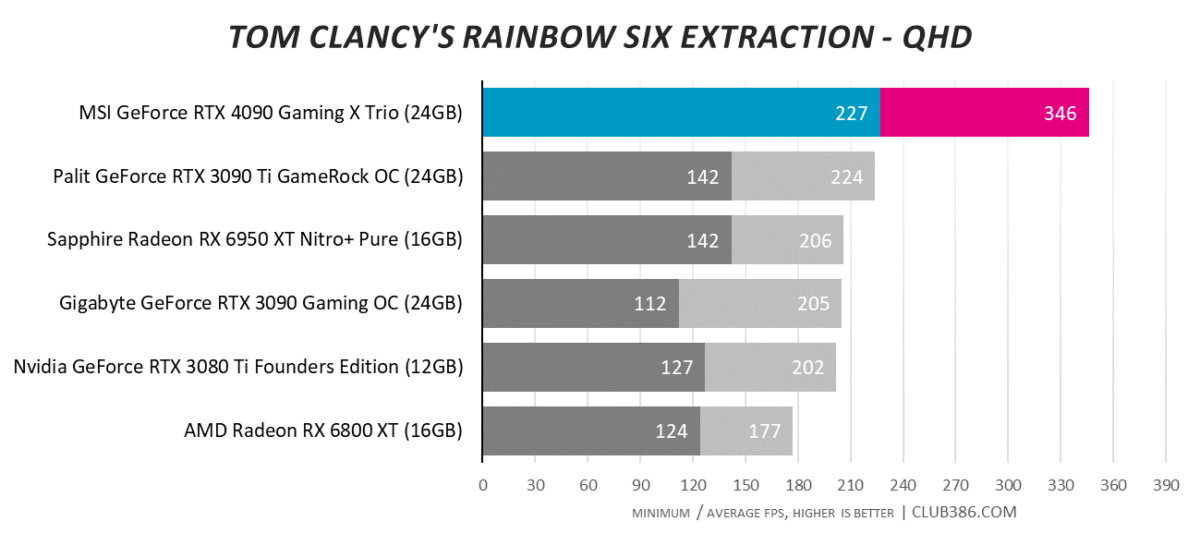

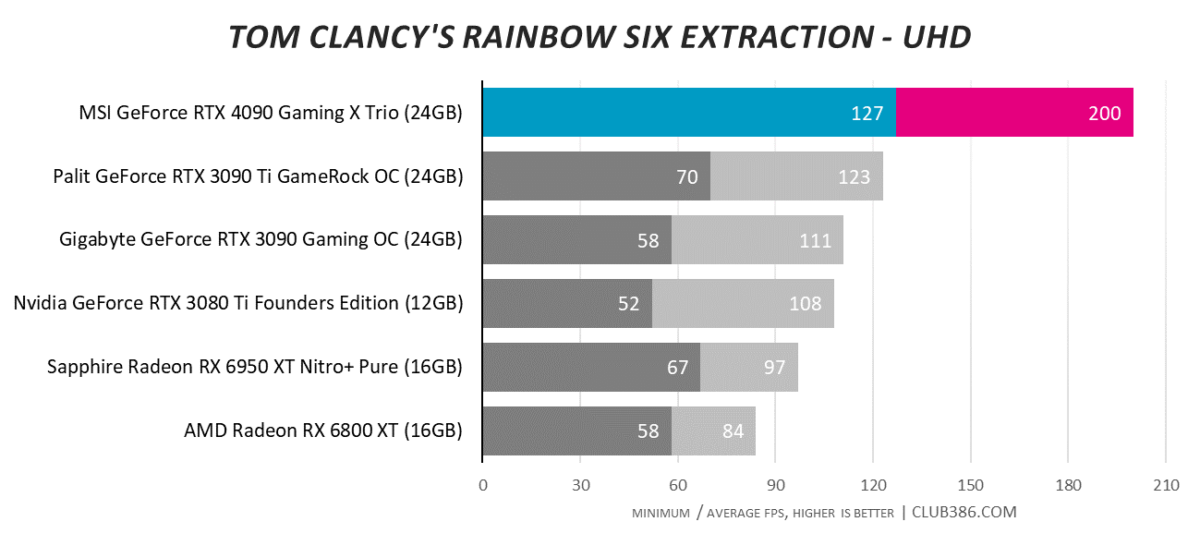

Tom Clancy’s Rainbow Six Extraction

Rainbow Six Extraction best sums up the ridiculous performance potential of RTX 4090, whose minimum frame rate is above the average achieved by every other card. You don’t see that every day. Well, you do if reviewing numerous RTX 4090 GPUs.

Efficiency, Temps and Noise

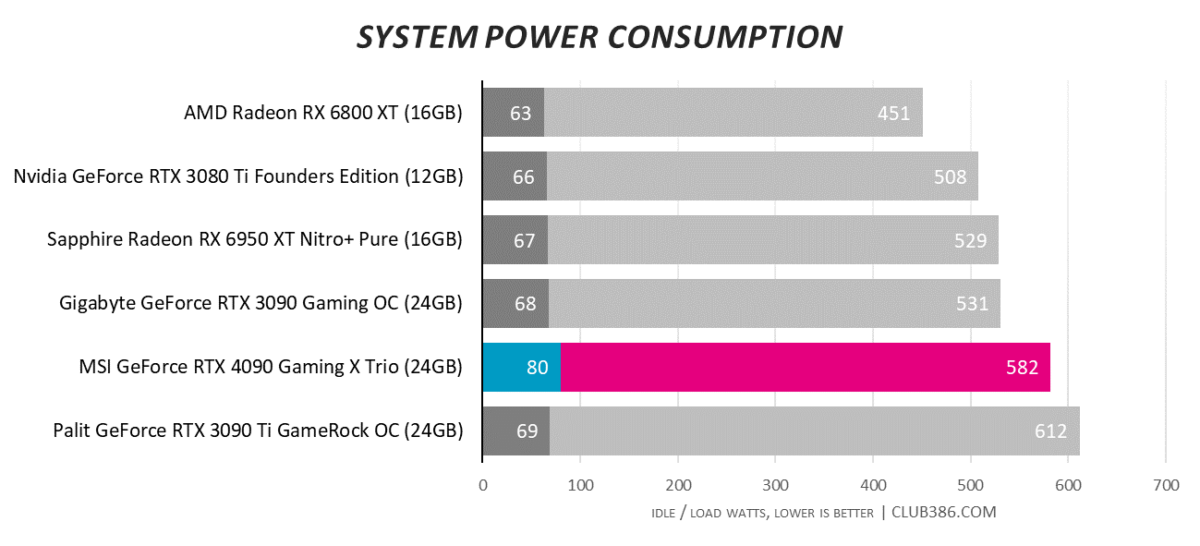

After much speculation, it turns out RTX 4090’s energy efficiency isn’t all that different to last-gen champ, RTX 3090 Ti. In fact, in-game system-wide power consumption is a hair lower, and by playing it safe with regards to frequency, MSI’s Gaming X Trio is the most frugal RTX 4090 we’ve tested thus far. Nearly 600 watts is still considerable in this age of huge electricity costs, of course, but the champ ultimately delivers a big increase in performance-per-watt.

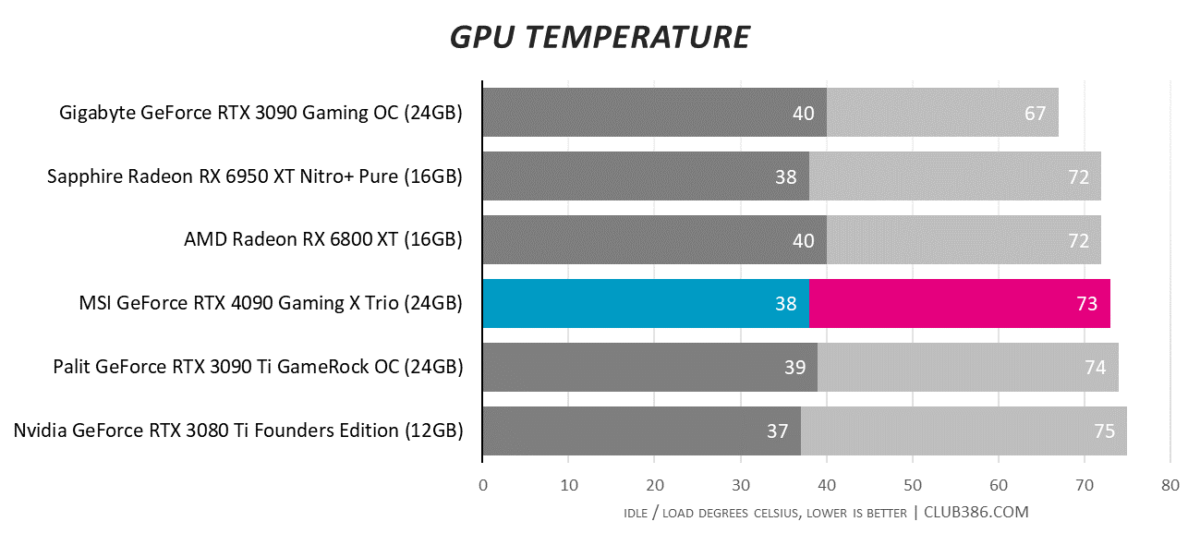

We’ve seen partner cards deliver in-game temperatures in the high 60s. MSI trades vapour chamber for more traditional cooling, yet Gaming X Trio runs perfectly cool inside our fully-built, real-world test platform.

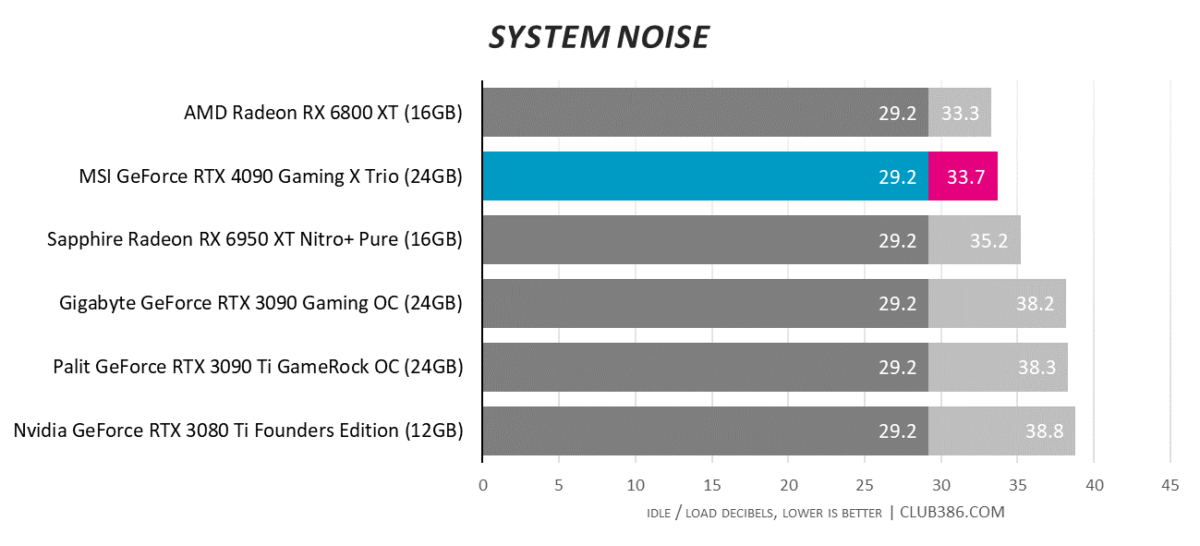

In fact, we’d go as far as to say we’d happily accept temperatures in the low 70s in exchange for ultra-quiet acoustics. We wondered which partner would be first to deliver sub-35dB noise levels while gaming, and MSI has delivered. Considering the amount of raw power beneath the hood, the card is amazingly quiet at all times. We might just have been lucky, but our review sample also exhibits little in the way of coil whine, which is always a bonus.

Overclocking

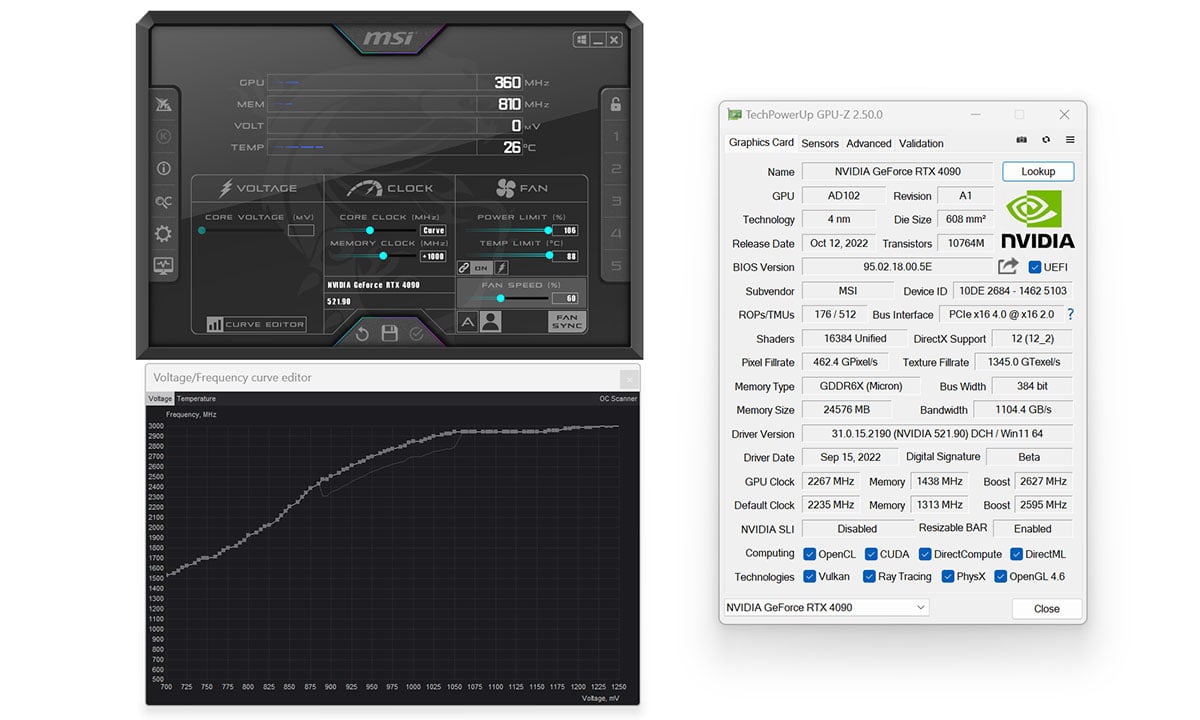

On-paper power limits suggest Gaming X Trio isn’t geared for extreme tinkering – a maximum adjustment of +6 per cent lets the card scale to a maximum 480W, whereas Suprim X reaches 520W – but for the casual overclocker there’s still decent headroom to play with.

Using Afterburner’s automated OC scanner, an optimised frequency curve translates to in-game frequency tickling 2.9GHz, while memory runs happily at an effective 23Gbps.

Would we risk pushing a £2,000 card to higher frequencies in exchange for a few extra frames per second on an already top performer? Absolutely not. We might be inclined to boost performance another way, mind.

DLSS 3

Having spent the past few decades evaluating GPUs in familiar fashion, it is eye-opening to witness a sea change in how graphics performance is both delivered and appraised.

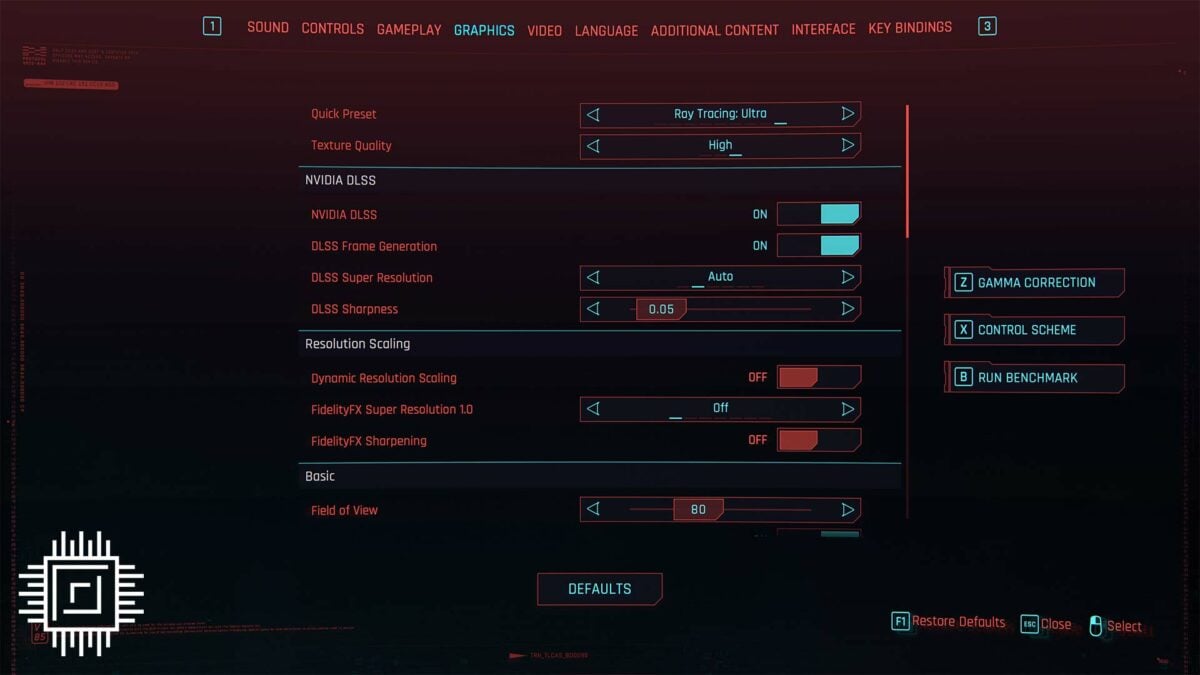

RTX 4090 may boast thunderous rasterisation and lightning RT Cores, yet DLSS 3 is the storm that’s brewing. Rejogging the memory banks after all those benchmarks, remember developers now have the option to enable individual controls for Super Resolution and/or Frame Generation. The former, as you’re no doubt aware, renders at a lower internal resolution determined by the chosen setting and upscales to the display resolution; at 4K UHD, Ultra Performance works its magic on a 1280×720 render, Performance on 1920×1080, Balanced on 2227×1253, and Quality upscales from 2560×1440.

On top of that, Frame Generation inserts a synthesised frame between two rendered frames, resulting in multiple configuration options. Want the absolute maximum framerate? Switch Super Resolution to Ultra Performance and Frame Generation On, leading to a 720p upscale from which whole frames are also synthesised.

Want to avoid any upscaling but willing to live with frames generated from full-resolution renders? Then turn Super Resolution off and Frame Generation On. Note that enabling the latter automatically invokes Reflex; the latency-reducing tech is mandatory, once again reaffirming the fact that additional processing risks performance in other areas.

Reviewers have been granted access to pre-release versions of select titles incorporating DLSS 3 tech. Initial impressions are that developers are still getting to grips with implementation. Certain games require settings to be enabled in a particular sequence for DLSS 3 to properly enable, while others simply crash when alt-tabbing back to desktop. There are other limitations, too. DLSS 3 is not currently compatible with V-Sync (FreeSync and G-Sync are fine) and Frame Generation only works with DX12.

Point is, while Nvidia reckons uptake is strong – some 35 DLSS 3 games have already been announced – these are early days, and some implementations will work better than others. Cyberpunk 2077, formerly a bug-ridden mess, has evolved dramatically over the months into a much-improved game and one that best showcases what raytracing and DLSS can do.

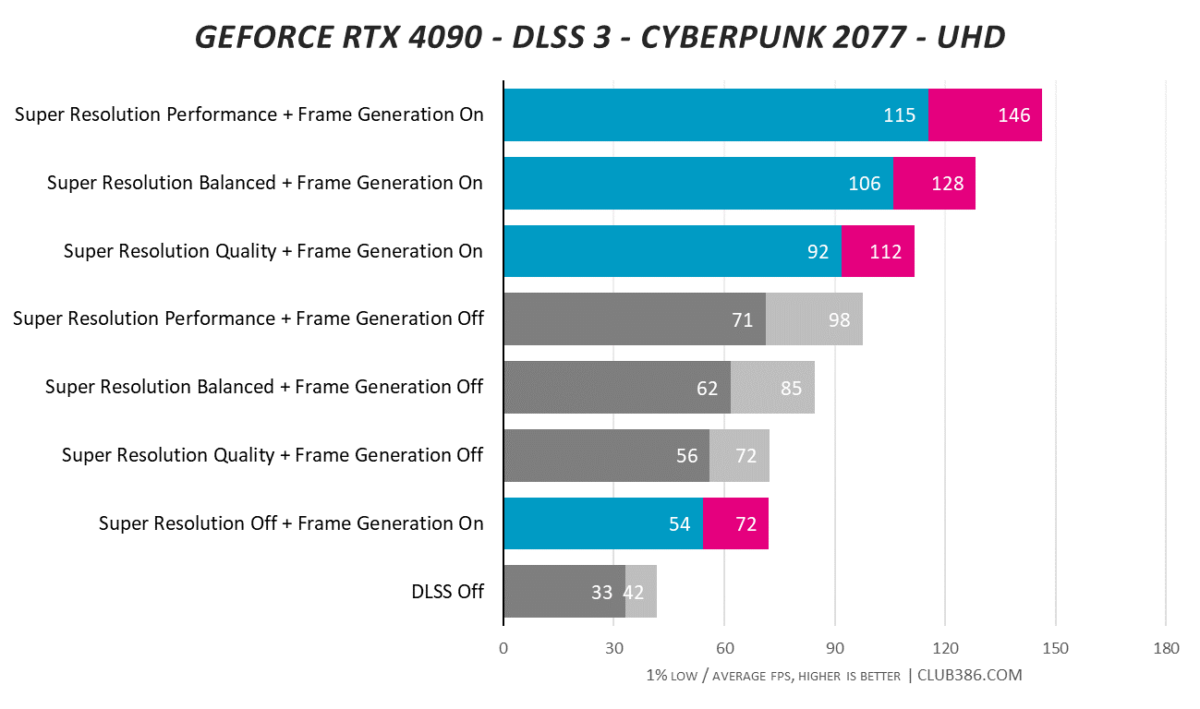

Performance is benchmarked at a 4K UHD resolution with raytracing set to Ultra and DLSS in eight unique configurations (we weren’t kidding when we said gamers will have plenty of options). Putting everything in the hands of rasterisation evidently isn’t enough; 42 frames per second is disappointing.

Turn on Frame Generation, whereby frames are synthesised from the native 4K render, and framerate jumps by 71 per cent, equivalent to Super Resolution at the highest quality setting. Beyond that, performance scales as image quality degrades. Maximum Super Resolution (1440p upscaling) with Frame Generation bumps FPS up to 112, and dropping Super Resolution quality down to Performance (1080p upscaling) sends framerate to 146. This is where Nvidia’s claims of up to a 4x increase in performance become apparent.
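To see how close those results get to the headline claim, this Python sketch expresses each quoted figure as a multiple of the 42fps native baseline. The Frame Generation-only framerate is derived from the quoted 71 per cent uplift rather than read off the chart, so treat it as approximate.

```python
NATIVE_FPS = 42  # 4K UHD, raytracing Ultra, DLSS off

# Framerates quoted in the text for Super Resolution plus Frame Generation.
RESULTS = {
    "Quality SR + Frame Generation": 112,
    "Performance SR + Frame Generation": 146,
}

# Derived from the stated 71 per cent uplift over native.
frame_gen_only_fps = NATIVE_FPS * 1.71
speedups = {mode: fps / NATIVE_FPS for mode, fps in RESULTS.items()}

print(f"Frame Generation only: ~{frame_gen_only_fps:.0f}fps")
for mode, factor in speedups.items():
    print(f"{mode}: {factor:.2f}x native")
```

Performance mode with Frame Generation works out at roughly 3.5x native in this test, which is within sight of the up-to-4x claim.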

DLSS 3 works, the numbers attest to that, but there are so many other questions raised here. Performance purists will naturally lament the fact that not all frames are properly rasterised, and though Nvidia reckons developers are on board, you have to wonder how game artists feel about their creations being artificially amalgamated.

A lot of the answers ultimately revolve around how well DLSS maintains image quality. The pros and cons of Super Resolution are well documented, but Frame Generation is an entirely new beast, and even at this early stage, signs are good.

The screengrabs above, showing a synthesised frame between two renders, suggest Nvidia’s optical flow accelerator is doing a fine job of reconstructing a frame, though visual artifacts do remain. HUD elements, in particular, tend to confuse DLSS 3 (look for the 150M objective marker as an example), but on the whole it is a surprisingly accurate portrayal.

It is also worth pointing out that we’re having to pore through individual frames just to find artifacts. You may discover the odd missing branch or blurred sign, but inserted frames go by so quickly that errors are practically imperceptible during actual gameplay. What you do notice instead is how much smoother the experience is with DLSS 3 enabled.

For gamers with high-refresh panels the tech makes good sense, of course, but convincing the e-sports crowd will be easier said than done. That’s an arena where accurate frames matter most.

There’s also latency to take into consideration. In the above tests, average latency was recorded as 85ms with DLSS turned off completely. Latency drops to 31ms with Super Resolution enabled to Quality mode, but then rises to 45ms with both Super Resolution and Frame Generation working in tandem.
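Putting those three latency readings side by side, a short Python sketch shows what Frame Generation costs relative to Super Resolution alone, and how the combined result still compares with DLSS off. The labels are ours; the millisecond values are those recorded above.

```python
# Average latency in milliseconds, as recorded in our Cyberpunk 2077 tests.
LATENCY_MS = {
    "DLSS off": 85,
    "Super Resolution (Quality)": 31,
    "Super Resolution + Frame Generation": 45,
}

baseline = LATENCY_MS["DLSS off"]
# Extra latency added by switching Frame Generation on over Super Resolution alone.
frame_gen_cost_ms = (
    LATENCY_MS["Super Resolution + Frame Generation"]
    - LATENCY_MS["Super Resolution (Quality)"]
)
# Percentage reduction in latency for each mode versus DLSS off.
reduction_pct = {
    mode: (baseline - ms) / baseline * 100
    for mode, ms in LATENCY_MS.items()
    if mode != "DLSS off"
}

print(f"Frame Generation adds {frame_gen_cost_ms}ms over Super Resolution alone")
for mode, pct in reduction_pct.items():
    print(f"{mode}: {pct:.0f}% lower latency than DLSS off")
```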

Plenty of food for thought. DLSS 3 examination will continue in earnest with the release of compatible retail games. For now, consider us intrigued and a little bit optimistic.

Conclusion

Semiconductor shortages may be easing, but demand for the very best hardware evidently has not. The sheer might of RTX 4090 has swayed those who were inclined to sit on the fence, and in the week following launch, stock has vanished rapidly from store shelves.

Nvidia and its partners need to help eradicate scalping in an effort to get cards into the hands of actual enthusiasts. We suspect it’ll be a drawn-out process, yet when the dust eventually settles, MSI’s GeForce RTX 4090 Gaming X Trio delivers exactly what you might expect. A hulking take on a fierce GPU, it promises true 4K120 gaming potential in an oversized form factor designed to run cool and quiet at all times.

The biggest Ada Lovelace card we’ve seen thus far is also by some distance the quietest, making it a fine prospect for those who favour a serene gaming experience. There are prettier cards out there, though it’s a case of where priorities lie; some offer lavish overclocking potential, others favour dazzling RGB – Gaming X Trio keeps things muted.

Verdict: Ripping up benchmarks without breaking a sweat, Gaming X Trio is a fine implementation of an extreme GPU.