After years of speculation and months of hype, Intel is finally ready to play in the discrete graphics card market. Today, Team Blue formally announces the Arc family of graphics processors, starting off with Arc 3, Arc 5 and Arc 7 primed for thin-and-light and gaming notebooks.

Intel’s decision to bring Arc dGPU technology, codenamed Alchemist, to mobile first speaks volumes about market opportunity. Arc 3 is available in select laptops right now, while Arc 5 and Arc 7 will follow in the summer. Let’s examine Arc from a top-down approach.

Arc 3 available now

Entry-level Arc 3 is designed to offer casual gamers the next performance step up from integrated graphics. Available in two flavours differentiated by tweaks to specifications and power, Arc A350M and A370M ply the popular 25-50W TDP territory.

Both are built from the same base TSMC 6nm silicon and carry a common feature set, which is a theme that spills over to all Arc GPUs. If an application or game runs on one, it runs on them all, albeit more slowly for lower-end models.

This strategy is most curious with respect to hardware-based ray tracing, which is available on even the A350M. Going by the lacklustre ray tracing performance of entry-level Radeon and GeForce cards, we don’t expect much from Arc 3’s six- and eight-unit complement. A tickbox exercise more than real-world usefulness? The jury is still out.

Zooming out, all Arc mobile GPUs use one of two SoCs known as ACM-G10 and ACM-G11. It’s clear A350M and A370M harness the smaller, leaner G11 silicon, which is capped at eight Xe cores and a 96-bit memory bus; all others run off the substantially bigger G10, which is up to four times as powerful.

Speaking of sizes, G10 is reckoned to measure 406mm² and contain 21.7bn transistors; G11 is much smaller, coming in at 157mm² and 7.2bn transistors.

Specifications

Frequencies seem low at first glance, ranging from 900MHz through to 1,650MHz. Intel says this is deliberate and shouldn’t be compared to rivals’ specifications. Why? Because Arc frequency is set by the heaviest workload and lowest TDP for that class of GPU. For example, A370M ought to run the most complex, shader- and ray tracing-heavy game at a guaranteed 1,550MHz and 35W TDP. It’s highly probable the actual clock will be much higher, so Team Blue wants to underpromise and overdeliver.

Intel cuts the PCIe 4.0 bandwidth in half for G11, to x8, and that’s common in the industry for cheaper GPUs. On a positive note, however, the media engine, which we’ll come to later, is preserved intact across all Arc products. Intel believes it has class-leading technology and performance on this front.

Xe HPG microarchitecture

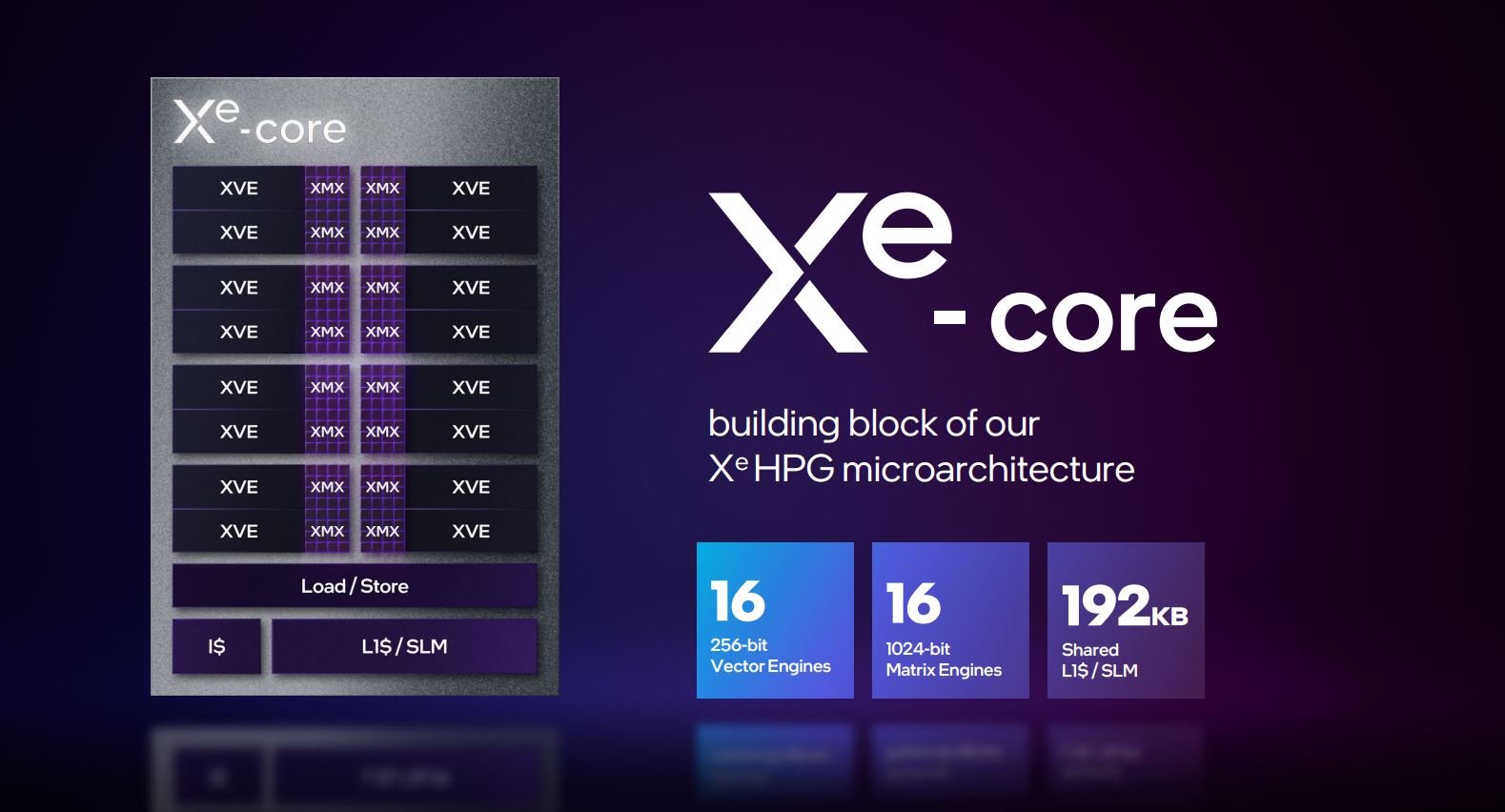

Analogous to Nvidia’s Streaming Multiprocessors and AMD’s Compute Units, Xe cores are the foundation of Arc’s power. They implement the Xe HPG microarchitecture and are grouped into Xe Render Slices. These were announced at Architecture Day 2021 but are worth a recap.

The Render Slice is an aggregation of technologies used as the backbone of every Arc GPU. One Slice is home to four Xe cores used for rasterisation and four ray tracing units present to calculate ray traversal and triangle intersection. Eight of these Slices combine to build out the 32-core Arc 7 A770M, for example. This means larger Arc GPUs feature linear increases in all key computational blocks. Look back at the specifications and you’ll note that entry-level A350M, complete with six Xe cores, actually takes in two Render Slices, likely with one core deactivated in each.

Each Xe core is itself composed of 16 256-bit-wide SIMD vector engines (or EUs) for handling rasterisation. Highlighted in purple are 16 matrix engines (XMX) optimised for processing emerging AI tasks. Like Nvidia’s Tensor Cores, these engines are effectively giant matrix-multiply calculators.

There’s a shared 192KB pool of memory per Xe core that’s dynamically configured by the driver as either standard L1 cache or shared local memory. If a lot of the core seems familiar, it is, as Intel uses the learnings from previous-generation Xe LP – powering the latest integrated graphics – and massages the best portions into Xe HPG whilst adding modern technologies. Intel claims a 1.5x performance per watt advantage over its own IGP, but how much of that is attributable to process and how much to microarchitecture advancements is unknown.

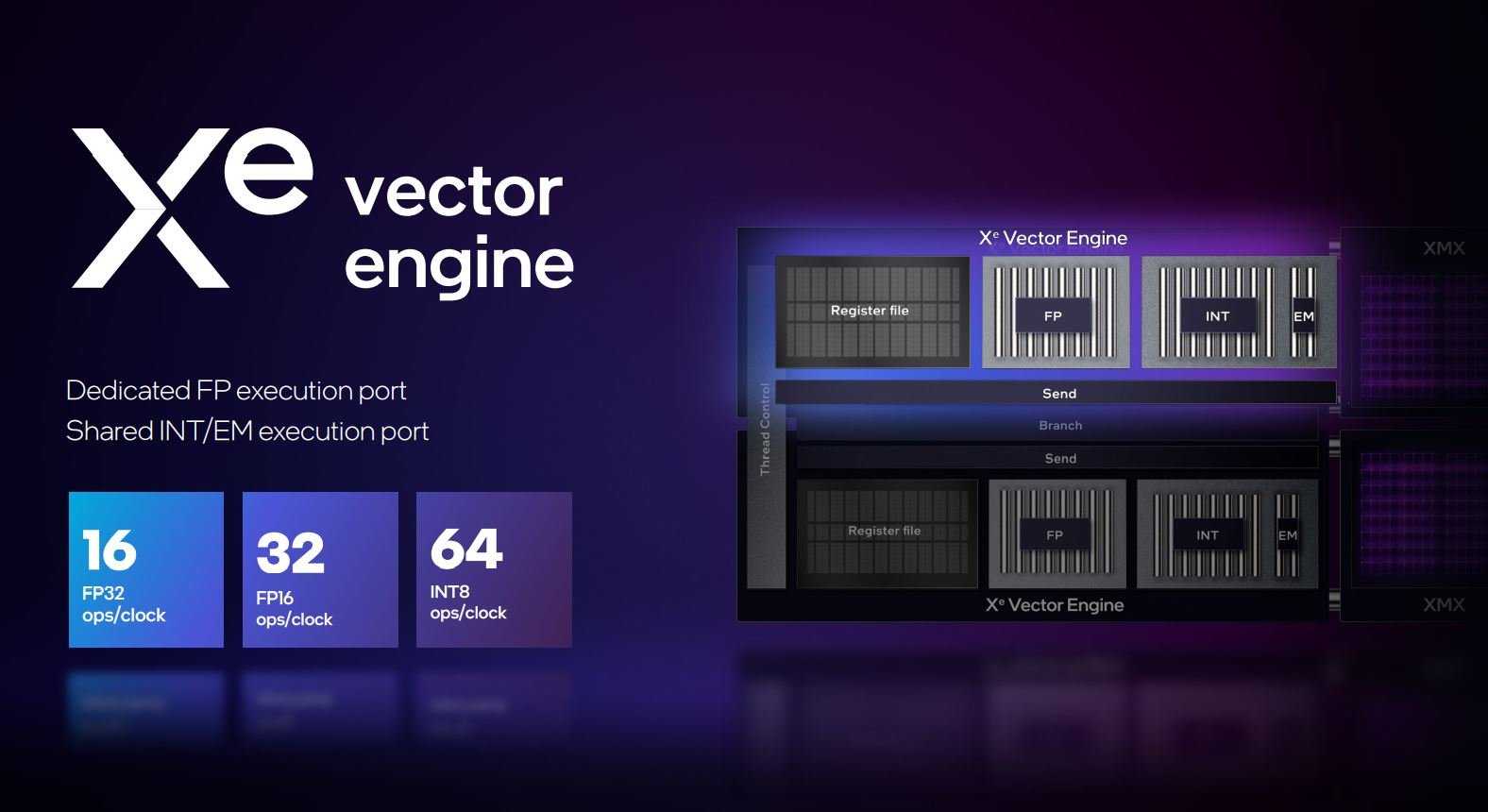

Going one level deeper, each Xe vector engine – remember, these are engines, not standalone cores – now has a dedicated floating-point execution port, and like Nvidia’s recent designs, floating point can run alongside integer instructions, keeping the cores busy as the mix of instruction types within gaming code becomes more equal.

Each vector engine itself is home to eight ALUs, or shaders in the traditional sense, so if you do all the math, A350M has 6 Xe cores, each containing 16 vector engines of 8 ALUs apiece, so 768 in sum (6x16x8). A770M, meanwhile, has 32 Xe cores, or 4,096 shaders (32x16x8).
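For anyone who wants to check the sums, here is a minimal Python sketch of that multiplication. It uses only the per-core figures quoted above; the function and constant names are ours for illustration, not Intel’s.

```python
# Per-core figures quoted in the article for Xe HPG.
VECTOR_ENGINES_PER_XE_CORE = 16
ALUS_PER_VECTOR_ENGINE = 8


def shader_count(xe_cores: int) -> int:
    """Return the number of ALUs (shaders) for a given Xe core count."""
    return xe_cores * VECTOR_ENGINES_PER_XE_CORE * ALUS_PER_VECTOR_ENGINE


# Core counts for the two models discussed in the text.
for name, cores in (("A350M", 6), ("A770M", 32)):
    print(f"{name}: {cores} Xe cores -> {shader_count(cores):,} shaders")
```

Plugging in any other Xe core count gives the shader total for that model in the same way.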

Peering into one of the 16 XMX engines contained within each core shows it has four times the throughput of the vector engine. Usually run in concert with lower-precision data for AI computation, typically 8-bit, XMX pushes through 256 operations per clock. If you only take away one aspect from this discussion, then let it be this: Intel is allocating a reasonable chunk of silicon real estate to specialised processors for AI, underscoring where it thinks gaming and general GPU workloads are heading. GPU architects only invest in hardware when the requirement is obvious and impossible to ignore.
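To put the per-engine figure in context, the sketch below scales it up to a whole GPU. It is a back-of-the-envelope estimate built from the numbers in this article (16 XMX engines per Xe core, 256 8-bit operations per clock each), and it assumes every engine is busy on every clock, which real workloads will not manage. The helper names are ours, and the eight-core, 1,550MHz example assumes A370M uses the full G11 configuration.

```python
XMX_ENGINES_PER_XE_CORE = 16
XMX_INT8_OPS_PER_CLOCK = 256   # per XMX engine, as quoted by Intel
XMX_TO_VECTOR_RATIO = 4        # XMX has four times the vector engine's throughput


def vector_int8_ops_per_clock() -> int:
    """8-bit ops per clock for one vector engine, implied by the 4x ratio."""
    return XMX_INT8_OPS_PER_CLOCK // XMX_TO_VECTOR_RATIO


def peak_xmx_ops_per_clock(xe_cores: int) -> int:
    """Theoretical 8-bit XMX ops per clock across the whole GPU."""
    return xe_cores * XMX_ENGINES_PER_XE_CORE * XMX_INT8_OPS_PER_CLOCK


def peak_xmx_tops(xe_cores: int, clock_mhz: float) -> float:
    """Paper peak in tera-operations per second at a given clock."""
    # ops/clock * (MHz * 1e6 clocks/s) / 1e12 simplifies to a division by 1e6.
    return peak_xmx_ops_per_clock(xe_cores) * clock_mhz / 1e6


# Assumed example: eight Xe cores at the guaranteed 1,550MHz clock.
print(f"{peak_xmx_tops(8, 1550):.1f} TOPS (theoretical ceiling)")
```

Treat the result as an upper bound for comparison between models rather than a performance prediction.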

It’s important to understand vector and XMX engines don’t exist in isolation. Sharing of resources is key in keeping die size as small as possible and throughput high, and all three types of instructions – floating point, integer and XMX – can be issued at the same time.

Why all this focus on AI? First fruit of these integrated engines is Xe Super Sampling, which is similar to Nvidia’s DLSS technology in scope and ambition. Rendering a lower-resolution image and filling in missing fidelity blanks via Xe SS enables a pseudo higher-resolution output at elevated frame rates by using the power of AI.

Understand this is not an algorithmic filter that can be applied to any game; Xe SS needs to be implemented on an engine-by-engine basis by the developer, and they’ll have a choice of using either the standard XMX version or a version built on the DP4a instruction, which also runs on GPUs without XMX engines. We wonder about developers’ enthusiasm for potentially integrating both DLSS and Xe SS into one game, especially for triple-A titles that already have DLSS in the works. There’s little doubt that strong developer relations are key to Xe SS’s future.
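For readers unfamiliar with DP4a, it is a single instruction that multiplies four pairs of 8-bit integers packed into two 32-bit words and adds the sum to a 32-bit accumulator. The Python sketch below emulates that behaviour purely for illustration. The function names are ours, and we assume signed lanes and a wrapping accumulator; it says nothing about how Xe SS itself uses the instruction.

```python
import struct


def pack_i8x4(values):
    """Pack four signed 8-bit integers into one little-endian 32-bit word."""
    return struct.unpack("<I", struct.pack("<4b", *values))[0]


def dp4a(a: int, b: int, acc: int) -> int:
    """Emulate DP4a: acc plus the dot product of four signed 8-bit lanes."""
    a_lanes = struct.unpack("<4b", struct.pack("<I", a))
    b_lanes = struct.unpack("<4b", struct.pack("<I", b))
    total = acc + sum(x * y for x, y in zip(a_lanes, b_lanes))
    # Assumption: the accumulator wraps like a signed 32-bit register.
    return (total + 2**31) % 2**32 - 2**31


a = pack_i8x4([1, 2, 3, 4])
b = pack_i8x4([5, 6, 7, 8])
print(dp4a(a, b, 10))  # 1*5 + 2*6 + 3*7 + 4*8 + 10
```

Four multiply-accumulates per instruction is what makes 8-bit inference practical on ordinary shader hardware, albeit at a lower rate than dedicated matrix engines.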

Modern multimedia engine

Intel claims to have an industry-leading media engine baked into each Arc GPU. Of particular prominence is hardware encoding of AV1, which is a first. Makes sense on mobile for creators looking to package up their videos with the smallest file sizes and highest quality.

The engine block supports HDMI 2.0b and DisplayPort 1.4a (2.0-ready), and outputs to four displays at up to 4K120 HDR or two displays at 8K60 HDR, with refresh rates of up to 360Hz at 1440p. Good luck with getting that high on laptop GPUs, but it’s good to see the capability, nonetheless. What’s strange is the inclusion of HDMI 2.0b instead of 2.1; we can’t imagine why Intel has done this.

Arc GPUs carry VESA Adaptive-Sync technology and go a step further with an in-house technology known as Speed Sync. Optimised for ultra-high-framerate eSports games and run with V-sync switched off, the smarts intelligently withhold frames that are still being drawn and instruct the display to show only the most recently completed frame. The purpose is to ensure there’s no tearing at, say, 240Hz-plus. Difficult to get your head around without testing, it’s one feature we’re eager to learn more about.

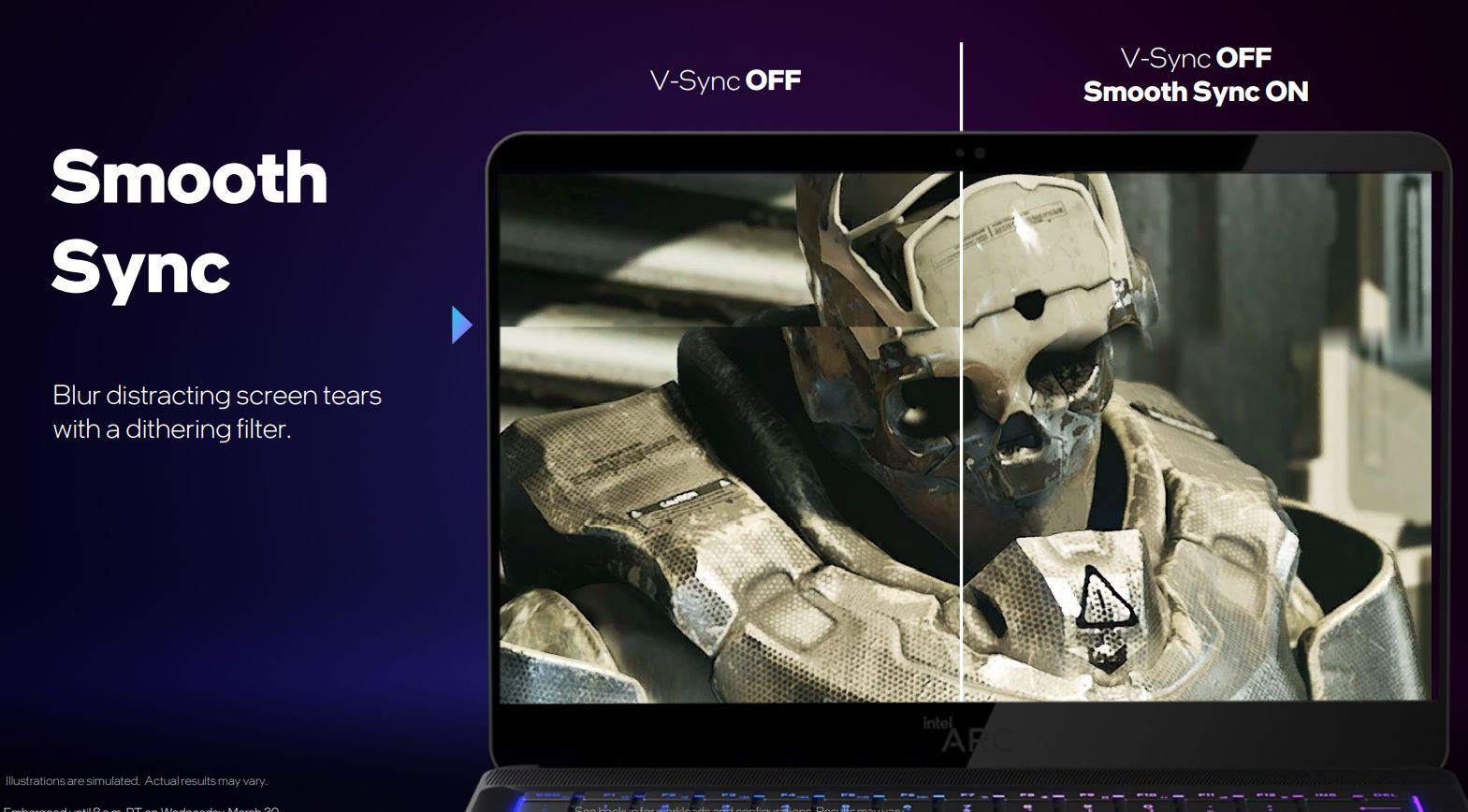

Smooth Sync, meanwhile, does something a bit different. Available on all Arc GPUs, it works by smoothing out visible tearing by blending 32 scan lines at the intersection of two frames. It doesn’t get rid of tearing, of course, but smooths it out so it’s less obvious to the eye. Why do this when adaptive sync gets rid of tearing altogether? Intel says Smooth Sync is useful for entry-level laptops and displays where adaptive sync is not available from the panel.
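Intel hasn’t detailed the exact filter, so the Python sketch below is only our illustration of the idea: it assumes a simple linear cross-fade over a 32-line band centred on the tear, with frames represented as lists of rows of brightness values. The function name and the weighting are assumptions, not Intel’s implementation.

```python
BLEND_LINES = 32  # band height quoted for Smooth Sync


def smooth_tear(old_frame, new_frame, tear_row, blend_lines=BLEND_LINES):
    """Return a torn frame with the tear softened by a linear cross-fade.

    Rows above the band come from the older frame, rows below from the
    newer one, as happens when the buffer flips mid-scanout.
    """
    height = len(old_frame)
    start = max(0, tear_row - blend_lines // 2)
    end = min(height, start + blend_lines)
    out = []
    for y in range(height):
        if y < start:
            out.append(list(old_frame[y]))
        elif y >= end:
            out.append(list(new_frame[y]))
        else:
            # Weight of the newer frame ramps up across the band.
            w = (y - start + 0.5) / (end - start)
            out.append([(1 - w) * o + w * n
                        for o, n in zip(old_frame[y], new_frame[y])])
    return out


# Toy example: a dark frame tearing into a bright one at row 32 of 64.
old = [[0.0] * 4 for _ in range(64)]
new = [[100.0] * 4 for _ in range(64)]
blended = smooth_tear(old, new, tear_row=32)
print([round(blended[y][0], 1) for y in (15, 16, 32, 47, 48)])
```

Without the blend, brightness would jump from 0 to 100 in a single line; with it, the step is spread across the band, which is why the tear is still there but harder to spot.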

Performance

Carrying all the goodies emanating from DirectX 12 Ultimate – ray tracing, variable rate shading, mesh shading and sampler feedback – ensures Arc is thoroughly modern.

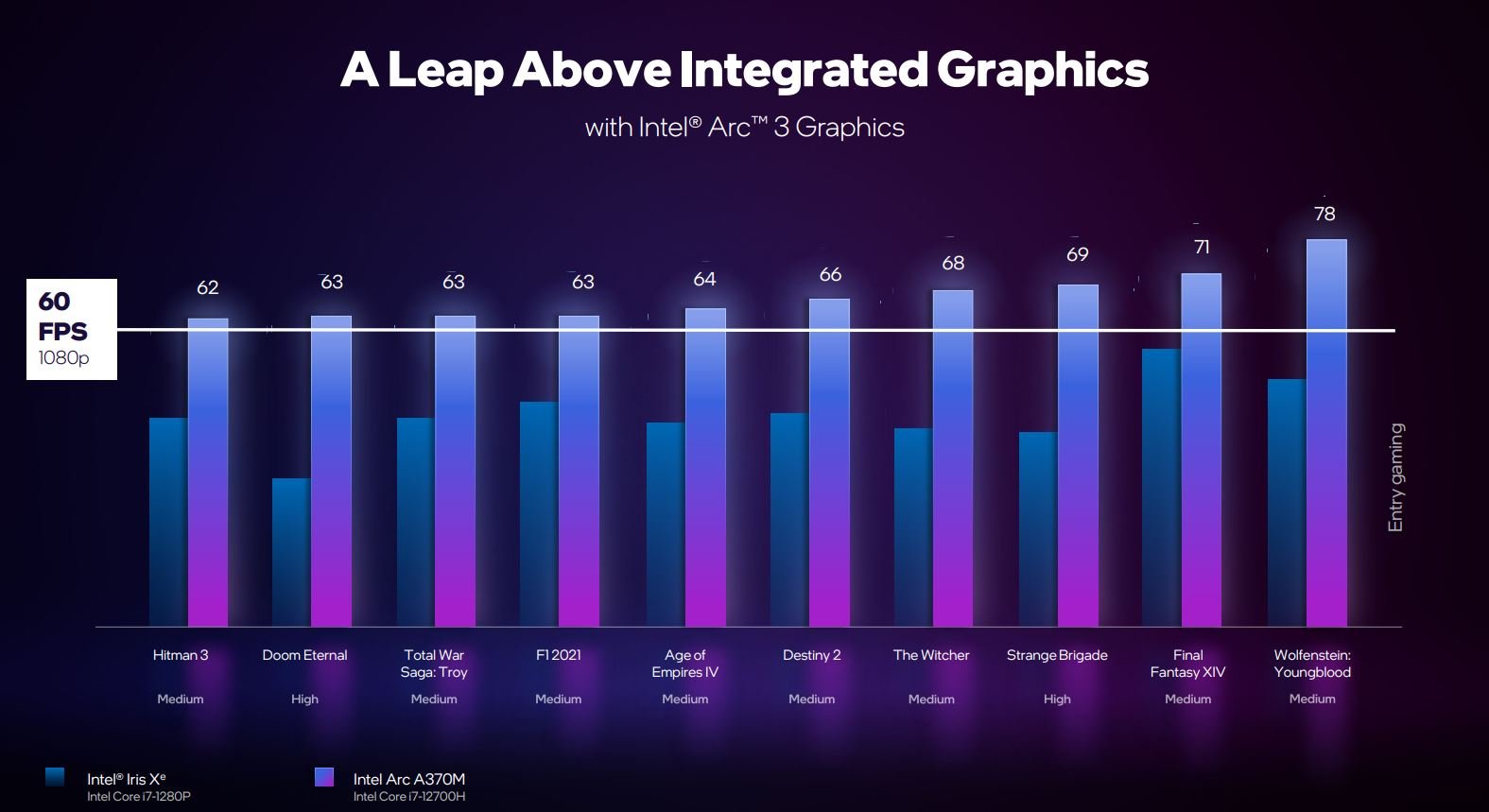

Intel has only compared performance of the Arc A370M against its own integrated Iris Xe graphics contained within a Core i7-1280P chip. That’s not overly helpful; comparisons against lowest-rung discrete Nvidia and AMD mobile GPUs would have been far more instructive and beneficial.

Nevertheless, Arc A370M, operating within a 35-50W range and therefore suitable for thin-and-light laptops, offers about two times the performance in a range of popular and heavy-hitting games. 1080p60 is achieved, but we don’t know the exact settings and quality levels used. Going by these numbers, Arc A350M will barely be any faster than premium integrated graphics, and exactly how it compares against the RDNA 2-powered Ryzen 6000 Series mobile is completely unknown. Arc is also faster for creator workloads, says Intel, and duly trots out slides illustrating this fact.

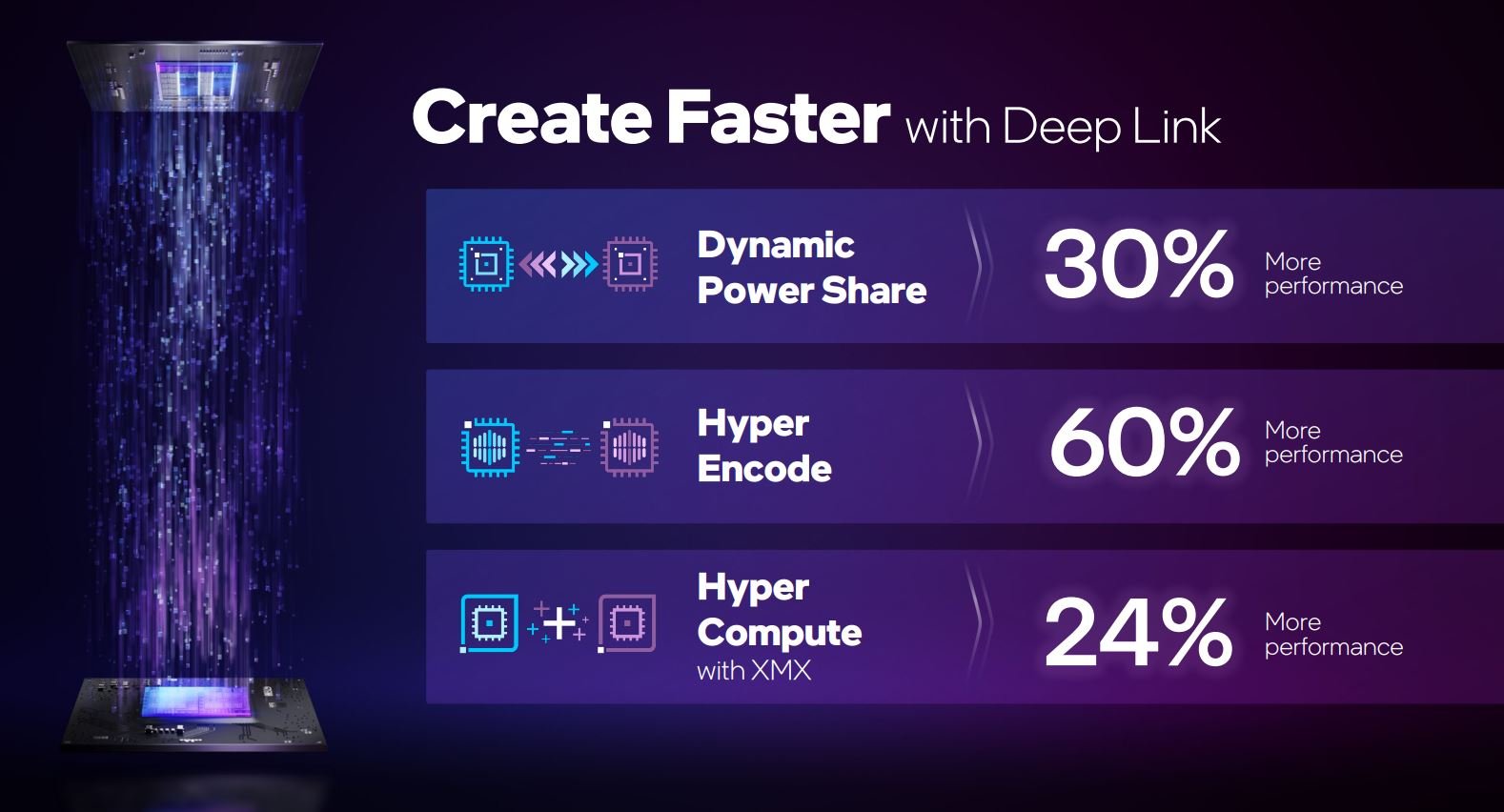

Maximising power where it’s needed most, all 12th Gen Core and Arc notebooks carry what the firm calls Deep Link technology. In a nutshell, it dynamically increases either GPU or CPU power depending upon workload – AMD SmartShift, anyone? – and can result in an extra 20-30 per cent performance boost over running both processors in their own power silos.

An interesting feature of combining 12th Gen mobile integrated graphics with Arc discrete GPUs is Hyper Encode. By splitting a video stream into 15-30-frame blocks and allocating work to either the CPU’s IGP or GPU’s engines, Intel says it can boost performance by a meaningful amount. Think of this as multi-GPU for video, and it works best if both IGP and dGPU are evenly matched. The same combination strategy applies to compute workloads, as well, under the auspices of Hyper Compute.
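The block-splitting idea can be sketched in a few lines of Python. This is our own simplification, assuming blocks are handed out alternately to the two engines; Intel hasn’t said how its scheduler actually balances the load, and the names used here are hypothetical.

```python
from itertools import cycle


def split_into_blocks(frame_count, block_size=30):
    """Split frame indices into consecutive blocks of at most block_size."""
    return [range(start, min(start + block_size, frame_count))
            for start in range(0, frame_count, block_size)]


def assign_blocks(blocks, encoders=("igpu", "dgpu")):
    """Pair each block with an encoder, alternating between them."""
    # Assumption: simple round-robin; a real scheduler may weigh engine speed.
    return list(zip(cycle(encoders), blocks))


# Toy example: a 100-frame clip split into 30-frame blocks.
for encoder, block in assign_blocks(split_into_blocks(100)):
    print(f"{encoder}: frames {block.start}-{block.stop - 1}")
```

An even split like this only pays off when both engines finish their blocks in similar time, which fits Intel’s point that the technique works best with evenly matched IGP and dGPU.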

Models

The first 12th Gen Core and Intel Arc-powered laptops are available now, starting at $899. That sum brings the Arc 3 discrete GPUs into play. Expect a bevy of other announcements in the next few weeks; Intel reckons on an on-shelf date later in April for multiple models.

The Wrap

Coming to laptop first, Intel Arc discrete graphics are purposed to deliver IGP-beating performance capable of running modern games at 1080p60. Imbued with rasterisation, ray tracing and AI-specific processing in good measure, Arc is a forward-looking architecture spanning multiple segments.

We’re unsure about ray tracing capabilities for Arc 3, don’t quite understand the lack of HDMI 2.1, and are not overwhelmed by Intel-provided performance numbers.

Nevertheless, another entrant in the discrete GPU market can only be viewed as a good thing. Arc is pregnant with promise and benchmark numbers will reveal all, yet what’s abundantly clear is that now’s a great time to be considering a gaming laptop.