At yesterday’s GTC 2022 keynote, CEO Jensen Huang introduced the next-gen Hopper GPU architecture and the Hopper H100 GPU. The architecture is named after computer science pioneer Grace Hopper. Nvidia did not reveal any core counts or clock speeds, though concrete details about the architecture and throughput were confirmed.

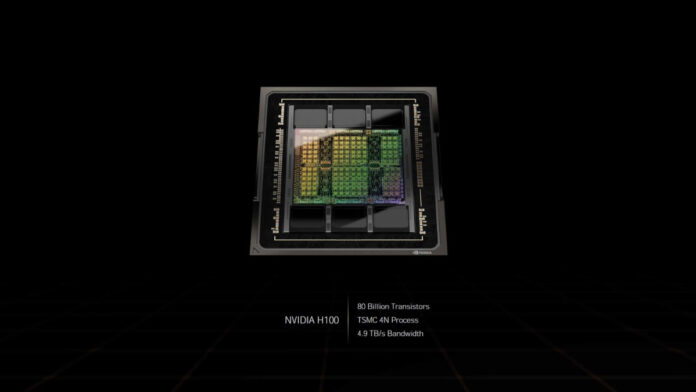

TSMC 4N process node

The H100 is a monolithic chip manufactured on a custom TSMC 4N process and packs 80 billion transistors, a large uptick over its predecessor, the Ampere A100, which houses 54 billion transistors. The A100 launched in 2020 and used TSMC’s 7nm process.

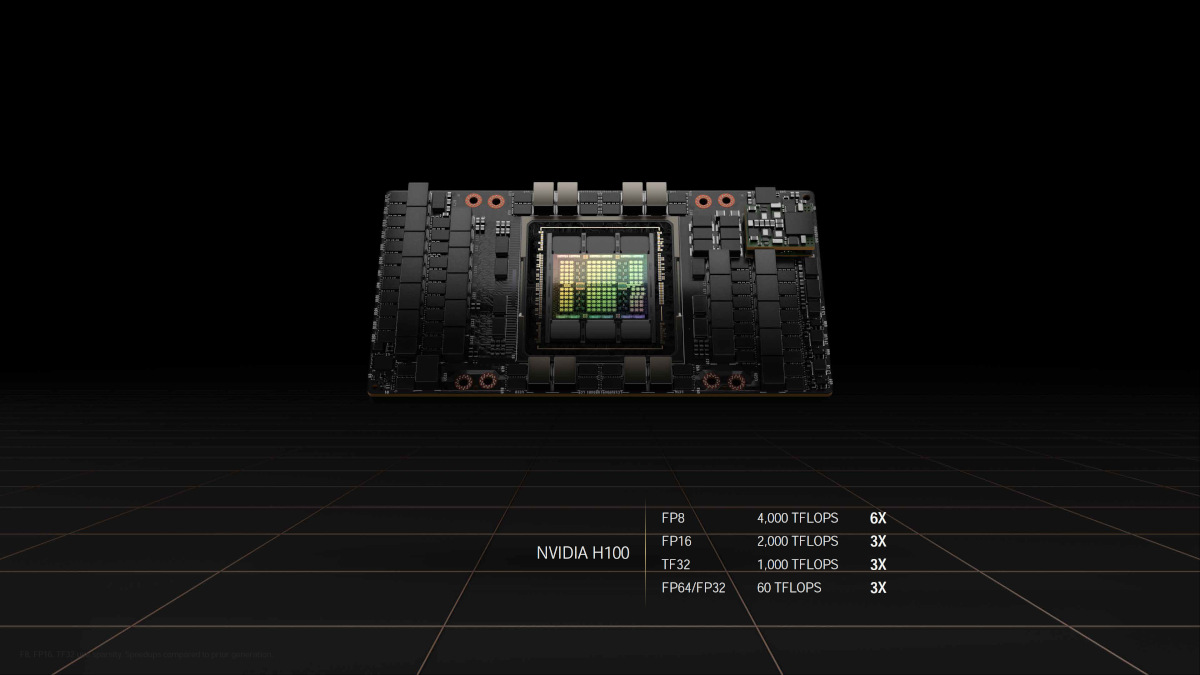

Speaking of performance, Nvidia reckons H100 offers 60TFLOPS of FP32 compute, compared with 19.5TFLOPS for A100. More important to users of such a GPU, TensorFloat-32 performance with sparsity is up by well over 3x: 1,000TFLOPS vs. 312TFLOPS. The 3x theme is pervasive when Nvidia refers to H100 in relation to its direct predecessor.
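To put the "3x theme" in numbers, here is a minimal Python sketch that divides the quoted H100 figures by the quoted A100 figures. It uses only the peak numbers cited above; the dictionary layout and the `speedup` helper are illustrative names, and peak ratios say nothing about real-world workloads.

```python
# Peak throughput figures quoted in the article, in TFLOPS.
QUOTED_TFLOPS = {
    "FP32": {"H100": 60.0, "A100": 19.5},
    "TF32 (sparsity)": {"H100": 1000.0, "A100": 312.0},
}


def speedup(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


# Generational ratio for each quoted metric.
speedups = {
    name: speedup(figures["H100"], figures["A100"])
    for name, figures in QUOTED_TFLOPS.items()
}

for name, ratio in speedups.items():
    print(f"{name}: {ratio:.2f}x")
# FP32: 3.08x
# TF32 (sparsity): 3.21x
```

Both ratios land just above 3x, which matches the way Nvidia frames the comparison.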

First use of next-gen HBM3 memory

Memory bandwidth also improves significantly over the previous generation. H100 offers up to 3TB per second, a 50 per cent increase over the last gen, thanks to the use of HBM3. That makes Nvidia the first accelerator vendor to use the latest generation of high-bandwidth memory.

PCIe 5.0 and NVLink interconnect technology

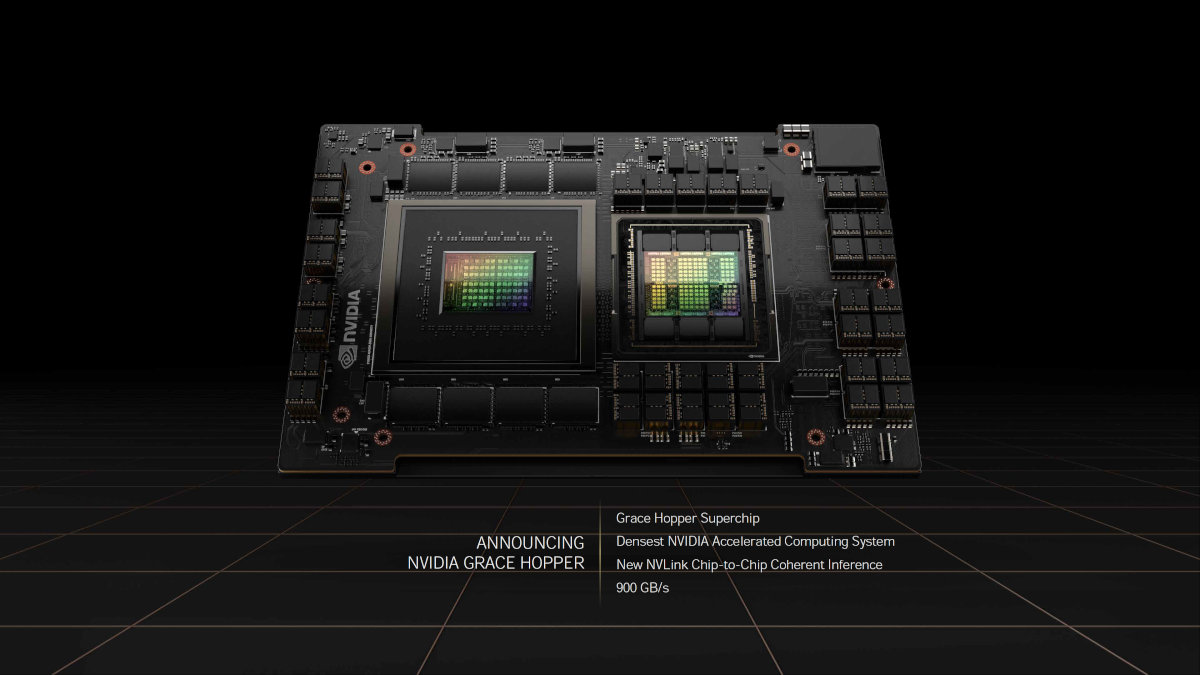

Nvidia also debuted its fourth-generation NVLink interface. The H100’s NVLink 4 implementation provides 900GB/s of bandwidth, compared with 600GB/s for A100’s third-generation NVLink interface. That’s 50 per cent more bandwidth. PCIe 5.0, meanwhile, delivers double the throughput of PCIe 4.0.
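As a rough illustration of what the NVLink uplift means, the Python sketch below checks the 50 per cent figure and estimates the best-case time to move a payload at each quoted peak rate. The 90GB payload is a hypothetical value chosen for easy arithmetic, and the estimate ignores protocol overhead and latency.

```python
# Peak NVLink bandwidth figures quoted in the article, in GB/s.
NVLINK4_GBPS = 900.0  # H100, fourth-generation NVLink
NVLINK3_GBPS = 600.0  # A100, third-generation NVLink

# Hypothetical payload size in GB, chosen only for illustration.
PAYLOAD_GB = 90.0


def percent_increase(new: float, old: float) -> float:
    """Return the increase from `old` to `new` as a percentage."""
    return (new - old) / old * 100.0


def transfer_seconds(payload_gb: float, bandwidth_gb_per_s: float) -> float:
    """Best-case transfer time at peak bandwidth, ignoring overhead."""
    return payload_gb / bandwidth_gb_per_s


nvlink_gain = percent_increase(NVLINK4_GBPS, NVLINK3_GBPS)
t_h100 = transfer_seconds(PAYLOAD_GB, NVLINK4_GBPS)
t_a100 = transfer_seconds(PAYLOAD_GB, NVLINK3_GBPS)

print(f"NVLink uplift: {nvlink_gain:.0f}%")
print(f"{PAYLOAD_GB:.0f}GB at peak rate: {t_h100:.2f}s vs. {t_a100:.2f}s")
```

Under these assumptions, the same transfer takes two-thirds as long on NVLink 4 as on the previous generation.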

Transformer Engine

The new Transformer Engine in the H100 chip promises to speed up model training by up to six times, according to Nvidia.

“Transformer Engine, part of the new Hopper architecture, will significantly speed up AI performance and capabilities, and help train large models within days or hours,” said Nvidia’s Dave Salvator.

Grace Hopper Superchip

Wrapping up, Nvidia also announced its first datacenter CPU, appropriately called ‘Grace CPU Superchip.’ Nvidia will also offer “Grace Hopper Superchips” that combine a Grace CPU with a Hopper GPU on a single module, with NVLink providing a 900GB per second interface between the two.

While Hopper will arrive later this year in the third quarter, the Grace Hopper Superchip won’t be available until Q3 2023.