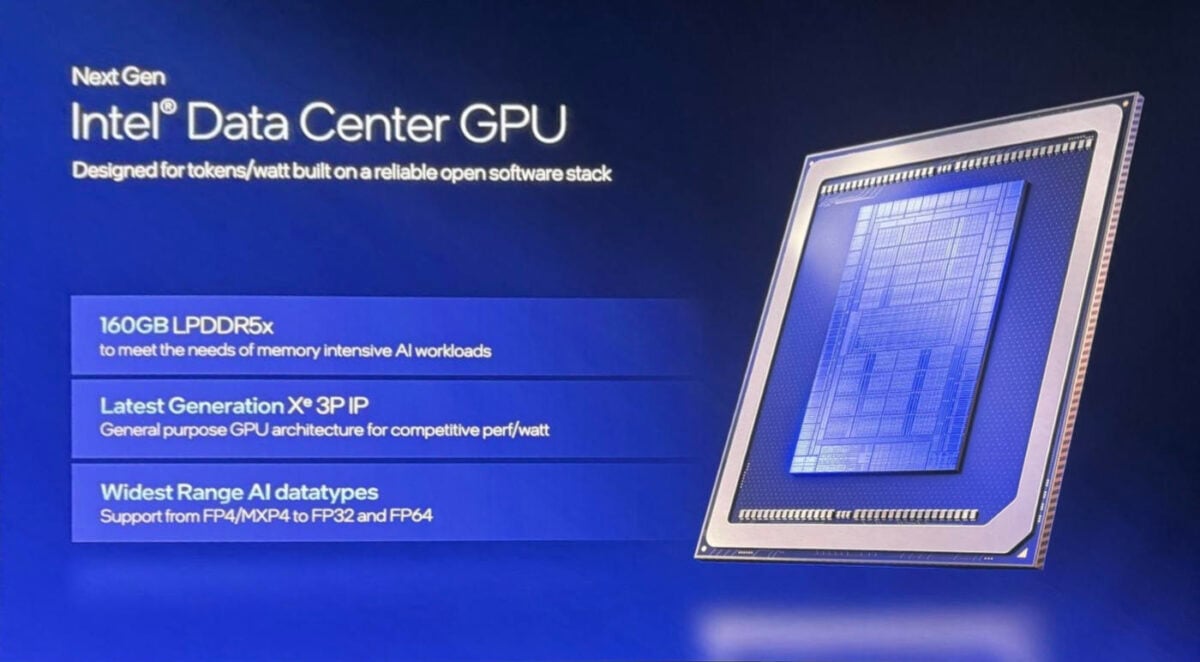

Intel has expanded its AI accelerator portfolio with a new air-cooled GPU targeting servers and data centres. Dubbed Crescent Island, this GPU leverages the company’s next-generation Xe3P Celestial architecture, a performance-enhanced version of the Xe3 architecture found on Panther Lake processors. The idea is to offer a cost- and power-efficient solution for AI inferencing.

Intel announced this new addition to its machine learning hardware stack at the 2025 OCP Global Summit, pitching it at the growing demands of AI data centres, particularly inference workloads. To that end, Intel is equipping it with 160GB of memory so it can run large language models efficiently.

While Intel didn’t reveal the full specs of this accelerator, it did share these key features:

- Xe3P microarchitecture with optimised performance-per-watt

- 160GB of LPDDR5X memory

- Support for a broad range of AI data types, such as FP4, MXFP4, FP32, and FP64, ideal for “tokens-as-a-service” providers and inference use cases
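As a rough illustration of why low-precision formats matter when serving large language models from a fixed 160GB pool, the sketch below estimates how many model weights fit at each of the listed data types. It is a back-of-the-envelope calculation, not an Intel figure. It assumes 160GB means 160 × 10^9 bytes, counts weights only (ignoring the KV cache, activations and runtime overhead), and assumes the 4-bit microscaling entry refers to the OCP MXFP4 format (4-bit elements plus one shared 8-bit scale per 32-element block).

```python
"""Back-of-the-envelope estimate of how many model weights fit in 160GB.

Assumptions (not Intel figures): 1 GB = 10**9 bytes, weights only, and
MXFP4 stores 4-bit elements with one shared 8-bit scale per 32 elements.
"""

CAPACITY_GB = 160  # advertised memory capacity

# Storage cost per parameter, in bits, for each data type.
BITS_PER_PARAM = {
    "FP4": 4.0,
    "MXFP4": 4.0 + 8 / 32,  # assumed: 32-element blocks sharing one 8-bit scale
    "FP32": 32.0,
    "FP64": 64.0,
}


def max_params_billions(capacity_gb: float, bits_per_param: float) -> float:
    """Return how many billion parameters fit, counting weights only."""
    capacity_bits = capacity_gb * 1e9 * 8
    return capacity_bits / bits_per_param / 1e9


if __name__ == "__main__":
    for dtype, bits in BITS_PER_PARAM.items():
        print(f"{dtype:>6}: ~{max_params_billions(CAPACITY_GB, bits):.0f}B parameters")
```

Under these assumptions, FP4 weights let the pool hold roughly eight times as many parameters as FP32, while MXFP4's shared scales cost only a small fraction of that gain.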

The standout feature of Crescent Island is undoubtedly its 160GB LPDDR5X memory pool, a marked departure from the GDDR6, GDDR7, and HBM memory that GPUs usually rely on. While LPDDR5X doesn’t offer the same bandwidth as these GPU-optimised memory types, it is far more power-efficient, and it can also allow for a more compact design.

With high-capacity LPDDR5X packages offering up to 32GB each, Intel could reach its advertised 160GB with as few as five. However, to provide high enough bandwidth, it will more likely use 10 (10 x 16GB packages) or 20 (20 x 8GB), depending on the GPU’s memory bus width, since each additional package widens the overall memory interface.
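The package arithmetic above can be sketched as follows. The per-package interface width (64 bits) and data rate (8533 MT/s) are hypothetical placeholders chosen only for illustration, since Intel hasn’t disclosed Crescent Island’s bus width or memory speed. The point is that, at a fixed per-package width, spreading 160GB across more, smaller packages widens the bus and raises theoretical peak bandwidth proportionally.

```python
"""Sketch: LPDDR5X package count vs. peak bandwidth for a 160GB pool.

ASSUMED_BITS_PER_PACKAGE and ASSUMED_DATA_RATE_MTS are hypothetical
placeholders; Intel has not published these figures for Crescent Island.
"""

TOTAL_CAPACITY_GB = 160
ASSUMED_BITS_PER_PACKAGE = 64   # hypothetical interface width per package
ASSUMED_DATA_RATE_MTS = 8533    # hypothetical transfer rate (MT/s)


def packages_needed(total_gb: int, per_package_gb: int) -> int:
    """Number of equal-capacity packages needed to reach total_gb exactly."""
    if total_gb % per_package_gb:
        raise ValueError("capacity must divide evenly into packages")
    return total_gb // per_package_gb


def peak_bandwidth_gbs(bus_width_bits: int, data_rate_mts: int) -> float:
    """Theoretical peak in GB/s: bytes per transfer x million transfers/s / 1000."""
    return bus_width_bits / 8 * data_rate_mts / 1000


def configurations(per_package_options=(32, 16, 8)):
    """Return (GB per package, package count, bus width, peak GB/s) tuples."""
    rows = []
    for per_package_gb in per_package_options:
        count = packages_needed(TOTAL_CAPACITY_GB, per_package_gb)
        bus_bits = count * ASSUMED_BITS_PER_PACKAGE
        rows.append((per_package_gb, count, bus_bits,
                     peak_bandwidth_gbs(bus_bits, ASSUMED_DATA_RATE_MTS)))
    return rows


if __name__ == "__main__":
    for gb, count, bus, bw in configurations():
        print(f"{count:>2} x {gb}GB -> {bus}-bit bus, ~{bw:.0f} GB/s peak")
```

With these placeholder numbers, going from five 32GB packages to twenty 8GB packages quadruples both the bus width and the theoretical peak bandwidth, which is the trade-off that makes the 10- or 20-package layouts more plausible.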

Although cheaper than HBM, LPDDR5X is an unusual choice for a dedicated GPU, especially for bandwidth-hungry tasks. Intel, however, seems confident in the power and cost advantages it offers.

“AI is shifting from static training to real-time, everywhere inference—driven by agentic AI,” said Sachin Katti, CTO of Intel. “Scaling these complex workloads requires heterogeneous systems that match the right silicon to the right task, powered by an open software stack. Intel’s Xe architecture data centre GPU will provide the efficient headroom customers need —and more value—as token volumes surge.”

Intel says its approach is workload-centric, integrating diverse compute types with an open, developer-first software stack to help speed up system deployments at scale.

Intel has indicated that its open, unified software stack is currently being developed and tested on Arc Pro B-Series GPUs to enable early optimisations, with customer sampling of Crescent Island expected in the second half of 2026.