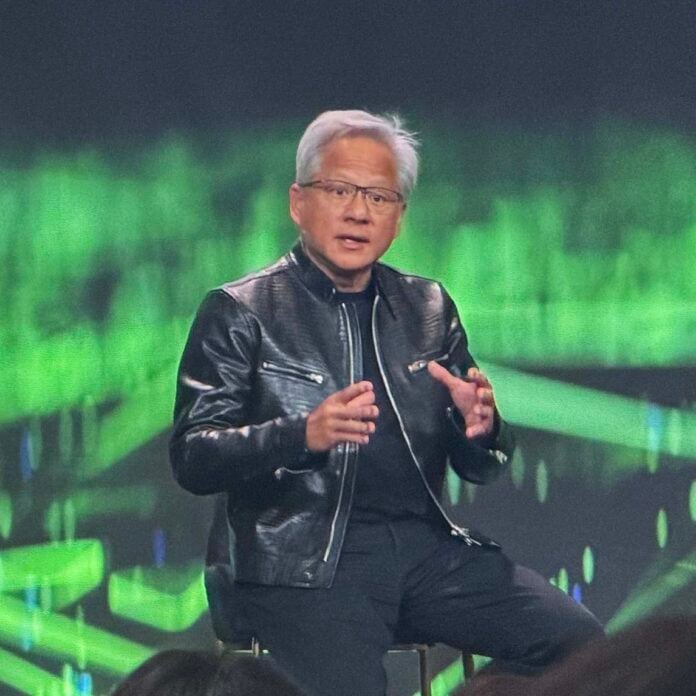

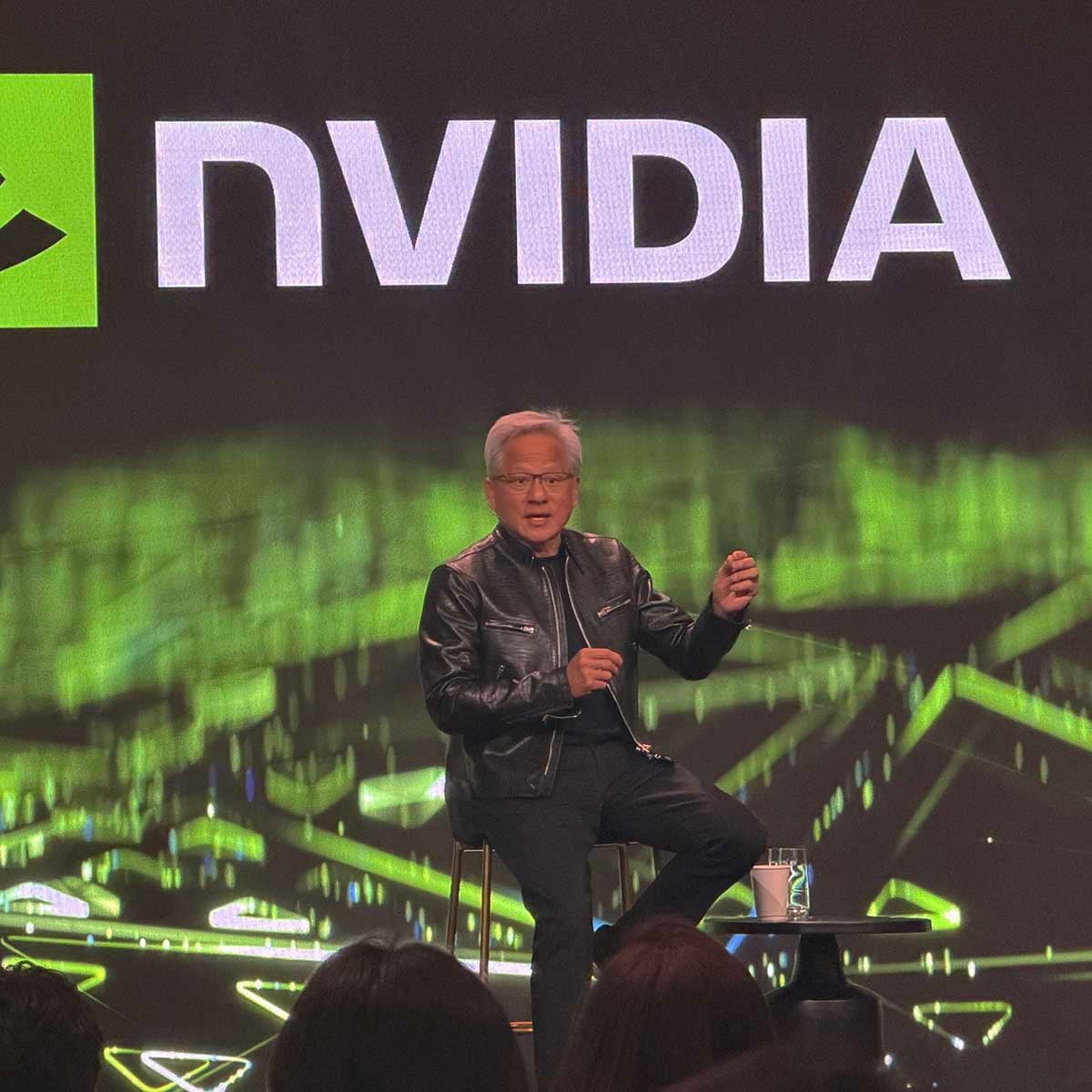

The Nvidia GeForce RTX 5090 may represent the final peak of traditional 3D rendering in games, as CEO and founder Jensen Huang envisions future graphics being dominated by AI, including generative AI. During a press Q&A session at CES 2026, I asked Huang whether we’re really never going to see a substantial jump in shader power again.

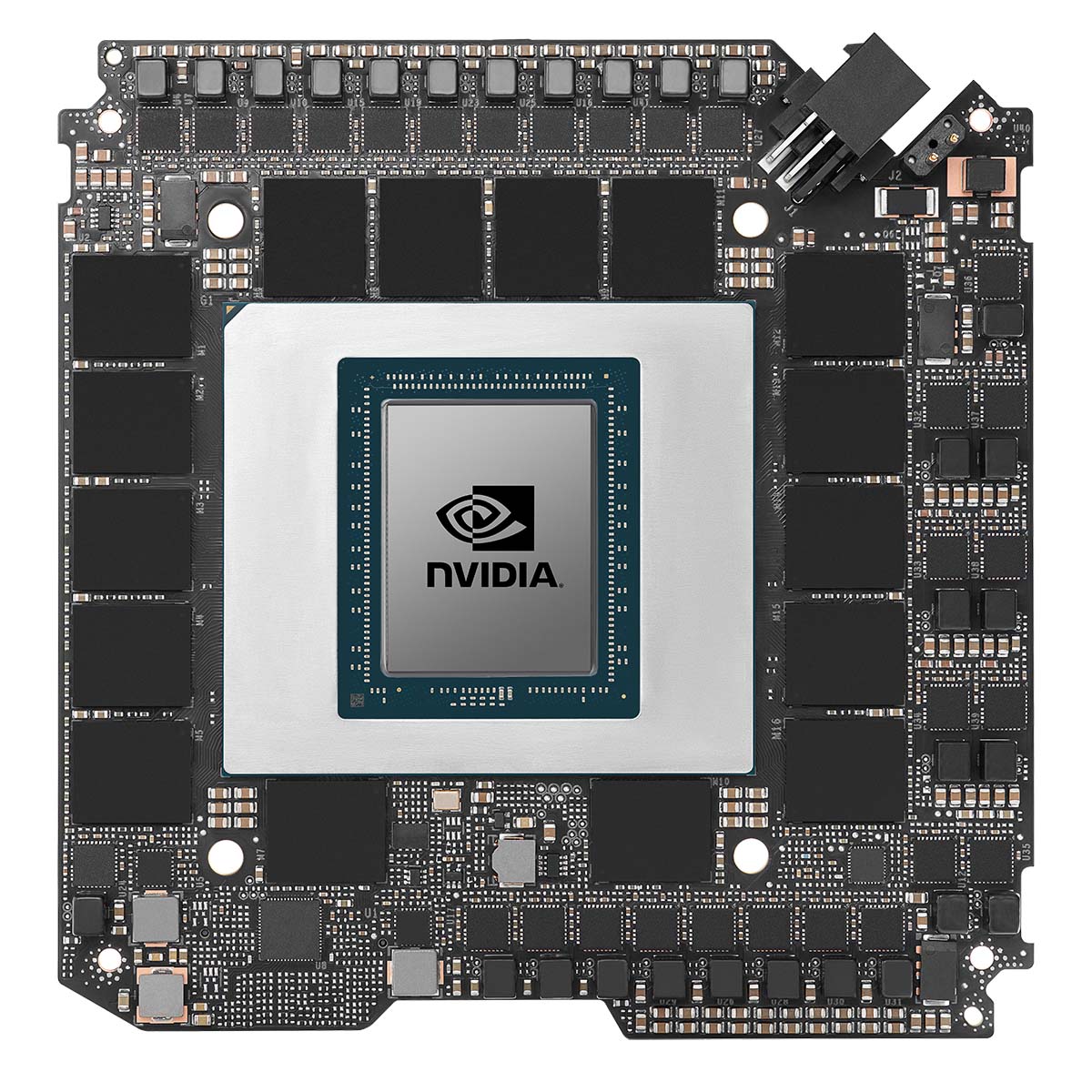

‘It’s not because of anything from [but] the fact that Moore’s Law has ended,’ Huang responded, while swerving the opportunity to provide a firm yes or no. ‘We make these chips as large as we possibly can, and if you look at a 5090 – it’s giant, so you’re really at the limits, the physical limits.’

I don’t entirely accept this answer. Moore’s Law is certainly over in terms of timescales, but die shrinks are still happening, even if they’re taking longer, and there’s still scope to increase shader power in a future GPU. We are definitely approaching the physical limits of silicon, though, and even if we’re not quite there yet, you can understand why Nvidia is swapping its priorities and trying to rewrite the rendering rulebook with AI.

‘The bottom line is that, in the future, it is very likely that we’ll do more and more computation on fewer and fewer pixels,’ he says. ‘And by doing so, the pixels that we do compute are insanely beautiful, and then we use AI to infer what must be around it. So it’s a little bit like generative AI, except we can heavily condition it with the rendered pixels.’

The traditional GPU as we know it will still play an important role in Huang’s graphical vision, but AI will play a much larger part. ‘I think that this likely will be the future,’ he tells me, ‘and then the fusion between rendering and generative AI, the generative AI that we all know now, that fusion will likely also happen in the future.’

How will this play out? ‘I think we’re about to see another inflection point coming,’ Huang says. ‘Then, after that, the entire virtual world is going to come alive. All the characters I mentioned earlier [when answering a previous question] will be AI-based, and all the animation will be AI-based, and all of the fluid dynamics will be computational-based, but it will be AI-emulated physics. Everything is just going to look so incredibly real and so immersive. I’m super enthusiastic about it.’

It’s a vision that’s likely to be greeted with scepticism by many PC gamers, but Huang recalls when Nvidia announced the very first RTX GPUs in 2018. ‘When I first launched it, we got a lot of criticism, you know, for DLSS, and now people love it,’ Huang says. ‘Because ray tracing requires so much computation, the frame rate was incredibly low, so in order to get the frame rate to be high, we used up-resing essentially, right? [We used] AI to generate samples that, otherwise, we didn’t compute at all.’
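To put a rough number on those samples that were never computed, here is a minimal Python sketch of how much of the final image is actually rendered when a game upscales from a lower internal resolution. The resolutions and the function name are illustrative assumptions, not figures from Huang or Nvidia.

```python
def rendered_fraction(internal, output):
    """Share of output pixels that were rendered before upscaling.

    `internal` and `output` are (width, height) tuples in pixels.
    """
    internal_width, internal_height = internal
    output_width, output_height = output
    return (internal_width * internal_height) / (output_width * output_height)


# Hypothetical example: render at 1920x1080, display at 3840x2160.
fraction = rendered_fraction((1920, 1080), (3840, 2160))
print(f"Rendered: {fraction:.0%}, inferred: {1 - fraction:.0%}")
# prints "Rendered: 25%, inferred: 75%"
```

In that assumed case, three out of every four pixels on screen come from the upscaler rather than the shaders, which is the direction Huang is describing.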

DLSS has progressed massively since those days. It’s gone from the comparatively simple convolutional neural network (CNN) approach to upscaling, which often resulted in blurriness and nasty smearing effects, to the image wizardry possible with the latest Transformer model.

Meanwhile, frame gen has pushed frame rates to stratospheric levels. I think of frame gen as a useful tool in the box, rather than a magic fix-all. If your game only runs at 15fps, it will still run horribly with DLSS 4.5 and 6x multi frame gen, even if the frame rate counter says 80fps. However, if your starting frame rate is decent, frame gen can work wonders.
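The 15fps example comes down to simple arithmetic, sketched below in Python. This is an idealised model that assumes the multiplier applies with no overhead; the function name is hypothetical, and real-world overhead would pull the displayed figure below this ideal.

```python
def frame_gen_estimate(base_fps, multiplier):
    """Idealised frame generation estimate (assumes zero overhead).

    Returns the best-case displayed frame rate and the time between
    frames the game actually renders, in milliseconds.
    """
    displayed_fps = base_fps * multiplier
    rendered_frame_time_ms = 1000 / base_fps
    return displayed_fps, rendered_frame_time_ms


displayed, frame_time = frame_gen_estimate(15, 6)
print(f"Counter: up to {displayed}fps, new rendered frame every {frame_time:.1f}ms")
# prints "Counter: up to 90fps, new rendered frame every 66.7ms"
```

The counter climbs, but under this model the game still only produces a freshly rendered frame about every 67ms, which is why a low starting frame rate continues to feel sluggish.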

Jensen Huang clearly thinks this AI-based approach is the way of the future, with more and more of the latest gaming tech based on neural rendering rather than traditional rasterisation. We may not all like it, but we can’t force Nvidia to pack more CUDA cores into its GPUs, and if there’s one company that can use AI to do what it wants, it’s undoubtedly Nvidia.

We’re here at CES 2026, and Club386 will be bringing you loads more of the latest PC hardware news from the show floor.