Hot on the heels of HPC-related announcements at the ISC event in Hamburg, Germany, Intel is revving up for important launches this year. We now know the next generation of datacentre GPUs is called Rialto Bridge. Sampling in mid-2023 and replacing the 100bn-transistor Ponte Vecchio, the chip is extremely important for Intel’s AI and machine learning ambitions.

Further out, Intel is integrating high-performance x86 CPU and Xe GPU cores into one powerful, flexible chip known as the Falcon Shores XPU.

Debuting in 2024, Falcon Shores is novel insofar as it harnesses a multi-tile approach to build an eclectic range of configurations. Intel is betting big on addressing traditional and emerging workloads with one base design.

Club386 had the opportunity to chat with Jeff McVeigh, vice president and general manager of Intel’s Super Compute Group, to find out more about Rialto Bridge, Falcon Shores, roadmaps, execution, challenges and opportunities. Presented in a Q&A style for easy digestion, let’s dig right in.

Club386: Can you characterise the ongoing evolution of HPC workloads and the shift in balance from traditional compute and memory to machine learning and AI?

Jeff McVeigh: More and more software is being infused with AI capabilities, but it will also take some time [to proliferate]. There's code that's been around for a long time and it's not going to be replaced overnight. Nevertheless, we definitely see more AI and machine learning happening. That's why Intel's products have been designed to support both highly accurate double-precision capabilities and lower-precision AI code. It's all still about feeding the beast with data, so we look to supply hardware with as much memory bandwidth as possible. Not every application is sensitive to memory bandwidth, but we see it being a key limiter. With memory speeds not keeping up with overall compute, we need to have both existing technologies and new, future methods.

Club386: Switching gears to ISC announcements, Intel announced the Rialto Bridge GPU, which is the natural successor to Ponte Vecchio. Is there not a danger that, by announcing Rialto Bridge, you are taking the wind out of the sails of Ponte Vecchio, which is not yet shipping to customers?

Jeff McVeigh: Ponte Vecchio has also been announced with LRZ, but I'm not worried about it because there will be overlap in availability between Ponte Vecchio and Rialto Bridge for some time. We're in a race to catch up and lead [referring to Nvidia], so we need to be coming out with a fast cadence of products, and that's what we're doing. We're trying to make it as seamless as possible for customers to switch. While the sockets themselves are not compatible, we'll be providing subsystems that will be. This means you can take an x4 subsystem designed for Ponte Vecchio and upgrade that to Rialto Bridge.

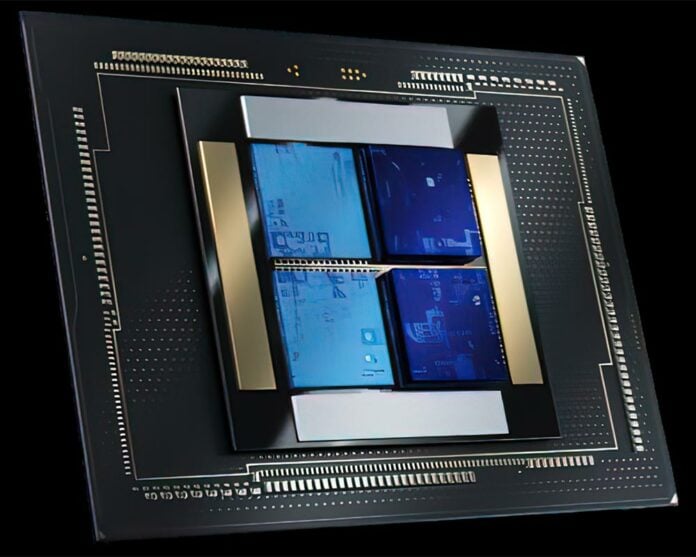

Club386: Looking solely at chip renders, Rialto Bridge appears to be a physical simplification of Ponte Vecchio, even though it will be comfortably more powerful. Can you explain the design change between generations?

Jeff McVeigh: Ponte Vecchio is like our first pancake. The first one is not always perfect. Ponte Vecchio is an extremely sophisticated design, but that sophistication has some of its own challenges. Having fewer tiles, as on Rialto Bridge, allows us to simplify the packaging process. It's a question of improving overall product yield and increasing redundancy.

Club386: Rialto Bridge has more cores than Ponte Vecchio – 160 vs. 128 – but are they functionally identical?

Jeff McVeigh: There will be a little bit more juice within each Rialto Bridge core, but we haven't disclosed what that is yet. What I can say is that it's the same microarchitecture.

Club386: Ponte Vecchio is built partly by Intel and partly by TSMC, on different nodes, depending upon the tile. How does Rialto compare from a fabrication point of view?

Jeff McVeigh: We haven't disclosed processes, but we will continue with a mixed Intel and TSMC offering.

Club386: The obvious move for Rialto would be to use Intel’s upcoming Intel 4 process, right?

Jeff McVeigh: [Laughs] I am not going to confirm or deny that one!

Club386: Falcon Shores is another hugely interesting chip. When you’re bringing so much integration into a chip – effectively a supercompute node in one socket – isn’t there a high risk of failure, and how do you mitigate that, even though it’s at least two years out from general release?

Jeff McVeigh: Going from zero to Ponte Vecchio [a 100bn-transistor chip] was a very big jump, because we didn't have a baseline to draw on there. Everything needed to come together around connectivity, Rambo cache, Xe cores, and a full software stack. It was a big jump. Falcon Shores is another jump, but not as big. We have proven packaging technologies and we've been doing x86 cores for decades. We're also doing lots of simulation analysis from an architectural standpoint, and having Ponte Vecchio and Rialto Bridge in market will help guide us on the exact composition of Falcon Shores. Software compatibility, too, will be much further along by the time it releases. In terms of granularity for Falcon Shores, think of an x86 or Xe core; that is the minimum size we can configure.

Club386: Your Falcon Shores presentation mentions a lot of >5x improvements. For example, in performance/watt, compute density, and memory capacity/bandwidth. What is the baseline you are comparing to?

Jeff McVeigh: Those numbers reference what we had in the market at that time. From the CPU side, it is the Ice Lake architecture, and do understand that not all the numbers are exactly 5x; that is a general figure we've used.

Club386: Looking at your Super Compute Roadmap, underneath next-generation Emerald Rapids you have Xeon Next, and not, as expected, Emerald Rapids HBM. Can you explain why?

Jeff McVeigh: We're still working through the design of that part. That's why we're not saying it's identical to Emerald Rapids.

Club386: Going back to the general roadmap, is there a possibility smaller-scale customers will not understand the distinction between Sapphire Rapids and the HBM version? Is there more education required on Intel’s part?

Jeff McVeigh: Yes! We need more education. I don't think the distinction is as well understood as it can be. This is why we're starting to show more performance data. We want to seed the market to get the pull-through. Any help you [the press] can give there is appreciated!

Club386: Generally speaking, what are the biggest challenges you are facing right now?

Jeff McVeigh: The overall supply chain. We're building all of these systems, but some of them have 52-week or even 72-week lead times because of shortages of other components that you would think would be really easy to get hold of. Right now, we, and everyone else, are treading stock-related water.

Club386: Given recent delays to roadmaps and rival AMD hitting the execution straps quarter after quarter, how can Intel be confident in delivering an ambitious roadmap?

Jeff McVeigh: The Frontier system is a great accomplishment for the industry. To answer your question, I'm very happy with our roadmap. We're coming out with very strong products this year. Then we're going to be on a very aggressive cadence. Our goal is to be leaders, and our roadmap speaks to that.

Club386: Final curveball question: which supercomputer is going to be faster – Intel-powered Aurora or AMD-powered El Capitan?

Jeff McVeigh: [Laughs] Oh! I can't predict where El Capitan is going to be. We just know we're shooting for number one with Aurora.